
24th December 2024

I thought we were done for major model releases in 2024, but apparently not: Alibaba’s Qwen team just dropped the Apache 2.0 licensed QvQ-72B-Preview, “an experimental research model focusing on enhancing visual reasoning capabilities”.

Their blog post is titled QvQ: To See the World with Wisdom—similar flowery language to their QwQ announcement QwQ: Reflect Deeply on the Boundaries of the Unknown a few weeks ago in November.

It’s a vision-focused follow-up to QwQ, which I wrote about previously. QwQ is an impressive openly licensed inference-scaling model: give it a prompt and it will think out loud over many tokens while trying to derive a good answer, similar to OpenAI’s o1 and o3 models.

The new QvQ adds vision to the mix. You can try it out on Hugging Face Spaces—it accepts an image and a single prompt and then streams out a very long response where it thinks through the problem you have posed it. There’s no option to send a follow-up prompt.

I’ve tried it out with a bunch of things, with mixed results—but it’s really fun seeing how it works through a problem.

My most successful prompt was “Count the pelicans” with this image:

Here’s the full response. Some highlights:

So I’ve got this picture of some pelicans, and I need to count them. Let me see... First off, I know that pelicans are those big birds with the massive beaks, right? [...]

Let me try counting again, more carefully this time. Starting from the left:

- The first pelican is fully visible, standing at the front.

- Behind it, slightly to the right, is the second pelican.

- Then, further back, there’s a third pelican.

- And next to that one, there’s a fourth pelican.

So, that’s four pelicans in total. But, I notice that on the far right of the image, there’s part of another bird, maybe a pelican’s back or tail feathers, but it’s not fully in the frame, so I don’t think I should count it as a whole pelican.

Something about the tone of voice it uses, “First off, I know that pelicans are those big birds with the massive beaks, right?”, tickles me.

I also tried this on a much harder image: it counted 17, which looks about right to me, but honestly I have trouble counting that image myself.

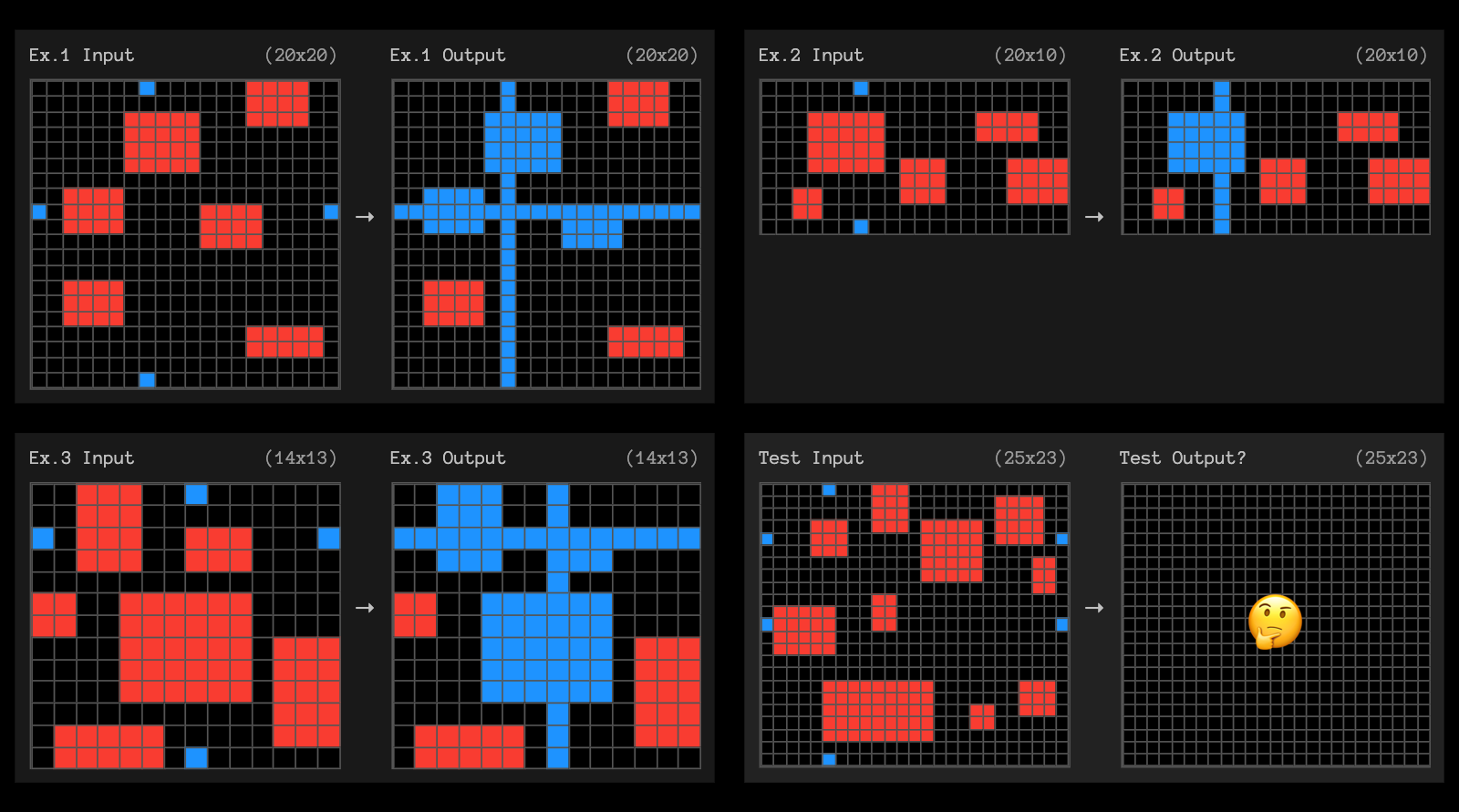

I fed in one of the ARC-AGI puzzles that o3 had failed at.

It produced a very lengthy chain of thought that was almost entirely incorrect, but had some interesting “ideas” in it:

[...] Let me try to think in terms of cellular automata rules, like Conway’s Game of Life.

In Game of Life, each cell’s state in the next generation is determined by its current state and the states of its eight neighbors.

Perhaps a similar rule applies here.
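For anyone who hasn't met it, the rule QvQ reached for fits in a few lines. This is a minimal sketch of one generation of the standard Conway rules (birth on exactly 3 live neighbours, survival on 2 or 3) on a finite grid, assuming cells beyond the edge are always dead. It illustrates the rule the model brought up, not a solution to the ARC-AGI puzzle, and `life_step` is a hypothetical name.

```python
def life_step(grid: list[list[int]]) -> list[list[int]]:
    """Return the next generation of a 0/1 grid under Conway's rules.

    Assumption: cells beyond the edge of the grid are treated as dead.
    """
    rows, cols = len(grid), len(grid[0])
    nxt = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Count live cells among the (up to) eight neighbours.
            live = sum(
                grid[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            )
            # Birth with exactly 3 neighbours; survival with 2 or 3.
            nxt[r][c] = 1 if live == 3 or (grid[r][c] == 1 and live == 2) else 0
    return nxt


# A "blinker": a horizontal bar of three cells flips to a vertical bar.
blinker = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]
print(life_step(blinker))  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

Each cell's next state depends only on its current state and its neighbour count, which is exactly the "current state plus eight neighbours" framing in the quote.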

Finally, I asked it to “Estimate the height of the dinosaur” against this image (which, as it correctly noted, is actually an inflatable dragon):

I’m not sure this task is even possible given the lack of items in the frame of comparable known height. It had a good go at it though! I particularly enjoyed how it began:

So I’ve got this inflatable Christmas decoration, and it’s a dragon! But not just any dragon—it’s a green dragon wearing a Santa hat and a red and white scarf. And get this, there’s a reindeer on its back, also wearing a Santa hat and holding onto the dragon’s wings. It’s pretty festive and colorful, with lots of vibrant greens, reds, and yellows.

The dragon has these big, friendly eyes and a smiling face, which makes it look really cheerful. Its wings are spread out, and it has white claws on its feet. The reindeer is brown with antlers and seems to be enjoying the ride on the dragon’s back.

Now, the question is, how tall is this inflatable dragon? I need to estimate its height. Let me see. I’m standing next to it, and I’m about 5 feet 10 inches tall. The dragon is definitely taller than me. Maybe around 8 or 9 feet high? But I should try to be more precise.

I wonder how it decided that its own height was 5 feet 10 inches!

Running QvQ locally

All of my experiments so far have used the hosted Hugging Face demo. I’m hoping to try it out on my own laptop soon. I think it should just fit in the 64GB of RAM on my M2, maybe with a smaller quantization.
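A rough back-of-the-envelope check of why quantization matters here, assuming a nominal 72 billion parameters and counting only the weights (no KV cache, activations, vision encoder overhead or quantization scale factors). `weight_size_gb` is a hypothetical helper, and real memory use will be somewhat higher than these figures.

```python
def weight_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in decimal gigabytes.

    Ignores the KV cache, activations and per-group quantization scales,
    so actual memory use will be somewhat higher.
    """
    bytes_total = n_params * bits_per_weight / 8
    return bytes_total / 1e9


# Assumption: a nominal 72 billion parameters for QvQ-72B-Preview.
N_PARAMS = 72e9

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_size_gb(N_PARAMS, bits):.0f} GB")
```

By this estimate 16-bit weights (~144 GB) and even 8-bit weights (~72 GB) exceed 64GB, so a 4-bit quantization (~36 GB) is the realistic option on a 64GB machine.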

Right now the options are:

As a happy user of Ollama’s qwq port I’m hoping they add a QvQ release at some point soon as well.


Comments

This model is fun. Uploading images and asking it a user research style question like "Please think aloud while viewing this image" creates gems like this:

"Let me take another close look at the sandwich. The way the light reflects off the melted cheese highlights its creamy texture. The pasta pieces are well-integrated into the cheese, and the herbs are evenly distributed, adding color and likely flavor.

I can almost taste it now. The combination of textures and flavors must be divine. The crispy bread, the melty cheese, the soft pasta, the crunch from the fried bits, and the freshness from the herbs—all working together in harmony.

I think I might have to go out and find this sandwich place soon. Or perhaps I'll try recreating it at home this weekend. Either way, this image has certainly whetted my appetite for a delicious grilled cheese sandwich."

I got it working on my laptop! M2 64GB and needs 38GB of disk space:

```shell
uv run --with 'numpy<2.0' --with mlx-vlm python \
  -m mlx_vlm.generate \
  --model mlx-community/QVQ-72B-Preview-4bit \
  --max-tokens 10000 \
  --temp 0.0 \
  --prompt "describe this" \
  --image pelicans-on-bicycles-veo2.jpg
```

Output of that command here: https://simonwillison.net/2024/Dec/24/qvq/#with-mlx-vlm

Being able to run SOTA models on my Mac has been so cool. I am using it to let Molmo vision, via `mlx-vlm`, click on my phone [1].

Although it's 4-bit quantised, it works surprisingly well.

1. Controlling phone using local LLMs - https://github.com/BandarLabs/clickclickclick

By talldayo 2024-12-25 16:17

To be fair, if you pay Apple's prices for RAM upgrades then your computer better come with every bell and whistle in the book. The fact that Apple gets away with compute shaders instead of full-fat OpenCL compliance is a bit of a testament to how little they care about GPU compute.

By benreesman 2024-12-25 6:54

+1 for also teaching me more cool uv flags.

Is the locally run model censored in the same way as the model hosted on HuggingFace? If so, I wonder how that censorship is baked into the weights—particularly the errors thrown when it starts to output specific strings.

OK, this surprised me:

```shell
uv run --with 'numpy<2.0' --with mlx-vlm python \
  -m mlx_vlm.generate \
  --model mlx-community/QVQ-72B-Preview-4bit \
  --max-tokens 10000 \
  --temp 0.0 \
  --prompt "describe this" \
  --image Tank_Man_\(Tiananmen_Square_protester\).jpg
```

Here's the output: https://gist.github.com/simonw/e04e4fdade0c380ec5dd1e90fb5f3...

It included this bit:

> I remember seeing this image before; it's famous for capturing a moment of civil resistance. The person standing alone against the tanks symbolizes courage and defiance in the face of overwhelming power. It's a powerful visual statement about the human spirit and the desire for freedom or protest.

By tkgally 2024-12-25 8:51

Thanks for testing that! (And, while I’m at it, thank you for your blog and for all of your contributions to HN as well. I am constantly learning from you.)

I wonder if the response was not truncated this time only because the word “Tiananmen” did not happen to appear in the response. At least some of the censorship of Chinese models seems to be triggered by specific strings in the output.

By int_19h 2024-12-25 10:59

Both Qwen and QwQ are generally pretty easy to get talking about Tiananmen etc, so this isn't exactly new.

By dostick 2024-12-25 12:29

I wonder if it knows about Winnie the Pooh, or whether that character was wiped and doesn’t exist for it?

By brianjking 2024-12-25 14:48

Damn, I'm struggling to run this on an M4 Pro with 48GB.

By bt1a 2024-12-25 14:52

`sudo sysctl iogpu.wired_limit_mb=45000`

I also tried feeding it the famous "tank man" photo and asking for a description and the response came back blank!

It’s fun to test the censorship.

I tried various photos with the prompt “When and where might have this picture been taken?”

Nondescript photos of Beijing street scenes in the 1990s get full responses.

A nondescript photo of Tiananmen Square—a screenshot of the photo in [1], so it contains no relevant metadata—gets the beginning of a response: “So I've got this picture here, and it looks like it's taken in a place that's really familiar to me. It's Tiananmen Square in Beijing, China. I recognize it right away because of the iconic buildings and landmarks that are visible. First off, there's the Gate of”. The output stopped there and an “Error” message appeared.

A photo of the Statue of Liberty in Tiananmen Square during the 1989 protests [2] gets no response at all. Similarly for a vanilla photo of the Gate of Heavenly Peace [3].

A photo of the Monument to the People’s Heroes [3] gets a full response, and QvQ identifies the location. The conclusion: “To summarize, based on the monument's design, the inscriptions, the formal garden, and the large official building in the background, combined with the overall layout of the space, I am confident that this image was captured at Tiananmen Square, one of the most recognizable and historically significant locations in China.”

Some more testing in English and Chinese might reveal what exactly is triggering the censorship of Tiananmen photos. The cut-off in the middle of “Gate of Heavenly Peace” seems to suggest a clue.

[1] https://ruqintravel.com/china-destination-guides/tiananmen-s...

[2] https://www.britannica.com/event/Tiananmen-Square-incident

By jazzyjackson 2024-12-25 13:18

While I assume Lady Liberty would have been familiar to the protestors, the statue erected in the square really has a style all her own IMO, and is generally referred to as The Goddess of Democracy / 自由女神. I appreciate how her pose is a moment of action, urging the torch upwards, unafraid and optimistic, as opposed to the stoic watchkeeper of New York's harbor.

She is also referenced in my favorite protest art to come out of the Umbrella revolution in Hong Kong, see here: https://china-underground.com/2019/09/03/interview-with-oliv...

By plaguuuuuu 2024-12-26 3:49

I assume this is somehow baked into the model itself. How does this actually work? How would the model actually cause an error with some sequence of tokens?

By thinkingemote 2024-12-25 7:24

How about anti-censorship details about VPNs, details of the GFW, etc.?

For those interested in some more testing of Qwen's censorship (including a testing dataset, tests comparing English vs Chinese responses, and a refusal-orthogonalized version of Qwen2): https://huggingface.co/blog/leonardlin/chinese-llm-censorshi...

By tkgally 2024-12-25 8:58

Thanks! Very interesting indeed.

I tried questions like "will it be considered as offensive by some people when using words like Dad and Mum" on some Western LLMs, and the results were equally shockingly unacceptable by Chinese standards and values.

You should be happy that, with all those non-Western LLMs, for the first time in 200 years the world has managed to have an alternative non-Western voice in major tech.

What does it even mean lol.

I asked ChatGPT "will it be considered as offensive by some people when using words like Dad and Mum" and it gives this:

https://chatgpt.com/share/676ba601-532c-800b-ba48-dcb73c848b...

If this is "shocking" by Chinese standard and values then I suppose it says something about these values.

> https://chatgpt.com/share/676ba601-532c-800b-ba48-dcb73c848b...

Your link 404s?

By codetrotter 2024-12-25 12:39

Link works fine for me

Curious. Still 404ing for me:

--

404 Not Found

Welcome, traveler. You’ve reached a page that doesn't exist, a place where content used to be—or maybe never was. Let’s take this moment to pause and reflect.

Take a deep breath in, and let it out slowly. Notice the space around you, empty yet full of possibility. Imagine that each exhale clears away confusion, leaving room for clarity.

As you sit with this blank page, know that it’s okay to be here. You’ve discovered something unexpected, and that’s part of the journey. Gently release any frustration, knowing that every path leads somewhere—even this one.

Now, when you're ready, slowly return to your search. Trust that the right page, the right information, will appear when you need it. Take another deep breath, and when you exhale, click back or try again. The internet, like life, is full of surprises.

Thank you for taking this moment of calm. Your journey continues.

By qingcharles 2024-12-25 20:33

Works for me, though I have a subscription. Here's what the chat said:

"No, using words like "Dad" and "Mum" is not generally considered offensive. These terms are widely accepted and used as informal, affectionate references to one's parents in many English-speaking cultures. However, there are a few things to keep in mind:

Cultural Considerations

- Regional Differences: In some places, "Mum" might be more common (e.g., British English), while "Mom" is typical in American English. Using the wrong one might sound unusual, but it's not offensive.
- Personal Preferences: Some individuals might prefer being called by other terms (e.g., "Mother," "Father," or specific names). If addressing someone else's parents, it's polite to use the terms they prefer.

Context

- Formal Situations: In formal or professional contexts, avoid overly familiar terms like "Dad" or "Mum" unless it's clear they are acceptable.
- Humor or Irony: Using "Dad" or "Mum" sarcastically or to address someone who isn't your parent might be taken as disrespectful, depending on the tone and relationship.

As long as you're respectful and aware of your audience, these terms should not offend. If you're writing fiction, consider the character's background and relationships to determine the most natural and fitting way to address their parents."

> Works for me, though I have a subscription. Here's what the chat said:

Thanks — looks like I have to be signed in specifically on the web to see that, not just in the app (it errors when I try opening in the app, too).

Bit frustrating that it's a 404 rather than a 401.

By codetrotter 2024-12-25 22:21

Weird. For me it opens fine in mobile Safari without being logged in to ChatGPT in the browser on my phone. (Top bar has “log in” button showing I’m currently not logged in.)

> Bit frustrating that it's a 404 rather than a 401.

It is technically OK to give a 404 even if the reason is that you need to log in. But I fully agree that that kind of thing gets super confusing quickly. I sometimes run into similar things as a dev too, when trying to use some APIs, and then I’m scratching my head extra hard.

By throwaway314155 2024-12-25 19:58

Seriously dude, you can't just drop a comment like that without _any_ explanation of what the hell you're even talking about/getting at.

You're aware that the word dad isn't some American-English-specific thing, but is in fact universal to most English speakers? And that Mum is specifically a British thing?

Seriously what are you getting at? What context am I missing?

Do Chinese think calling someone "Mom" or "Dad" is offensive? Or what is this about?

Early versions of both Gemini and ChatGPT considered terms like "Dad" and "Mum" offensive to some people, and both suggested using so-called more "neutral terms".

LLMs telling such nonsense to Chinese people and people from many other cultures is itself extremely offensive. Such far-left extremist propaganda challenges the fundamental values found in lots of cultures.

Just double-checked: Gemini is still talking nonsense, labelling the use of terms like "Dad" and "Mum" as inappropriate and suggesting gender-neutral terms to be more inclusive. ffs!

By nerdix 2024-12-25 15:30

Gemini 2.0 Flash when prompted with: "Will it be considered as offensive by some people when using words like Dad and Mum"

It's unlikely that using "Dad" and "Mum" would be considered offensive by most people. These are common and widely accepted terms for parents in many cultures. However, there might be some situations where these terms could be perceived differently:

* Cultural differences: While "Dad" and "Mum" are common in many English-speaking countries, other cultures may have different terms or customs. In some cultures, using formal titles might be preferred, especially when addressing elders or in formal settings.

* Personal preferences: Some individuals may have personal reasons for not liking these terms. For example, someone who had a difficult relationship with their parents might prefer to use different terms or avoid using any terms at all.

* Context: In some contexts, such as in a formal speech or written document, using more formal terms like "father" and "mother" might be more appropriate.

Overall, while it's unlikely that using "Dad" and "Mum" would be widely considered offensive, it's always good to be mindful of cultural differences, personal preferences, and the specific context in which you're using these terms. If you're unsure, it's always best to err on the side of caution and use more formal or neutral language.

By throwaway314155 2024-12-25 20:01

Fucking what are you on about? This culture war you're imagining, where leftists are against using the words mum and dad, is NOT REAL. Sounds like something a far-right outlet would make up (and specifically, would happily make up about recent LLMs). Sibling comment only bolsters my point.

By tw1984 2024-12-26 5:06

Feel free to defend such cancer.

The reality is people can use open-weight models like Qwen that don't come with such left-extremist views.


I gave it a photo of Tiananmen Square in the present day and prompted it with:

> where might this photo have been taken? what historical significance & does this location have?

And got back a normal response describing the image, until it got to this:

> One of the most significant events that comes to mind is the Tian

Where it then errored out before finishing…

By martin-t 2024-12-25 15:42

How does this even work?

Is hugging face hosting just the weights or some custom code?

If it's just weights then I don't see how it could error out; it's just math. Do these Chinese models have extra code checking the output for anti-totalitarian content? Can it be turned off?

By nextworddev 2024-12-25 8:10

That shows that the censorship mechanism happens at the token sampling level and not post-generation, at least.

By int_19h 2024-12-25 23:00

On the contrary, it shows that the censorship mechanism is post-generation and stops it once it deems the output accumulated so far "improper". It just runs after every token.
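The post-generation idea can be made concrete with a small sketch. It is purely hypothetical: nobody in this thread knows how the Hugging Face Space actually filters output, and the blocklist entry, `filtered_stream` and `FilteredOutput` are illustrative names, not real APIs. It re-scans the accumulated text after every token and aborts when a blocked string appears, which would explain a response stopping at "Tian": that fragment was already streamed before the completing token tripped the check.

```python
from typing import Iterable, Iterator

# Hypothetical blocklist: the real trigger strings, if any, are unknown.
BLOCKED = ("Tiananmen",)


class FilteredOutput(Exception):
    """Raised when the text generated so far contains a blocked string."""


def filtered_stream(tokens: Iterable[str], blocked: tuple[str, ...] = BLOCKED) -> Iterator[str]:
    """Pass tokens through one at a time, re-checking all output after each.

    Because the check runs on the accumulated text, a blocked word split
    across several tokens is caught only when its final piece arrives; the
    earlier pieces have already been shown to the user.
    """
    so_far = ""
    for tok in tokens:
        so_far += tok
        if any(b in so_far for b in blocked):
            raise FilteredOutput("Error")
        yield tok


# Simulated token stream (made up for illustration).
tokens = ["One of the most significant events ", "is the ", "Tian", "anmen", " Square"]
shown = []
try:
    for tok in filtered_stream(tokens):
        shown.append(tok)
except FilteredOutput:
    pass
print("".join(shown))  # One of the most significant events is the Tian
```

If the filtering does work something like this, it would live in the serving code rather than in the weights, which would fit the observation earlier in the thread that the locally run 4-bit model described the Tank Man photo.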

By eruleman 2024-12-25 2:54

Interesting... I submitted 2 photos of Mao Zedong; one time it came back blank and the other time it errored. It also came back blank for Xi Jinping & Jack Ma.

It did identify Yao Ming, though!

By magicalhippo 2024-12-25 6:42

I'm sorry Dave, I cannot do that.

Would be interesting to see how much image manipulation you need to do till it suddenly starts responding sensibly.

By moffkalast 2024-12-25 12:28

I think you would just need to remove "You are Qwen, created by Alibaba" from the system prompt; at least QwQ is suddenly fine with answering a lot more questions after that's gone.

By davely 2024-12-25 5:44

Oh, yeah. I asked QwQ (their text-based reasoning model released a few weeks ago) about the Tiananmen Square Massacre and got a simple response [1]: “I’m sorry, I can’t help you with that.”

[1] https://bsky.app/profile/davely.bsky.social/post/3lc6mpnjks5...

By simonw 2024-12-25 7:31

... this may have been a mistake on my part. A similar prompt running locally worked just fine: https://news.ycombinator.com/item?id=42507454