Confused by LLMs, RAG, & AI Agents? We break down the spectrum of AI system design with a familiar resume-screening example to show the leap in capabilities (and complexity) at each level.

AI agents are a hot topic, but not every AI system needs to be one.

While agents promise autonomy and decision-making power, simpler, more cost-effective solutions better serve many real-world use cases. The key lies in choosing the right architecture for the problem at hand.

In this post, we'll explore recent developments in Large Language Models (LLMs) and discuss key concepts of AI systems.

We've worked with LLMs across projects of varying complexity, from zero-shot prompting to chain-of-thought reasoning, from RAG-based architectures to sophisticated workflows and autonomous agents.

This is an emerging field with evolving terminology. The boundaries between different concepts are still being defined, and classifications remain fluid. As the field progresses, new frameworks and practices emerge to build more reliable AI systems.

To demonstrate these different systems, we'll walk through a familiar use case – a resume-screening application – to reveal the unexpected leaps in capability (and complexity) at each level.

Pure LLM

A pure LLM is essentially a lossy compression of the internet, a snapshot of knowledge from its training data. It excels at tasks involving this stored knowledge: summarizing novels, writing essays about global warming, explaining special relativity to a 5-year-old, or composing haikus.

However, without additional capabilities, an LLM cannot provide real-time information like the current temperature in NYC. This distinguishes pure LLMs from chat applications like ChatGPT, which enhance their core LLM with real-time search and additional tools.

That said, not all enhancements require external context. Several prompting techniques, including in-context learning and few-shot learning, help LLMs tackle specific problems without the need for context retrieval.

Example:

- To check whether a resume is a good fit for a job description, an LLM with one-shot prompting and in-context learning can classify it as Passed or Failed.

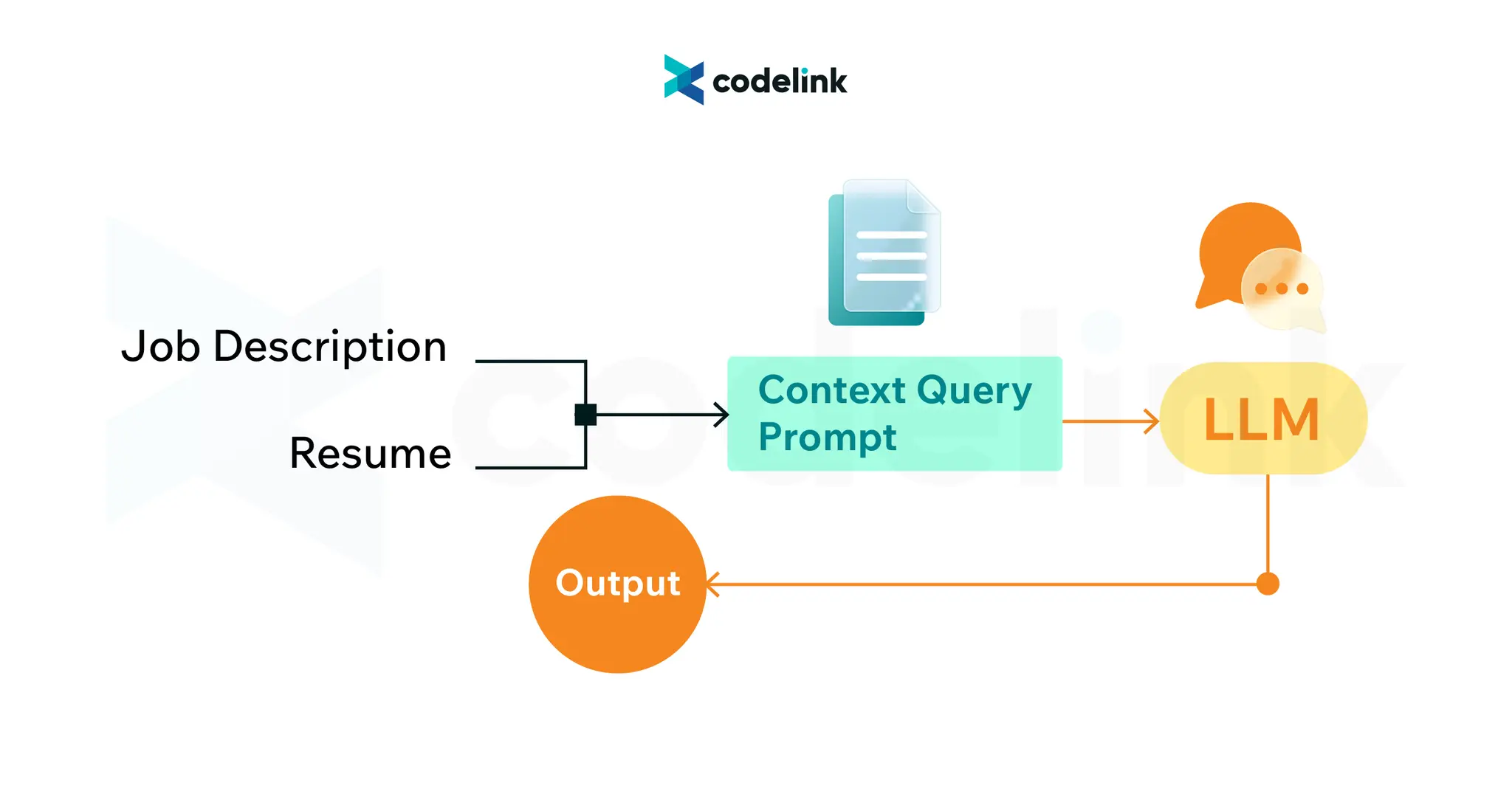
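A minimal sketch of the one-shot setup described above, assuming a plain-text prompt and a model that replies in free text. The example job/resume pair and the helpers `build_one_shot_prompt` and `parse_label` are hypothetical, and no specific LLM API is assumed, so the actual model call is left out.

```python
# Hypothetical solved example that anchors the model's output format (one-shot).
EXAMPLE = {
    "job": "Backend engineer: 3+ years of Python, PostgreSQL experience.",
    "resume": "5 years building Django services on PostgreSQL.",
    "label": "Passed",
}

ALLOWED_LABELS = ("Passed", "Failed")


def build_one_shot_prompt(job: str, resume: str) -> str:
    """Instruction + one solved example + the new case, ending where the model should answer."""
    return (
        "Classify whether the resume fits the job description. "
        "Answer with exactly one word: Passed or Failed.\n\n"
        f"Job: {EXAMPLE['job']}\nResume: {EXAMPLE['resume']}\n"
        f"Answer: {EXAMPLE['label']}\n\n"
        f"Job: {job}\nResume: {resume}\nAnswer:"
    )


def parse_label(reply: str) -> str:
    """Normalize the model's free-text reply to one of the two labels, or fail loudly."""
    words = reply.split()
    first = words[0].strip(".,:;!").capitalize() if words else ""
    if first not in ALLOWED_LABELS:
        raise ValueError(f"unexpected model reply: {reply!r}")
    return first
```

Constraining the answer to two labels and parsing it strictly keeps the model's free-form output usable by ordinary downstream code.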

RAG (Retrieval Augmented Generation)

Retrieval methods enhance LLMs by providing relevant context, making them more current, precise, and practical. You can grant LLMs access to internal data for processing and manipulation. This context allows the LLM to extract information, create summaries, and generate responses. RAG can also incorporate real-time information through the latest data retrieval.

Example:

- The resume screening application can be improved by retrieving internal company data, such as engineering playbooks, policies, and past resumes, to enrich the context and make better classification decisions.

- Retrieval typically employs tools like vectorization, vector databases, and semantic search.
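To make the retrieval step concrete, here is a toy sketch. It swaps in bag-of-words counts and cosine similarity as a stand-in for learned embeddings and a vector database; the function names and any document texts are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Semantic-search stand-in: rank documents by similarity to the query, keep the top k."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def augment_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Prepend the retrieved company context to the task the LLM will see."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Company context:\n{context}\n\nTask: {query}"
```

Replacing `embed` with a real embedding model and the list with a vector store keeps the same shape: embed, rank, take the top k, prepend to the prompt.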

Tool Use & AI Workflow

LLMs can automate business processes by following well-defined paths. They're most effective for consistent, well-structured tasks.

Tool use enables workflow automation. By connecting to APIs, whether for calculators, calendars, email services, or search engines, LLMs can leverage reliable external utilities instead of relying on their internal, non-deterministic capabilities.

Example:

- An AI workflow can connect to the hiring portal to fetch resumes and job descriptions → Evaluate qualifications based on experience, education, and skills → Send appropriate email responses (rejection or interview invitation).

- For this resume screening workflow, the LLM requires access to the database, email API, and calendar API. It follows predefined steps to automate the process programmatically.
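The fetch → evaluate → email path can be sketched as plain code in which the LLM is only one step. `Application`, `classify`, and `send_email` are hypothetical stand-ins for the hiring-portal data, an LLM call, and an email API wrapper.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Application:
    email: str
    resume: str
    job_description: str


def run_screening_workflow(
    applications: list[Application],
    classify: Callable[[str, str], str],     # hypothetical LLM step: returns "Passed"/"Failed"
    send_email: Callable[[str, str], None],  # hypothetical email API wrapper: (to, template)
) -> dict[str, str]:
    """Fixed path: fetch -> evaluate -> email. The code, not the LLM, decides the order."""
    decisions = {}
    for app in applications:
        label = classify(app.resume, app.job_description)
        template = "interview_invitation" if label == "Passed" else "rejection"
        send_email(app.email, template)
        decisions[app.email] = label
    return decisions
```

Because the control flow lives in code, every run takes the same path; only the classification step is non-deterministic.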

AI Agent

AI Agents are systems that reason and make decisions independently. They break down tasks into steps, use external tools as needed, evaluate results, and determine their next action: whether to store results, request human input, or proceed to the next step.

This represents another layer of abstraction above tool use & AI workflow, automating both planning and decision-making.

While AI workflows require explicit user triggers (like button clicks) and follow programmatically defined paths, AI Agents can initiate workflows independently and determine their sequence and combination dynamically.

Example:

- An AI Agent can manage the entire recruitment process, including parsing CVs, coordinating availability via chat or email, scheduling interviews, and handling schedule changes.

- This comprehensive task requires the LLM to access databases, email and calendar APIs, plus chat and notification systems.
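The difference from the workflow described earlier is who decides the next step. In this sketch the decision comes from `choose_action`, which in a real agent would be an LLM returning a structured tool call; here `scripted_policy` is a hypothetical stand-in so the loop can run, and the tool names are illustrative.

```python
from typing import Callable

Tool = Callable[[dict], str]
Action = dict  # e.g. {"tool": "check_calendar", "args": {...}} or {"tool": "finish"}


def run_agent(goal: str,
              choose_action: Callable[[str, list], Action],
              tools: dict[str, Tool],
              max_steps: int = 10) -> list:
    """Agent loop: the model picks each next tool from the goal and what it has seen so far."""
    history = []  # (tool_name, args, observation) records fed back to the model
    for _ in range(max_steps):  # hard step limit as a basic guardrail
        action = choose_action(goal, history)
        if action["tool"] == "finish":
            break
        args = action.get("args", {})
        observation = tools[action["tool"]](args)
        history.append((action["tool"], args, observation))
    return history


def scripted_policy(goal: str, history: list) -> Action:
    """Hypothetical stand-in for an LLM: tries another slot if the first one is taken."""
    if not history:
        return {"tool": "parse_cv", "args": {"candidate": "c-42"}}
    last_tool, last_args, last_obs = history[-1]
    if last_tool == "parse_cv":
        return {"tool": "check_calendar", "args": {"slot": "Tue 10:00"}}
    if last_tool == "check_calendar" and last_obs == "busy":
        return {"tool": "check_calendar", "args": {"slot": "Wed 14:00"}}
    if last_tool == "check_calendar":
        return {"tool": "send_invite", "args": {"slot": last_args["slot"]}}
    return {"tool": "finish"}
```

Unlike the fixed workflow, the number and order of tool calls here depend on what each tool returns; the `max_steps` cap is one simple guard against a loop that never finishes.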

Key takeaways

1. Not every system requires an AI agent

Start with simple, composable patterns and add complexity as needed. For some systems, retrieval alone suffices. In our resume screening example, a straightforward workflow works well when the criteria and actions are clear. Consider an Agent approach only when greater autonomy is needed to reduce human intervention.

2. Focus on reliability over capability

The non-deterministic nature of LLMs makes building dependable systems challenging. While creating proofs of concept is quick, scaling to production often reveals complications. Begin with a sandbox environment, implement consistent testing methods, and establish guardrails for reliability.
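One simple guardrail of the kind mentioned above: accept only outputs from an allowed set, retry a bounded number of times, and hand the case to a human when the model keeps misbehaving. `call_model` is a hypothetical function wrapping whatever LLM API is in use.

```python
from typing import Callable, Optional

ALLOWED = {"Passed", "Failed"}


def guarded_classify(call_model: Callable[[str], str],  # hypothetical LLM wrapper
                     prompt: str,
                     retries: int = 2) -> Optional[str]:
    """Return a valid label, or None to signal 'route to human review'."""
    for _ in range(retries + 1):  # first attempt plus `retries` retries
        reply = call_model(prompt).strip()
        if reply in ALLOWED:
            return reply
    return None
```

Running the same guarded function against a fixed set of test inputs in a sandbox gives a repeatable way to see how often the fallback path is taken before going to production.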


Comments

> AI Agents can initiate workflows independently and determine their sequence and combination dynamically

I'm confused.

A workflow has hardcoded branching paths; explicit if conditions and instructions on how to behave if true.

So for an agent, instead of specifying explicit if conditions, you specify outcomes and you leave the LLM to figure out what if conditions apply and how to deal with them?

In the case of this resume screening application, would I just provide the ability to make API calls and then add this to the prompt: "Decide what a good fit would be."?

Are there any serious applications built this way? Or am I missing something?

> A workflow has hardcoded branching paths; explicit if conditions and instructions on how to behave if true.

That is very much true of the systems most of us have built.

But you do not have to do this with an LLM; in fact, the LLM may decide it will not follow your explicit conditions and instructions regardless of how hard you try.

That is why LLMs are used to review the output of LLMs to ensure they follow the core goals you originally gave them.

For example, you might ask an LLM to lay out how to cook a dish. Then use a second LLM to review if the first LLM followed the goals.

This is one of the things tools like DSPy try to do: you remove the prompt and instead predicate things with high-level concepts like "input" and "output" and then reward/scoring functions (which might be a mix of LLM and human-coded functions) that assess if the output is correct given that input.
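The generate-then-review idea can be sketched as a best-of-n loop with scoring functions. This is a generic illustration, not DSPy's API; `generate`, the metrics, and the threshold are hypothetical. In practice one metric might itself be an LLM judge, which inherits the judge's own fallibility.

```python
from typing import Callable, Optional

# Generic sketch, not DSPy's API: a metric maps (task, output) to a score in [0, 1].
Metric = Callable[[str, str], float]


def best_of_n(generate: Callable[[str], str], metrics: list[Metric], task: str,
              n: int = 3, threshold: float = 0.8) -> Optional[str]:
    """Sample n outputs, score each by averaging the metrics, keep the best if it is good enough."""
    best, best_score = None, -1.0
    for _ in range(n):
        candidate = generate(task)
        score = sum(m(task, candidate) for m in metrics) / len(metrics)
        if score > best_score:
            best, best_score = candidate, score
    return best if best_score >= threshold else None


def mentions_preheat(task: str, output: str) -> float:
    """Hypothetical hand-coded check: a baking recipe should say to preheat the oven."""
    return 1.0 if "preheat" in output.lower() else 0.0
```

Returning `None` when nothing clears the threshold leaves room for a fallback such as human review, rather than silently shipping the least-bad output.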

What happens when the LLM responsible for checking decides to ignore your explicit conditions?

By vrighter 2025-06-23 3:54

You bury your head in the sand and pretend the 2nd LLM magically lacks the limitations of the first.

Which begs the question... why not use the 2nd LLM in the first place if it is the one that actually "knows" the answer?

AI code generation tools work like this.

Let me reword your phrasing slightly to make an illustrative point:

> so for an employee, instead of specifying explicit if conditions, you specify outcomes and you leave the human to figure out what if conditions apply and how to deal with them?

> Are there any serious applications built this way?

We have managed to build robust, reliable systems on top of fallible, mistake-riddled, hallucinating, fabricating, egotistical, hormonal humans. Surely we can handle a little non-determinism in our computer programs? :)

In all seriousness, having spent the last few years employed in this world, I feel that LLM non-determinism is an engineering problem just like the non-determinism of making an HTTP request. It’s not one we have prior art on dealing with in this field admittedly, but that’s what is so exciting about it.

Yes, I see your analogy between fallible humans and fallible AI.

It's not the non-determinism that was bothering me, it was the decision making capability. I didn't understand what kinds of decisions I can rely on an LLM to make.

For example, with the resume screening application from the post, where would I draw the line between the agent and the human?

- If I gave the AI agent access to HR data and employee communications, would it be able to decide when to create a job description?

- And design the job description itself?

- And email an opening round of questions for the candidate to get a better sense of the candidates who apply?

Do I treat an AI agent just like I would a human new to the job? Keep working on it until I can trust it to make domain-specific decisions?

The honest answer is that we are still figuring out where to draw the line between an agent and a human, because that line is constantly shifting.

Given your example of the resume screening application from the post and today's capabilities, I would say:

1) Should agents decide when to create a job post? Not without human oversight; proactive suggestion to a human is great.

2) Should agents design the job description itself? Yes, with the understanding that an experienced human, namely the hiring manager, will review and approve it as well.

3) Should an agent email an opening round of questions to the candidates? Definitely allowed with oversight, and potentially even without human approval depending on how well it does.

It's true that to improve all 3 of these it would take a lot of work with respect to building out the tasks, evaluations, and flows/tools/tuned models, etc. But, you can also see how much this empowers a single individual in their productivity. Imagine being one recruiter or HR employee with all of these agents completing these tasks for you effectively.

EDIT: Adding that this pattern of "agent does a bunch of stuff and asks for human review/approval" is, I think, one of the fundamental workflows we will have to adapt in dealing productively with non-determinism.

This applies to an AI radiologist asking a human to approve their suggested diagnosis, an AI trader asking a human to approve a trade with details and reasoning, etc. Just like small-scale AI like Copilot asking you to approve a line/several lines, or tools like Devin asking you to approve a PR.
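The "agent proposes, human approves" pattern can be sketched as a gate between a proposal and its side effect. `Proposal`, `approve`, and `execute` are hypothetical; `approve` could be backed by a review queue, a chat button, or a PR review.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Proposal:
    action: str      # hypothetical action name, e.g. "email_screening_questions"
    details: str     # what exactly would be sent or changed
    reasoning: str   # the agent's explanation, shown to the reviewer


def execute_with_approval(proposal: Proposal,
                          approve: Callable[[Proposal], bool],
                          execute: Callable[[Proposal], str]) -> str:
    """The agent only proposes; nothing with side effects runs unless approve() returns True."""
    if not approve(proposal):
        return f"rejected: {proposal.action}"
    return execute(proposal)
```

Relaxing oversight as trust grows then amounts to swapping `approve` for an automatic policy on low-risk actions while keeping human review for the rest.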

By nilirl 2025-06-20 4:29

I can see value in it, but I'm unsure how much more value it provides compared to building software with my own heuristics for solving these problems.

The LLM seems incredible at generating well-formatted text when I need it, like for the job description and emails.

But hardcoding which signals matter for a decision, and what to do about them, seems faster, more understandable, and maybe not that far in effectiveness from a well-trained AI agent.

> would it be able to decide when to create a job description?

If you can encode in text how you/your company makes that decision as a human, I don't see why not. Personally, though, there is a lot of subjectivity (for better or worse) in hiring processes, so I'm not sure I'd want a probabilistic rules engine to make those sorts of calls.

My current system prompt for coding with LLMs is basically my own personal rules for programming, written down. Any time I got results I didn't like, I wrote down why I didn't like them and codified that in my reusable system prompt; after that, it doesn't make those (IMO) mistakes anymore.

I don't think I could realistically get an LLM to do something I don't understand the process of myself, and once you grok the process, you can understand if using an LLM here makes sense or not.

> Do I treat an AI agent just like I would a human new to the job?

No, you treat it as something much dumber. You can generally rely on some sort of "common sense" in a human, built up during their time on this planet. You cannot do that with LLMs: while they're superhuman in some ways, they're still way "dumber" in others.

For example, a human new to a job would pick things up autonomously, while an LLM does not. You need to pay attention to what you need to "teach" the LLM by changing what Karpathy calls the "programming" of the LLM, which would be the prompts. Anything you forget to tell it, the LLM will handle however it likes; it only follows exactly what you say. You can usually tell a human "don't do that in the future" and they'll avoid it in the right context. You can scream at an LLM for 10 hours about how it's doing something wrong, but unless you update the programming, it will keep making that mistake forever. And if you add a correction but then reuse the prompt in other contexts, the LLM won't suddenly understand that the correction doesn't make sense there.

Just an example: I wanted some quick and dirty throwaway code for generating a graph, and in my prompt I mixed up the X and Y axes, and of course got a function that didn't work as expected. If a human had been doing it, it would have been quite obvious that I didn't want time on the Y axis and value on the X axis, because the graph wouldn't make any sense, but the LLM happily complied.

So, if the humans have to model the task, the domain, and the process to currently solve it, why not just write the code to do so?

Is the main benefit that we can do all of this in natural language?

By cyberax 2025-06-19 16:30

A lot of the time it's faster to ask an LLM. Treat it as autocomplete on steroids.

>Is the main benefit that we can do all of this in natural language?

You hit the nail on the head. That is pretty much the breakthrough LLMs have brought: they allow a class of non-programmer developers to do tasks that were once only for developers and programmers. It seems like a great fit for CEOs and management as well.

By candiddevmike 2025-06-19 18:03

Natural language cannot describe these things as concretely, repeatedly, or verifiably as code.

By neutronicus 2025-06-20 14:11

Well.

Generally whatever code is produced fails utterly to describe, at all, let alone concretely / repeatedly / verifiably, "what the exec had in mind", because the exec devotes all of 10 seconds of thought to communicating it.

The actual breakthrough might be social, in that "vibe coding" is exciting enough, and its schedule flexible enough, that it can actually coax high-level decision makers into putting real thought into what they want to happen.

By nilirl 2025-06-20 4:23

I agree with you, but I think the argument is that they can both exist together.

Code to model what is easy to model with code, and natural language for things that are fuzzy or cumbersome in code.


One of the key advantages of computers has, historically, been their ability to compute and remember things accurately. What value is there in backing out of these in favour of LLM-based computation?

They're able to handle large variance in their input, right out of the box.

I think the appeal is code that handles changes in the world without having to change itself.

By bluefirebrand 2025-06-19 20:45

That's not very useful though, unless it is predictable and repeatable?

By nilirl 2025-06-20 4:21

I think that's the argument from some of the other commenters: making it predictable and repeatable is the engineering task at hand.

Some ways they're approaching it:

- Reduce the requirement from 100% predictable to something lower yet acceptable.

- Continuously add layers of checks and balances until we consistently hit an acceptable ratio of success:failure

- Wait and see if this is all eventually cost-efficient
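Measuring "an acceptable ratio of success:failure" needs a labeled test set and a repeatable harness. A minimal sketch: `system` is a hypothetical stand-in for the whole LLM pipeline, and the test cases and thresholds are illustrative. Each case can be repeated because a non-deterministic system may pass once and fail the next time.

```python
from typing import Callable


def success_rate(system: Callable[[str], str],  # hypothetical stand-in for the full pipeline
                 cases: list[tuple[str, str]],
                 runs: int = 1) -> float:
    """Fraction of (input, expected) checks that pass, repeating each case `runs` times."""
    if not cases or runs < 1:
        raise ValueError("need at least one case and one run")
    hits = sum(system(inp) == expected for inp, expected in cases for _ in range(runs))
    return hits / (len(cases) * runs)


def meets_bar(system: Callable[[str], str], cases: list[tuple[str, str]],
              min_rate: float = 0.95, runs: int = 3) -> bool:
    """True when the measured success rate reaches the chosen acceptance threshold."""
    return success_rate(system, cases, runs) >= min_rate
```

Each new layer of checks can then be judged by whether it moves this number, rather than by a handful of spot checks.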

Not all applications need to be built this way. But the most serious apps built this way would be deep research.

Recent article from Anthropic - https://www.anthropic.com/engineering/built-multi-agent-rese...

By nilirl 2025-06-19 13:37

Thanks for the link, it taught me a lot.

From what I gather, you can build an agent for a task as long as:

- you trust the decision-making of an LLM for the type of decision required; decisions framed as some kind of evaluation of text feel right.

- and if the penalty for being wrong is acceptable.

Just to go back to the resume screening application, you'd build an agent if:

- you asked the LLM to make an evaluation based on the text content of the resume, any conversation with the applicant, and the declared job requirement.

- you had a high enough volume of resumes where false negatives won't be too painful.

It seems like framing problems as search problems helps model these systems effectively. They're not yet capable of design, i.e., being responsible for coming up with the job requirement itself.

An AI company doing it is the corporate equivalent of "works on my machine".

Can you give us an example of a company not involved in AI research that does it?

There are plenty of companies using these sorts of agentic systems already. In my case, we wrote an LLM that knows how to fetch data from a bunch of sources (logs, analytics, etc.) and root-causes incidents. Not all sources make sense for all incidents, most queries have crazy high cardinality, and the data correlation isn’t always possible. LLMs being pattern matching machines, this allows them to determine what to fetch, then it pattern matches a cause based on other tools it has access to (e.g., runbooks, Google searches).

I built incident detection systems in the past, and this was orders of magnitude easier and more generalizable for new kinds of issues. It still gives meaningless/obvious reasoning frequently, but it’s far, far better than the alternatives…

LLM _automation_. I'm sure you could understand the original comment just fine.

I didn't. This also confused me:

> LLMs being pattern matching machines

LLMs are _not_ pattern matching. I'm not being pedantic. It is really hard and unreliable to approach them with a pattern matching mindset.

By alganet 2025-06-19 21:27

I stand by it.

You can definitely take a base LLM and then train it on existing, prepared root cause analysis data. But that's very hard, expensive, and might not work, leaving the model brittle. Also, that's not what an "AI Agent" is.

You could also make a workflow that prepares the data, feeds it into a regular model, then asks prepared questions about that data. That's inference, not pattern matching. There's no way an LLM will be able to identify the root cause reliably. You'll probably need a human to evaluate the output at some point.

What you mentioned doesn't look like either one of these.

By dist-epoch 2025-06-19 15:20

Resume screening is a clear workflow case: analyze resume -> rank against others -> make decision -> send next phase/rejection email.

An agent is like Claude Code, where you say to it "fix this bug", and it will choose a sequence of various actions - change code, run tests, run linter, change code again, do a git commit, ask user for clarification, change code again.

By DebtDeflation 2025-06-19 22:36

Almost every enterprise use case is a clear workflow use case EXCEPT coding/debugging and research (e.g., iterative web search and report compilation). I saw a YT video the other day of someone building an AI Agent to take online orders for guitars: query the catalog and present options to the user, take the configured order from the user, check the inventory system to make sure it's available, and then place the order in the order system. There was absolutely no reason to build this as an Agent burning an ungodly number of tokens with verbose prompts running in a loop, only to have it generate a JSON using the exact format specified to place the final order, when the same thing could have been done with a few dozen lines of code making a few API calls. If you wanted to do it with a conversational/chat interface, that could easily be done with an intent classifier, slot filling, and orchestration.
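For comparison, the intent classifier + slot filling + orchestration alternative might look like this deterministic sketch. The keywords, guitar body styles, colors, and slot names are hypothetical; the inventory check and order placement would be ordinary API calls once `next_question` returns `None`.

```python
import re
from typing import Optional

REQUIRED_SLOTS = ("body", "color", "quantity")  # hypothetical order fields


def classify_intent(utterance: str) -> str:
    """Keyword intent classifier; a trained classifier or a single LLM call could replace it."""
    text = utterance.lower()
    if any(w in text for w in ("buy", "order")):
        return "place_order"
    if any(w in text for w in ("show", "catalog", "options")):
        return "browse_catalog"
    return "unknown"


def fill_slots(utterance: str, slots: dict) -> dict:
    """Extract slot values with simple patterns, keeping values filled on earlier turns."""
    text = utterance.lower()
    filled = dict(slots)
    # Hypothetical catalog vocabulary.
    if m := re.search(r"\b(dreadnought|jumbo|parlor)\b", text):
        filled["body"] = m.group(1)
    if m := re.search(r"\b(natural|black|sunburst)\b", text):
        filled["color"] = m.group(1)
    if m := re.search(r"\b(\d+)\b", text):
        filled["quantity"] = int(m.group(1))
    return filled


def next_question(slots: dict) -> Optional[str]:
    """Orchestration: ask for the first missing slot; None means the order is complete."""
    for name in REQUIRED_SLOTS:
        if name not in slots:
            return f"What {name} would you like?"
    return None
```

Every turn costs a few regex matches instead of a model call, and the final order payload is built by code, so its format is guaranteed.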

By spacecadet 2025-06-19 15:20

More or less. Serious? I'm not sure yet.

I have several agent side projects going, the most complex and open ended is an agent that performs periodic network traffic analysis. I use an orchestration library with a "group chat" style orchestration. I declare several agents that have instructions and access to tools.

These range from termshark scripts for collecting packets to analysis functions I had previously written for analyzing the traffic myself.

I can then say something like, "Is there any suspicious activity?" and the agents collaboratively choose who (which agent) performs their role and therefore their tasks (i.e., tools), and work together to collect data, analyze the data, and return a response.

I also run this on a schedule where the agents know about the schedule and choose to send me an email summary at specific times.

I have noticed that the models/agents are very good at picking the "correct" network interface without much input, and that they understand their roles and objectives and execute accordingly, again without much direction from me.

Now the big/serious question: is the output even good or useful? Right now, with my toy project, it is OK. Sometimes it's great and sometimes it's not; sometimes they spam my inbox with micro-updates.

I'm bad at sharing projects, but if you are curious, https://github.com/derekburgess/jaws
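The "group chat" orchestration described in this comment can be sketched without any particular library: agents share one transcript, and a selector picks the next speaker each turn. In a real setup both `select_next` and each agent's `act` would typically be LLM calls with tools; here they are hypothetical stubs, and this is not the jaws implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Message = tuple[str, str]  # (speaker, text)


@dataclass
class Agent:
    name: str
    act: Callable[[list[Message]], str]  # hypothetical: reads the shared transcript, returns a message


@dataclass
class GroupChat:
    agents: dict[str, Agent]
    select_next: Callable[[list[Message]], Optional[str]]  # next speaker's name, None = done
    transcript: list[Message] = field(default_factory=list)

    def run(self, question: str, max_turns: int = 6) -> list[Message]:
        """Library-agnostic sketch: let the selector route turns until it decides the chat is done."""
        self.transcript.append(("user", question))
        for _ in range(max_turns):
            speaker = self.select_next(self.transcript)
            if speaker is None:
                break
            self.transcript.append((speaker, self.agents[speaker].act(self.transcript)))
        return self.transcript
```

The `max_turns` cap bounds how much the agents can talk among themselves, which is one lever against the inbox-spam problem mentioned above.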

By manishsharan 2025-06-19 15:02

I decided to build an agent system from scratch.

It is sort of trivial to build. It's just User + System Prompt + Assistant + Tools in a loop with some memory management. The loop code can be as complex as I want it to be, e.g., I could snapshot the state and restart later.

I used this approach to build a coding system (what else?) and it works just as well as Cursor or Claude Code for me. The advantage is that I can switch between DeepSeek or Flash depending on the complexity of the code, and it's not a black box.

I developed the whole system in Clojure and dogfooded it as well.
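The "User + System Prompt + Assistant + Tools in a loop" structure, with snapshotting and crude memory management, fits in a few functions. This is a hypothetical Python sketch, not the commenter's Clojure code; `model` stands in for any chat-completion call that returns either a tool request or a final answer, and the message dict shape is an assumption.

```python
import json
from typing import Callable

Message = dict  # assumed shape: {"role": ..., "content": ..., optionally "tool" and "arg"}


def agent_loop(messages: list[Message], model: Callable[[list[Message]], Message],
               tools: dict[str, Callable[[str], str]], max_turns: int = 8) -> list[Message]:
    """Alternate model replies and tool results until the model answers without a tool call."""
    for _ in range(max_turns):
        reply = model(messages)
        messages.append(reply)
        if not reply.get("tool"):
            break  # final answer, stop looping
        result = tools[reply["tool"]](reply.get("arg", ""))
        messages.append({"role": "tool", "content": result})
    return messages


def trim(messages: list[Message], keep_last: int = 20) -> list[Message]:
    """Crude memory management: keep the system prompt plus the most recent messages."""
    recent = messages[1:][-keep_last:] if keep_last > 0 else []
    return messages[:1] + recent


def snapshot(messages: list[Message]) -> str:
    """Serialize the whole conversation state so a run can be paused and resumed later."""
    return json.dumps(messages)


def restore(data: str) -> list[Message]:
    return json.loads(data)
```

Because the state is just a list of messages, using a different model for different turns (for example, a cheaper one for simple steps) only changes which function is passed as `model`.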

By logicchains 2025-06-19 16:29

That's interesting, I built myself something similar in Haskell. Somehow functional programming seems to be particularly well suited for structuring LLM behaviour.

By swalsh 2025-06-19 15:46

The hard part of building an agent is training the model to use tools properly. Fortunately, Anthropic did the hard part for us.

By Zaylan 2025-06-20 2:35

These days, I usually start with a basic LLM, layer in RAG for external knowledge, and build workflows to keep things stable and maintainable. Agents sound promising, but in practice, a clean RAG setup often delivers better results with far less complexity.