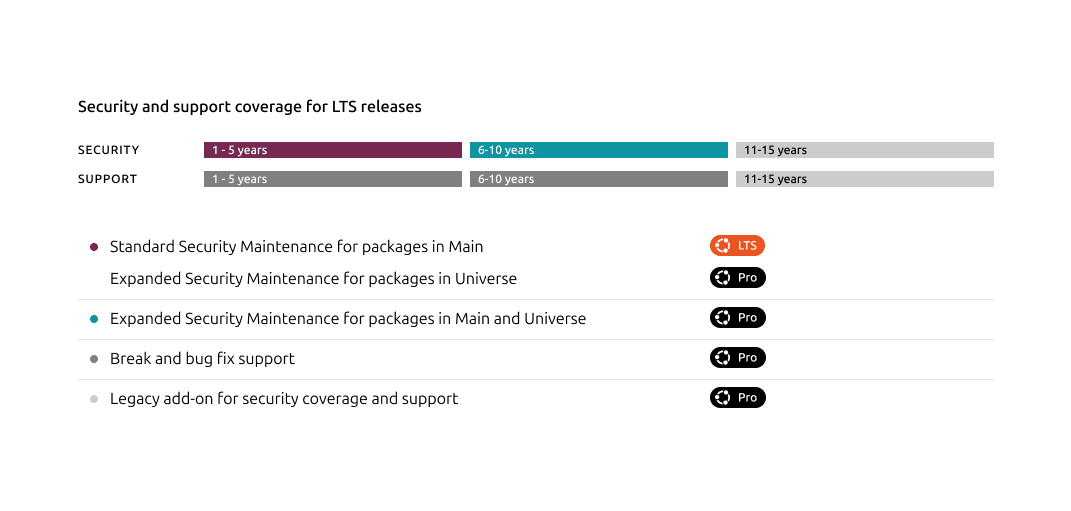

Ubuntu Pro now supports LTS releases for up to 15 years through the Legacy add-on. More security, more stability, and greater control over upgrade timelines for enterprises.

Today, Canonical announced the expansion of the Legacy add-on for Ubuntu Pro, extending total coverage for Ubuntu LTS releases to 15 years. Starting with Ubuntu 14.04 LTS (Trusty Tahr), this extension brings the full benefits of Ubuntu Pro – including continuous security patching, compliance tooling and support for your OS – to long-lived production systems.

In highly regulated or hardware-dependent industries, upgrades threaten to disrupt tightly controlled security and compliance. For many organizations, maintaining production systems for more than a decade is complex, but remains a more sensible option than a full upgrade.

That’s why, in 2024, we first introduced the Legacy add-on for Ubuntu Pro, starting with Ubuntu 14.04 LTS (Trusty Tahr). The Legacy add-on increased the total maintenance window for Ubuntu LTS releases to 12 years: five years of standard security maintenance, five years of Expanded Security Maintenance (ESM), and two years of additional coverage with the Legacy add-on – with optional support throughout. Due to the positive reception and growing interest in longer lifecycle coverage, we’re excited to now extend the Legacy add-on from two years to five, bringing a 15-year security maintenance and support window to Ubuntu LTS releases.

Throughout this 15-year window, Ubuntu Pro provides continuous security maintenance across the entire Ubuntu base, kernel, and key open source components. Canonical’s security team actively scans, triages, and backports critical, high, and select medium CVEs to all maintained LTS releases, ensuring security without forcing disruptive major upgrades that break compatibility or require re-certification.

Break/fix support remains an optional add-on. When production issues arise, you can get access to our Support team through this service and troubleshoot with experts who contribute to Ubuntu every day, who’ve seen similar problems before and know how to resolve them quickly.

The scope of the Legacy add-on itself is unchanged, but the commitment is longer, giving users additional years to manage transition timelines and maintain compliance.

This updated coverage applies from Ubuntu 14.04 LTS onward. With this extension, Ubuntu 14.04 LTS is now supported until April 2029, a full 15 years after its debut.
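The 15-year figure is simply three consecutive five-year phases: standard security maintenance, ESM, and the Legacy add-on. The sketch below is illustrative only and is not a Canonical tool. It assumes each phase starts exactly when the previous one ends and uses 17 April 2014 as the 14.04 LTS release date. Canonical's published end-of-life dates are authoritative, and they are not derived by adding whole years.

```python
from datetime import date

# Phase lengths in years, as described in the announcement:
# 5 years standard security maintenance + 5 years ESM + 5 years Legacy add-on.
PHASES = [
    ("Standard security maintenance", 5),
    ("Expanded Security Maintenance (ESM)", 5),
    ("Legacy add-on", 5),
]


def lifecycle(release: date) -> list[tuple[str, date, date]]:
    """Return (phase, start, end) for each phase; each phase starts when the previous ends."""
    schedule = []
    start = release
    for name, years in PHASES:
        # Add whole years with replace(). This would fail for a 29 February start,
        # but Ubuntu LTS releases ship in April, so it is not an issue here.
        end = start.replace(year=start.year + years)
        schedule.append((name, start, end))
        start = end
    return schedule


if __name__ == "__main__":
    # Assumed release date for Ubuntu 14.04 LTS (17 April 2014).
    for name, start, end in lifecycle(date(2014, 4, 17)):
        print(f"{name}: {start:%b %Y} to {end:%b %Y}")
```

Running it prints three lines. The last one is `Legacy add-on: Apr 2024 to Apr 2029`, which matches the April 2029 date above.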

By committing to a 15-year lifecycle, Canonical gives users:

- Realistic timelines for planning and executing major migrations

- Continuous security and compliance coverage for long-lived systems

- Flexibility to modernize infrastructure strategically rather than reactively

Infrastructure is complicated, and upgrades carry real costs and risks. This expansion acknowledges those realities and gives you the support duration your deployments actually require.

Current Ubuntu Pro subscriptions will continue uninterrupted. No re-enrollment, no reinstallation, no surprise migration projects.

The Legacy add-on is available after the first 10 years of coverage (standard security maintenance plus ESM, and optional break/bug fix support), priced at a 50% premium over standard Ubuntu Pro. This applies whether you’re approaching that milestone with 16.04 LTS or already using the Legacy add-on with 14.04 LTS.

To activate coverage beyond ESM, contact Canonical Sales or reach out to your account manager.

For more information about the Legacy add-on, visit our Ubuntu Pro page.


Comments

By cookiengineer, 2025-11-23 8:58 (6 replies)

This is a good thing, despite my own concerns.

The main answer you get to "why are you using Windows 7?" is exactly this: companies in infrastructure argue that they still get a supported operating system in return (despite the facts, despite EOL, despite the reality of MS not actually patching and just disclosing new vulnerabilities).

And currently there's a huge migration problem because Microsoft Windows 11 is a non-deterministic operating system, and you can't risk a core meltdown because of a popup ad in explorer.exe.

I have no idea why Microsoft is sleeping at the wheel so much, literally every big industry customer I've been at in Europe tells me the exact same thing, and almost all of them were Windows customers, and are now migrating to Debian because of those reasons.

(I'm a proponent of Linux, but if I were a proponent of Windows I'd ask myself wtf Microsoft has been doing for the last 10 years since Windows 7)

By p0w3n3d, 2025-11-23 20:26

I once read a post by an alleged MS Windows developer about a software crisis in the company: young programmers do not want to ReleaseHandle(HWND*), so they rewrite everything (probably in C#). He gave an example of well-built functionality that was rewritten without a lot of its security checks. But I am unable to find it right now. It might have been on Reddit... or somewhere else...

As much as I’d love that picture to be true, how many “big industry” players are moving a sizable number of Windows machines to Debian? And how many Windows machines did they even have to begin with relative to Linux?

On the client side where this “non-deterministic” OS issue is far more advanced, moving away is so rare it’s news when it happens. On the data center side I’ve seen it more as consolidation of the tech stack around a single offering (getting rid of the few Windows holdouts) and not substantially Windows based companies moving to Linux.

By philistine, 2025-11-23 17:55 (2 replies)

It’s about growth. Are any developers choosing to base their new backend on Windows in 2025? Or is Windows only really maintaining the relationships they already have, incapable of building a statistically significant network of new ones?

Even Azure, the new major revenue stream of Microsoft is built on Linux!

By close04, 2025-11-23 18:41

> Even Azure, the new major revenue stream of Microsoft is built on Linux!

Exactly, and has been for some time now. MS wasn’t asleep at the wheel, they just stopped caring about your infra. The money’s in the cloud now, especially the SaaS you neither own nor control.

My question was whether these large companies moving away from Windows are just clearing out the last remnants of the OS, rather than only now shifting their sizable Windows footprint to Linux.

I’m trying to understand what OP was reporting. On the user side almost nobody is moving their endpoints to Linux; on the DC side almost nobody has many Windows machines left to move to Linux after years of already doing this. The trend was apparent for years and years.

By pjmlp, 2025-11-24 21:29

Azure has circa 60% of VMs running Linux, however the underlying infrastructure is built on Windows.

By mixmastamyk, 2025-11-23 18:14

This concurrent post argues consumers are tech-debt to them: https://news.ycombinator.com/item?id=46025196

By stackskipton, 2025-11-23 16:48

Because they don’t care. All those more stable installations using desktop Windows are something I’m not sure they ever wanted; it was just a cost-cutting measure.

By keyringlight, 2025-11-23 11:32 (1 reply)

It's good while the software you run on it still supports that OS. For example, the big one would be anything built on the Chromium (or Electron) framework, which deprecated Win7 support when Microsoft ended ESU support (EOL + 3 years).

Doesn't matter, because Windows can run even DOS software out of the box.

Its compatibility is one of the best.

Yes, sure. Which is why companies shipping old DOS games make them run in dosbox… Makes total sense. Could it be that windows isn't that compatible?

After all no game from >10 years ago runs any longer.

"After all no game from >10 years ago runs any longer."

Stop lying, I can run the original Doom, SimCity and Red Alert on my Windows 10.

You just need to click "run in compatibility mode for Windows xxx" and then you can run it.

By LtWorf, 2025-11-23 23:23

Spoken like a guy who doesn't use WINE.

By 1718627440, 2025-11-24 21:43

So software should be deployed with DOSbox and WINE on Linux on WSL on Windows 7. Got it!

By JackSlateur, 2025-11-23 13:08 (11 replies)

LTS releases, long-support versions and stuff are all confessions of technical and organisational failure.

If you are not able to upgrade your stuff every 2 to 3 years, then you will not be able to upgrade your stuff after 5, 10 or 15 years. After so long, that untouched pile of cruft will be considered legacy, built by people long gone. It will be a massive project, an entire rebuild/refactor/migration of whatever you have.

"If you do not know how to do planned maintenance, then you will learn with incidents"

By da_chicken, 2025-11-23 14:16 (5 replies)

I don't agree, and this feels like something written by someone who has never managed actual systems running actual business operations.

Operating systems in particular need to manage the hardware, manage memory, manage security, and otherwise absolutely need to shut up and stay out of the fucking way. Established software changes SLOWLY. It doesn't need to reinvent itself with a brand new dichotomy every 3 years.

Nobody builds a server because they want to run the latest version of Python. They built it to run the software they bought 10 years ago for $5m and for which they're paying annual support contracts of $50k. They run what the support contracts require them to run, and they don't want to waste time with an OS upgrade because the cost of the downtime is too high and none of the software they use is going to utilize any of the newly available features. All it does is introduce a new way for the system to fail in ways you're not yet familiar with. It adds ZERO value because all we actually want and need is the same shit but with security patches.

Genuinely I want HN to understand that not everyone is running a 25 person startup running a microservice they hope to scale to Twitter proportions. Very few people in IT are working in the tech industry. Most IT departments are understaffed and underfunded. If we can save three weeks of time over 10 years by not having to rebuild an entire system every 3 years, it's very much worth it.

By JackSlateur, 2025-11-23 14:31 (4 replies)

Just for context, I am employed by a multi-billion company (which has more than 100k people)

Here, I'm in charge of some low-level infrastructure components (the kind that absolutely everything relies on: 5 sec of downtime = 5 sec of everything being down)

In one of my scopes, I've inherited a 15-year-old junkyard

The kind with a yearly support

The kind that costs millions

The kind that is so complex, and has seen so little evolution over the years, that nobody knows it anymore (even the people who were there 15 years ago)

The kind that slows everybody else because it cannot meet other teams' needs

Long story short, I've got a flamethrower and we are purging everything

Management is happy, customers are happy too, my mates also enjoy working with sane tech (and not braindamaged shit)

Yes, this is the key distinction: old software that works vs old software that sucks.

The one that sucks was a so-so compromise back in the day, and became a worse and worse compromise as better solutions became possible. It's holding the users back, and is a source of regular headaches. Users are happy to replace it, even at the cost of a disruption. Replacing it costs you but not replacing it also costs you.

The one that works works now, and worked before, too. Its users are fine with it, feel no headache, and loathe the idea of replacing it. Replacing it is usually a costly mistake.

By JackSlateur, 2025-11-23 16:35 (1 reply)

But that software was probably nice, back in the day

It slowly rotted, like everything else

By ordersofmag, 2025-11-23 17:43 (1 reply)

Or it doesn't. Because "software as an organic thing", like all analogies, is an analogy, not truth. Systems can sit there and run happily for a decade performing the needed function in exactly the way that is needed with no 'rot'. And then maybe the environment changes and you decide to replace it with something new because you decide the time is right. Doesn't always happen. Maybe not even the majority of the time. But in my experience running high-uptime systems over multiple decades it happens. Not having somebody outside forcing you to change because it suits their philosophy or profit strategy is preferable.

By JackSlateur, 2025-11-23 21:22 (1 reply)

My guess is that most stuff is part of a bigger whole, and so it rots (unless it is adapted to that ever-changing whole)

Of course, you can have stuff running in a constrained environment

By ordersofmag, 2025-11-23 23:37

Or more likely the 'whole' accesses the stable bit through some interface. The stable bit can happily keep doing its job via the interface, and the whole can change however it likes, knowing that for that particular task (which hasn't changed) it can just call the interface.

By cpncrunch, 2025-11-23 15:40

Sounds like that is a different issue. I prefer to avoid spending a few weeks migrating software that I understand and support to a new OS when I don't have to. Some of it is 30 years old, but it has had all the bugs worked out.

By trueismywork, 2025-11-23 16:04 (1 reply)

You're talking about software. The other person is talking about OS. Big difference.

By JackSlateur, 2025-11-23 16:36

This is exactly the same thing: OS is nothing but software. And, in this specific case, we are talking about appliance-like stuff, where the OS and the actual workloads are bundled together and sold by a third party

By LtWorf, 2025-11-23 23:25

> I am employed by a multi-billion company (which has more than 100k people)

In my personal experience, this could mean that you're really good or that you're completely incompetent and unaware that computers need to be plugged to a power outlet to function.

By JoeBOFH, 2025-11-23 14:37

Having started my IT career in manufacturing: this, 100%. Sometimes we didn’t have a choice. Our support contracts would say Windows XP was the supported OS. We had lines that ran on DOS 5 because it would’ve cost several million in hardware and software to replace them, not counting downtime of the line, and would the new stuff even be compatible with the PLCs and other items?

> .. they don't want to waste time with an OS upgrade because the cost of the downtime is too high and none of the software they use is going to utilize any of the newly available features

Oopsie you got pwned and now your database or factory floor is down for weeks. Recovery is going to require specialists and costs will be 10 times what an upgrade would have cost with controlled downtime.

Not at all, it depends on the level of public exposure of the service.

In a factory, access is the primary barrier.

It's like an onion, outer surface has to be protected very well, but as you get deeper in the zone where less and less services have access then the risk / urgency is usually lowered.

Many large companies are consciously running with security issues (even Cloudflare, Meta, etc).

Yes, on the paper it's better to upgrade, in the real world, it's always about assessing the risk/benefits balance.

Sometimes updates can bring new vulnerabilities (e.g. if you upgrade from Windows 2000 to the "better and safer" Windows 11).

In your example, you are guaranteed to take the factory floor down (for an unknown amount of time: what if PostgreSQL does not come back up as expected, or crashes at runtime in the updated version?).

This is essentially a (hopefully temporary) self-inflicted DoS.

Versus an almost non-existent risk if the machine is well isolated, or even better, air-gapped.

By cma, 2025-11-24 11:40

> Versus an almost non-existent risk if the machine is well isolated, or even better, air-gapped.

Anyone else remember stuxnet?

By da_chicken, 2025-11-24 1:20

I don't understand this comment. What exactly do you think LTS is?

By trueismywork, 2025-11-23 16:02

Kernel live patching takes care of everything.

There's a difference between old software and old OS. Unless you've got new hardware, chances are you never really need a new OS.

By bunnie, 2025-11-23 16:30

I can't upvote this hard enough. It's nice to know there's at least one other person who feels this way out there.

Also, this is the most compelling reason I've seen so far to pay a subscription. For any business that merely relies upon software as an operations tool, it's far more valuable business-wise to have stuff that works adequately and is secure, than stuff that is new and fancy.

Getting security patches without having feature creep trojan-horsed into releases is exactly what I need!

By xnx, 2025-11-24 0:09

I'm reminded of the services that will rebuild ancient electric motors to the exact spec so they can go back on the production line like nothing happened. For big manufacturing operations, it's not even worth the risk of replacing them with a new motor.

By aboringusername, 2025-11-23 13:42 (1 reply)

I'm not sure why there's a need to update anything every 2-3 years. In fact, the pace of change becomes exhausting in itself. In my day-to-day life, things are mostly well designed systems and processes; there's a stable code of practice when driving cars, going to the shops, picking up the shopping, paying for the items and then storing them.

What part of that process needs to change every 2-3 years? Because some 'angel investor' says we need growth which means pushing updates to make it appear like you're doing something?

old.reddit has worked the same for the last 10 years now, new.reddit is absolutely awful. That's what 2-3 years of 'change' gets you.

In fact, this website itself remains largely the same. Why change for the sake of it?

By JackSlateur, 2025-11-23 13:58 (5 replies)

In your day-to-day life, you do chores regularly

Why not clean the room only once every 2-3 years?

By frankchn, 2025-11-23 14:04

I do chores regularly, and I apply security patches regularly.

Major operating system version upgrades can be more akin to upgrading all the furniture and electronics in my house at the same time.

By buildbot, 2025-11-23 15:54

Not that you’ll agree, but cleaning the house sounds more like running rm -rf /tmp and docker system prune than upgrading from, idk, bullseye to bookworm. Let’s call that a bathroom remodel? So sometimes you live in a historic house and the bathroom cannot be remodeled or changed because it’ll fall through the floor or King Louis XV used it once. In software, the historic house could be the PLC firmware controlling the valves in your nuclear reactor cooling loop.

By johnisgood, 2025-11-23 15:54

You keep using this analogy, but it is not comparable, and it is a horrible analogy.

By 9cb14c1ec0, 2025-11-23 15:33 (1 reply)

Why not force everyone to upgrade their cars every 2 to 3 years?

By JackSlateur, 2025-11-23 16:38 (1 reply)

Because it has physical consequences?

Remove that, tell everybody: "hey, for 30 min of your time, you can get a new car every 6 months"

See how everybody will get new cars :)

By stelonix, 2025-11-23 17:20

And then the new car no longer has the camera where you need it, the panel buttons changed, the cup-holder is in another place. Even worse, the upgraded firmware & OS of the car no longer comes with an app you needed; or it does, but removed a feature that was essential for your daily use. All because some SWE takes "computer security" as more important than having a useful system.

It's the kind of rhetoric that enables shoving down user-hostile features during a simple update. And breaking many use cases. Quite common in the FOSS/Linux mentality, not so much on the rest of the world.

By prmoustache, 2025-11-23 16:03 (2 replies)

OTOH I never lived 5 years in the same place and I think it is not that bad of an idea when I look at the sheer amount of unused or barely used shit people hoard over the years in their house.

Then one day people's health or finances dwindle, they need to move to a place without stairs or to a city center closer to amenities such as groceries, pharmacy and healthcare without relying on a car they cannot drive safely anymore, and moving becomes a huge task. Or they die and their survivors have to take on the burden of emptying/donating/selling all that shit accumulated over the years.

Every move I assessed what I really needed and what I didn't and I think my life is better thanks to that.

I understand this is a YMMV thing. I am not saying everyone should move every couple of years. But to many people that isn't that big of a deal and it can be also considered in a very positive way.

By oblio, 2025-11-23 18:34

> I look at the sheer amount of unused or barely used shit people hoard over the years in their house.

Or they could spend a weekend and get rid of that stuff for 10% of the stress of moving.

By exe34, 2025-11-23 16:41

Last time I lived somewhere too long I got called to jury duty, so you're not entirely wrong!

By JackSlateur, 2025-11-23 14:34 (3 replies)

Upgrade != replace with something new (like using another OS or whatever)

By ivell, 2025-11-24 5:54

Backward incompatible change means there is cost associated. It is like changing your furniture every 6 months. New furniture is fun, but unless your needs have changed (like marriage, children), it won't add you any benefit other than a new look...

update/upgrade == replacing with something worse.

"because that's what you do" is not a valid justification.

By JackSlateur, 2025-11-23 21:27

This is a pretty neurotic way of seeing the world

By exe34, 2025-11-23 16:40

How often do you remodel your house?

By JackSlateur, 2025-11-23 13:57 (7 replies)

Why do you need to clean your house every week/couple of weeks ? Why not clean only once a year ?

Keeping your infrastructure/code somewhat up to date ensures that:

- each time you have to upgrade, it is not a big deal

- you have fewer breaking changes at each iteration, and thus less work to do

- when you must upgrade for some reason, the step is, again, not so big

- you are sure you own the infrastructure: the current people own it (versus people who left the company 8 years ago)

- you benefit from innovation (yes, there is some) and/or performance improvements (yes, there are)

Keeping your stuff rotting in a dark room brings nothing good

Why not think of it a different way; why do we need to put up with breaking changes at all?

I'd much rather stand up a replacement system adjacent to the current one, and then switch over, than run the headache of debugging breaking changes every single release.

To me, this is the difference between an update and an upgrade. An update just fixes things that are broken. An upgrade adds/removes/changes features from how they were before.

I'm all for keeping things up to date. And software vendors should support that as much as possible. But forcing me to deal with a new set of challenges every few weeks is ridiculous.

This idea of rapid releases with continuous development is great when that's the fundamental point of the product. But stability is a feature too, and a far more important one in my opinion. I'd much rather a stable platform to build upon, than a rickety one that keeps changing shape every other week that I need to figure out what changed and how that impacts my system, because it means I can spend all of my time _using_ the platform rather than fixing it.

This is why bleeding edge releases exist. For people who want the latest and greatest, and are willing to deal with the instability issues and want to help find and squash bugs. For the rest of us, we just want to use the system, not help develop it. Give me a stable system, ship me bug fixes that don't fundamentally break how anything works, and let me focus on my specific task. If that costs money, so be it, but I don't want to have to take one day per week running updates to find something else is broken and have to debug and fix it. That's not what I'm here to do.

And as for cleaning the house - we always have the option of hiring a cleaner. That costs us money, but they keep the house cleanliness stable whilst we focus on something else to make enough money to cover the cleaner's cost plus some profit.

By dpoloncsak, 2025-11-24 21:31

I assume the cost of duplicating every piece of infra we have to create a suitable 'replacement system' would cost more than the impact the few times we have had downtime due to breaking changes in updates. Ymmv ofc

By JackSlateur, 2025-11-23 14:33

Because many components are "all or nothing"

And also because, for the others, you have to migrate everybody from the "old" to the "new": large project, low value, nobody cares, "just do your job and don't bother us with your shit"

By epistasis, 2025-11-23 15:05

Considering "upgrading" to be "cleaning" is very odd. Same with "rotting".

Perhaps this is a side effect of dealing with software development ecosystems with huge dependency trees?

There's a lot of software not like that at all. No dependencies. No internet connection. No multi kilobyte lock files detailing long lists of continual software churn and bug fixes.

By wiseowise, 2025-11-23 17:29

> Why do you need to clean your house every week/couple of weeks ? Why not clean only once a year ?

OS is not a physical house with life waste.

The rest of your message doesn’t make any sense for the majority of the industry. For anything dealing with manufacturing, stability is much more important than marginal performance gains. Any downtime is lost money.

By layer8, 2025-11-23 16:30

Why would the same exact software be considered “unclean” or “rotten” a few years down the line when it previously wasn’t? What has changed? Did it need to?

I've lost count of how many Ubuntu upgrades resulted in some weird problem (network interfaces renamed, lost default route, systemd timeouts taking 5 minutes, etc.)

There is an argument for staying on the latest stable version.

By loosescrews, 2025-11-23 17:09 (1 reply)

I think you are talking about an upgrade install. Those have a long history of breaking things. You would have to be crazy to attempt one of those on a critical production system.

What you would do for anything important is build a new separate system and then migrate to that once it is working. You can then migrate back if you discover issues too.

By icedchai, 2025-11-23 18:56

Yes. These sorts of upgrades were done on my home network, not an actual work-related system.

Unfortunately Debian wouldn't be exempt from "network interfaces renamed, lost default route, systemd timeouts taking 5 minutes, etc." because systemd is systemd (and Debian systemd maintainers just go along with upstream and do nothing to mitigate it)

I think interface names changed like at least 2 times in the last 4 releases.

Maybe? I run Debian on several VMs and my experience has proven otherwise. Major upgrades go a lot smoother than on Ubuntu.

By kasabali, 2025-11-25 6:03

Smoother, sure. I'm talking about interface name changes. It's nice you haven't encountered these releases, but they sure happened in Debian, too.

By exe34, 2025-11-23 14:09

It didn't need to be this way. It's a choice made by companies who stand to gain from the continuous churn.

By Kenji, 2025-11-23 16:11

I bought a hammer 2 decades ago and it still works flawlessly without me performing any maintenance. One day, the idiots in software engineering will realize that this is how tools are supposed to behave.

What kind of argument is "upgrade your stuff every 2 to 3 years"? What are you upgrading for? If the software runs fine and does its job without issues, what "stuff" is there to upgrade?

By Nextgrid, 2025-11-23 19:09

> What are you upgrading for?

So that whoever is doing the upgrade can justify their salary and continued employment.

This is what someone would say who has never worked on anything serious, or in a regulated industry.

By foofoo12, 2025-11-23 18:45

Yep, let alone life critical systems. You don't fuck with them just because.

By Y_Y, 2025-11-23 14:04

Consider that the average CTO is about 50† and that people roughly expect to retire at 65 and die at 80.

If you can get away with one or zero overhauls of your infra during your tenure then that's probably a hell of a lot easier than every two to three years.

† https://www.zippia.com/chief-technology-officer-jobs/demogra...

By dawnerd, 2025-11-23 18:03

Not necessarily. There are cases where hardware support ends and trying to get drivers for a newer kernel is basically impossible without a lot of work. For example, one of my HighPoint HBAs is completely unusable without running an older kernel. I imagine there’s more custom-designed hardware with the same problem out there.

You would be amazed how many Fortune 500 companies are still using RHEL/CentOS 7 in business-critical systems. (I was, anyway.)

By xorcist, 2025-11-23 16:15

That's .. not the least bit surprising. It's not ancient or anything. It's still under commercial support from the vendor, even if it is sunset.

>If you are not able to upgrade your stuff every 2 to 3 years, then you will not be able to upgrade your stuff after 5, 10 or 15 years.

What happens if your software takes 2 years to develop?

By JackSlateur, 2025-11-23 21:25

If your software needs the world to stop moving for 2 years so that it can be prepared and released successfully, I am afraid your software will never be released :(

By cyanydeez, 2025-11-23 13:19

Sure. But infrastructure will always be seen as a one-time cost because enshittification ensures every company with merit transitions from merit leaders to MBA leaders.

This happens so often it's basically a failure of capitalism.

By nebula8804, 2025-11-23 5:10 (14 replies)

The person having to maintain this must be in a world of hurt. Unless they found someone who really likes doing this kind of thing? Still, maintaining such an old codebase while the rest of the world moves on...ugh...

Maybe I'm the odd one out but I love doing stuff that has long term stability written all over it. In fact the IT world moving as fast as it does is one of my major frustrations. Professionally I have to keep up so I'm reading myself absolutely silly but it is getting to the point where I expect that one of these days I'll end up being surprised because a now 'well known technique' was completely unknown to me.

By bionsystem, 2025-11-23 11:06 (1 reply)

I agree. We are going as far as being asked to release our public app on a self-hosted kube cluster in 9 months, with no kube experience and nobody with a CKA in a 2.5-person ops team. "Just do it it's easy" is the name of the game now: if you fail you're bad, if you offer stability and respect delivery dates you are old-fashioned, and the discussion comes back every week and every warning and concern is ignored.

I remember a long time ago one of our clients was a bank: they had 2 datacenters with a LACP router, SPARC machines, Solaris, VxFS, Sybase, Java app. They survived 20 years with app, OS and hardware upgrades and 0 seconds of downtime. And I get lectured by a developer with 3 years of experience that I should know better.

By nubinetwork, 2025-11-23 12:21 (1 reply)

> "just do it, its easy"

If its that easy, then why aren't they doing it instead of you? Yeah, I thought so.

By le-mark, 2025-11-23 13:40

> "just do it, its easy"

This is where devops came from. Developers saw admins and said I can do that in code! Every time egotistical, eager to please developers say something is easy, business says ok, do it.

This is also where agile (developers doing project management) comes from.

By lucideer, 2025-11-23 11:09

> I love doing stuff that has long term stability written all over it

I also love doing stuff that has long term stability written all over it. In my 20 year career of trying to do that through various roles, I've learnt that it comes with a number of prerequisites:

1. Minimising & controlling your dependencies. Ensuring code you own is stable long term is an entirely different task to ensuring upstream code continues to be available & functional. Pinning only goes so far when it comes to CVEs.

2. Start from scratch. The effort to bring an inherited codebase that was explicitly not written with longevity in mind into line with your own standards may seem like a fun challenge, but it becomes less fun at a certain scale.

3. Scale. If you're doing anything in (1) & (2) to any extent, keep it small.

Absolutely none of the above is remotely applicable to a project like Ubuntu.

> Unless they found someone who really likes doing this kind of thing?

There are more people like that than one might think.

There's a sizable community of people who still play old video games. There are people who meticulously maintain 100-year-old cars, restore 500-year-old works of art, and find their passion in exploring 1000-year-old buildings.

The HN front page still regularly gets posts lamenting the loss of the internet culture of the 80s and 90s and trying to bring back what their authors perceive as lost. I'm sure there are a number of bearded dudes who would commit themselves to keeping an old distro alive, just for the sake of not having to deal with systemd, for example.

> There's a sizable community of people who still play old video games.

I went to the effort of reverse engineering part of Rollercoaster Tycoon 3 to add a resizable windowed mode and fix its behaviour with high-polling-rate mice... It can definitely be interesting to make old games behave on newer platforms.

By bfkwlfkjf 2025-11-23 7:34 Search YouTube for "gog noclip documentary", without quotes. Right up your alley.

By throwaway7356 2025-11-23 11:01 > I'm sure there are a number of bearded dudes who would commit themselves to keeping an old distro alive, just for the sake of not having to deal with systemd for example.

I don't think so: there are Debian forks that aspire to fight against the horrors of GNOME, systemd, Wayland and Rust, but they don't attract people to work on them.

By bradfa 2025-11-23 13:11 That there are so many indicates to me the opposite. There are lots of people who want to work on that kind of thing, just they all have slightly different opinions as to which part is the part they’re fighting against, hence so many different forks.

The forks are all volunteer projects (except Ubuntu), so there's no capitalist driving force pushing people with slightly different opinions to work together.

By vladak 2025-11-23 21:23 One of my colleagues (who has no beard) half-jokingly called this sort of job "retrocomputing". It definitely has its pros and cons.

On the other hand: dealing with 14.04 is practically cutting edge compared to stuff still using AIX and HPUX, which were outdated even 20 years ago lol

It's because they stopped development in the late 90s. Before Windows 95 (Chicago) came out, HP-UX with VUE was really cutting edge. IBM kinda screwed it up when they created CDE out of it though.

And besides the GUI, all unixes were way more cutting edge than anything Windows except NT. Only when that went mainstream with XP did it become serious.

I know your 20-year timeframe is after XP's release, but I just wanted to point out there was a time when the unixes were way ahead. You could even get common software like WP, Lotus 1-2-3 and even Internet Explorer and the consumer Outlook (I forget the name) for them in the late 90s.

By muterad_murilax 2025-11-23 11:21 > IBM kinda screwed it up when they created CDE out of it though.

Could you please elaborate?

By wkat4242 2025-11-23 11:47 VUE was really "happy", clean. Sans-serif fonts. Cool colours. Funny design, like an HP logo and an on/off button on the dock.

IBM made it super suit-and-tie. Geriatric colour schemes with dark colours, formal serif fonts and anything cool removed.

Functionally it was the same (even two or three features were added) but it went from "designed for people" to "designed for business". Like everything that IBM got their hands on in those days (these days they make nothing of consequence anymore anyway, they're just a consulting firm).

It was really disappointing to me when we got the "upgrade". And HP was really dismissive of VUE because they wanted to protect their collaboration deal.

I think 10.30 was peak HP-UX. 11 and 11i were the decline.

By egorfine 2025-11-23 12:13 Well, I look at it from a relativistic perspective. See, AIX or HPUX are frozen in time and there is no temptation whatsoever within those two environments.

Stuck on Ubuntu 14.04, you can actually look out the window and see what you are missing by being stuck in the past. It hurts.

By pjmlp 2025-11-23 14:19 AIX is still getting new releases; don't lump it in with HP-UX.

By asteroidburger 2025-11-23 7:02 You're not adding new features or anything like that, just patching security vulnerabilities in a forked branch.

Sure, you won't get the niceties of modern developments, but at least you have access to all of the source code and a working development environment.

By worthless-trash 2025-11-23 7:21 As someone who actively maintains old RHEL: the development environment is something you can drag forward.

The biggest problem is fixing security flaws with patches that don't have 'simple' fixes. I imagine that they are going to have problems accurately determining whether older code bases are vulnerable where the code is similar, but not the same.

By littlestymaar 2025-11-23 10:12 > I imagine that they are going to have problems with accurately determining vulnerability in older code bases where code is similar, but not the same.

That sounds like a fun job actually.

If you can find the patches, it's fun to tweak them in the most conservative way possible to make them apply to the old code base.

However, things get annoying once something ends up on some priority list (like the Known Exploited Vulnerabilities list from CISA), you ship the software in a much older version, and there is no reproducer and no isolated patch. What do you do then? Rebase to get the alleged fix? You can't even tell if the vulnerability was present in the previous version.

By littlestymaar 2025-11-24 12:14 > However, things get annoying once something ends up on some priority list (like the Known Exploited Vulnerabilities list from CISA), you ship the software in a much older version, and there is no reproducer

There are known exploited vulnerabilities without a PoC? TIL, and that indeed doesn't sound fun at all.

By fweimer 2025-11-24 21:05 Distribution maintainers who do the backports do not necessarily have access to this kind of information. My impression is that open sharing of in-the-wild exploits isn't something that happens regularly anymore (if it ever did), but I'm very much out of the loop these days.

And access to the reproducer is merely a substitute for the lack of a public vulnerability-to-commit mapping for software that has a public version control repository.

By worthless-trash 2025-11-24 8:01 This guy backports.

The unfortunate problem is that the more popular software is, the more it gets looked at and the more its code gets worked on. But as forked branches age, they become less and less likely to get looked at.

Imagine a piece of software that is on some LTS, but it's not that popular. Bash is going to be used extensively, but what about a library used by one package? And the package is used by 10k people worldwide?

Well, many of those people have moved on to a newer version of a distro. So now you're left with 18 people in the world using a 10-year-old LTS, so who finds the security vulnerabilities? The distro sure doesn't; distros typically just wait for CVEs.

And after a decade, the codebase has often diverged enough that vulnerability research on the newer code won't help with the older code. They're basically unique codebases at that point. Who's going through that unique codebase?

I'd say that a forked, LTS apache2 (just an example) on a 15-year-old LTS is likely used by 17 people and someone's dog. So one might ask: would you use software which is a security concern, let's say an HTTP server or whatnot, if only 18 people in the world looked at the codebase? Used it?

And are around to find CVEs?

This is a problem with any rarely used software. Fewer hands on it means less chance of finding vulnerabilities. On a 15-year-old LTS, all software is rare.

And even though the software is rare, if an adversary discovers it is in use, they can then probe it to their heart's content, looking for a vulnerability.

By rlpb 2025-11-23 16:04 > I'd say that a forked, LTS apache2 (just an example) on a 15-year-old LTS is likely used by 17 people and someone's dog.

Likewise, the number of black hats searching for vulnerabilities in these versions is probably zero, since there isn't a deployment base worth farming.

Unless you're facing a targeted attack, where an adversary is willing to go to huge expense to find fresh vulnerabilities specifically in the stack you're using, you're probably fine.

I agree with your sentiment that no known vulnerabilities doesn't mean no vulnerabilities, but my point is that the risk scales down with the deployment numbers as well.

And always keeping up with the newest thing can be more dangerous in this regard: new vulnerabilities are being introduced all the time, so your total exposure window could well be larger.

By bradfa 2025-11-23 13:07 If no one is posting CVEs that affect these old Ubuntu versions, then Canonical doesn’t have to fix anything. I realize that’s not your point, but it almost certainly is a part of Canonical’s business plan for setting the cost of this feature.

The Pro subscription isn’t free, and clearly Canonical think they will have enough uptake on old versions to justify the engineering spend. The market will soon tell them whether they’re right. It will be interesting to watch. So far it seems clear they have enough Pro customers to think expanding it is profitable.

By fweimer 2025-11-23 14:39 You typically need to maintain much newer C++ compilers because things from the browser world can only be maintained through periodic rebases. Chances are that you end up building a contemporary Rust toolchain as well, and possibly more.

(Lucky for you if you excluded anything close to browsers and GUIs from your LTS offering.)

By SoftTalker 2025-11-23 5:19 Some people just want a job, they don’t wrap up their sense of self worth in it.

Nothing to do with self-worth. It is a meaningful job, but a fun one?

By wjnc 2025-11-23 8:25 A clear mission, a well-set-up team and autonomy in execution can make most jobs fun to do? Stress, lack of autonomy, lack of a clear mission, and bad teams and management are, I think, the root of unhappy work?

By cyber_kinetist 2025-11-23 8:12 Not all jobs are fun, but they can be bearable if meaningful enough (whether that means being useful to other people, or even just providing a living wage to support your family).

By al_borland 2025-11-23 7:01 Most people I know don’t like chasing the latest framework that everyone will forget about in 6 months.

By perlgeek 2025-11-23 11:46 When I'm writing new software, I kinda hate having to support old legacy stuff, because it makes my life harder, and means I cannot depend on new library or language features.

But that's not what's happening here; this is probably mostly backporting security fixes to older versions. I haven't done that to any meaningful extent, but why wouldn't you find a sense of purpose in it? And if you do, why wouldn't it be fun?

By 2b3a51 2025-11-23 10:42 I'm wondering how the maintenance effort would be organised.

Would it be existing teams in the main functional areas (networking, file systems, user space tools, kernel, systemd &c) keeping the packages earmarked as 'legacy add-on' as they age out of the usual LTS, old LTS, oldold LTS and so on?

Or would it in fact be a special team, with people spending most of their working week on the legacy add-on?

Does Canonical have teams that map to each release, tracking it down through the stages, or do they have functional teams that work on streams of packages that age through?

By dehugger 2025-11-24 18:02 Why would old code be worth less? To me it's a great achievement for a codebase to maintain stability and usability over a long timespan.

If you venture even five feet into the world of enterprise software (particularly at non-tech companies) you will discover that fifteen years isn't a very long time. When you spend many millions on a core system that is critical to your business, you want it to continue working for many, many years.

By ahartmetz 2025-11-23 9:26 IME (do note, the things I've dealt with were obsolete for a much shorter time), such work isn't particularly ugly even though the idea of it is. Some of it will feel like cheating because you just need to paraphrase a fix, some of it will be difficult because critical parts don't exist yet. Maybe you'll get to implement a tiny version of a new feature.

By Vinnl 2025-11-23 15:57 It's extra fun because it's not their own codebase; it's a bunch of upstreams that never planned to support it for that long. If they're lucky, some of them will even receive the bug reports and complaints directly...

By randomtoast 2025-11-23 11:41 I guess they are betting that AI can semi-auto patch this distro for 15 years.

By kasabali 2025-11-24 13:36 They aren't gonna patch it for 15 years; 11 years have already passed.

By xandrius 2025-11-23 20:11 For the right price, I wouldn't mind.