CamperBob2

Karma: 12712

Created: 2012-05-06

Recent Activity

Commented: "GPT Image 1.5"

Inspiring artists =/= involuntarily training privately owned LLMs that charge for access.

Agreed there, which is why it's important to work for open access to the results. The resulting regime won't look much like present-day copyright law, but if we do it right, it will be better for us all.

In other words, instead of insisting that "No one can have this," or "Only a few can have this," which (again) will not be options for works that you release commercially, it's better IMHO to insist that "Everyone can have this."

I don't think we'll get genuine AGI without long-term memory, specifically in the form of weight adjustment rather than just LoRAs or longer and longer contexts. When the model gets something wrong and we tell it "That's wrong, here's the right answer," it needs to remember that.

Which obviously opens up a can of worms regarding who should have authority to supply the "right answer," but still... lacking the core capability, AGI isn't something we can talk about yet.

LLMs will be a part of AGI, I'm sure, but they are insufficient to get us there on their own. A big step forward but probably far from the last.

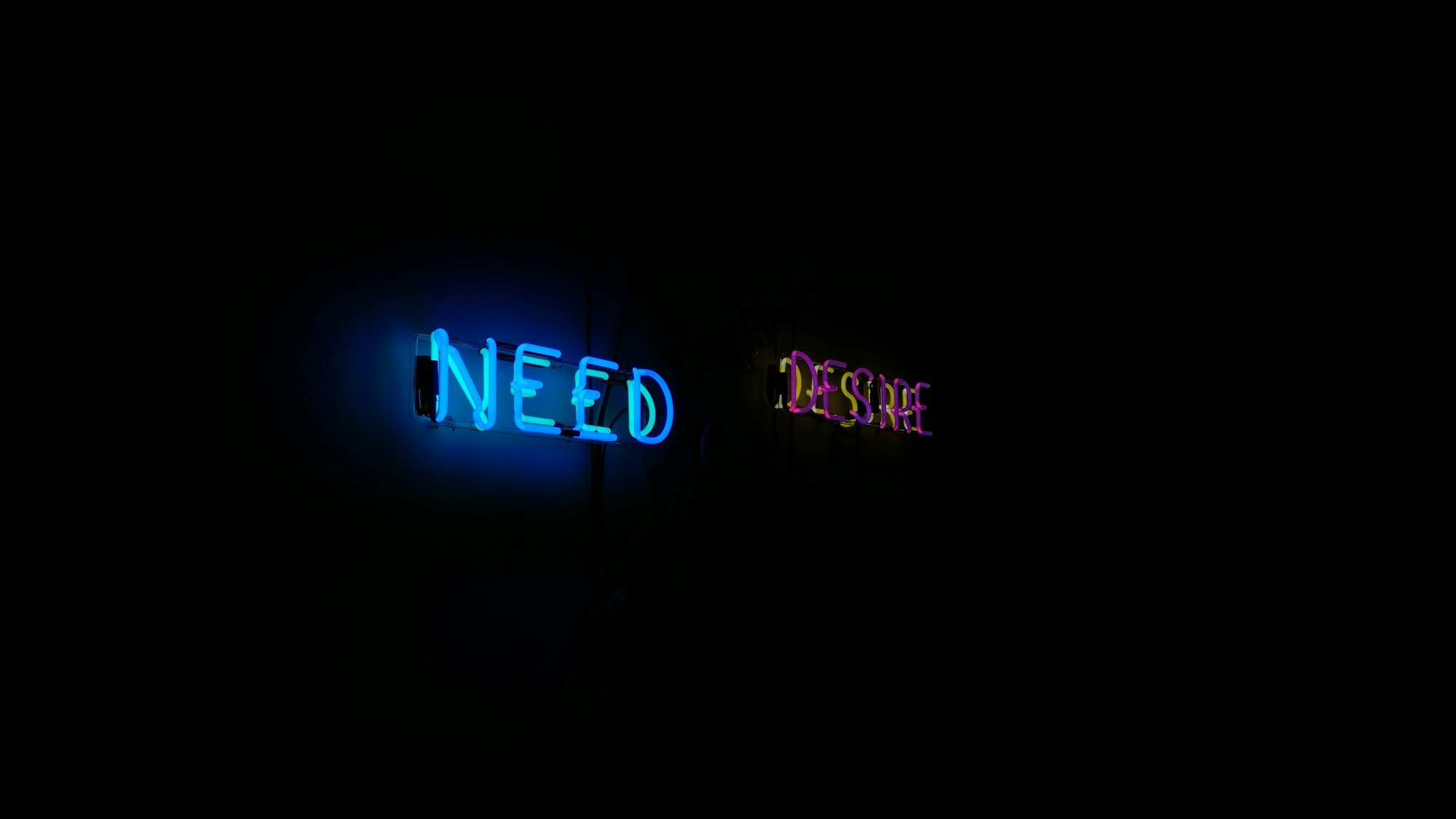

Commented: "Thin desires are eating life"