CES 2025 Announcement from Blur Busters “Area51” UFO Lab – BBOSDI Version 1.01 – Initial Draft Version

Part 1: The CRT Simulation Breakthrough Leads to Plasma TV Simulation and CRT-VRR Experiments

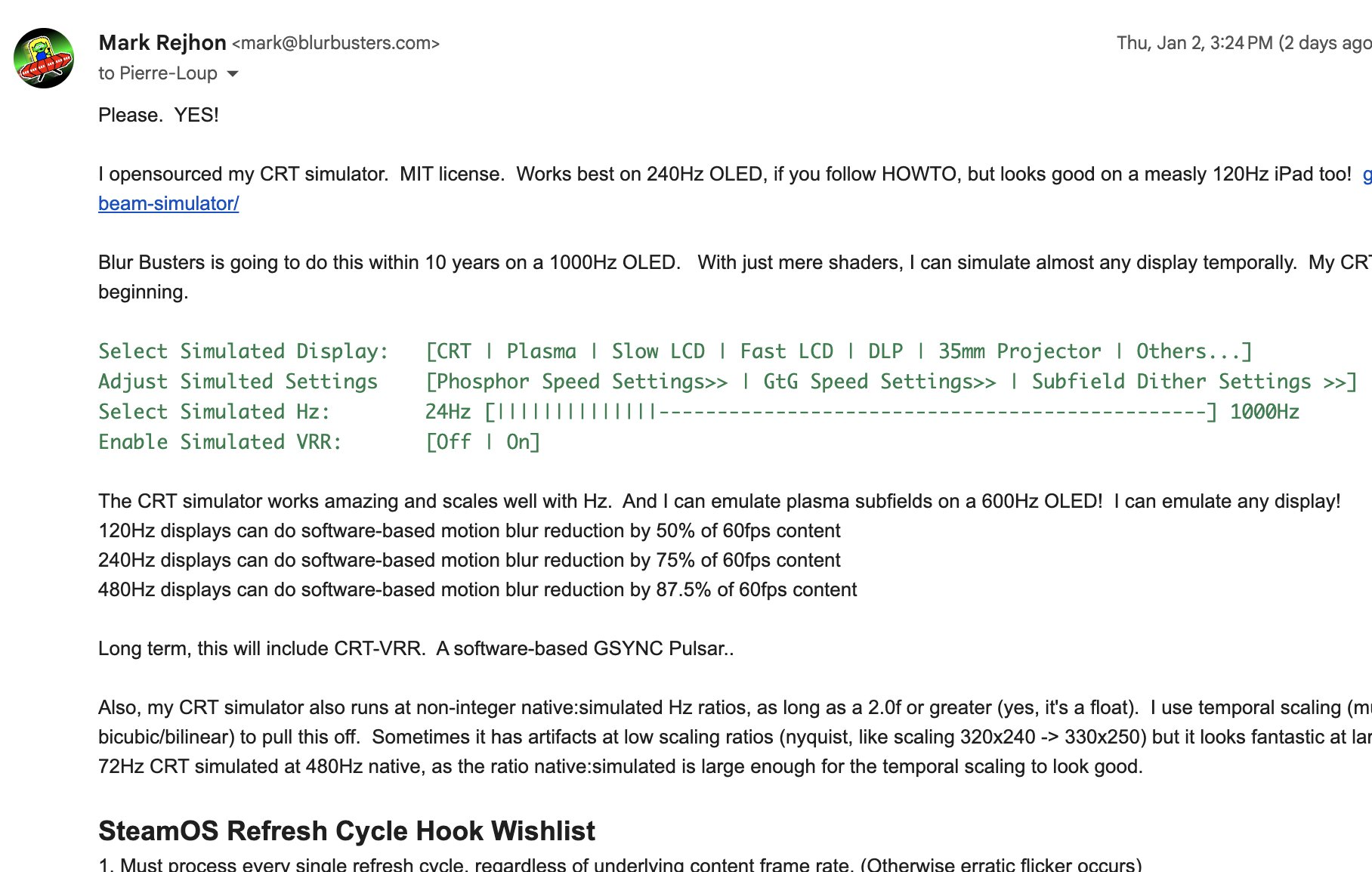

With the Blur Busters real-time CRT electron beam simulation breakthrough, our open source code now successfully simulates a CRT and reduces motion blur at any simulated refresh rate, including simulated 60Hz for 60fps content (with less flicker than eye-searing squarewave PWM BFI strobe).

The CRT shader does not even require integer-divisible native:simulated Hz ratios, as we do temporal scaling (the temporal equivalent of good spatial scaling). Later in 2025, we plan to release a plasma display simulation shader (frame-stacked 3D dither x-y-time).

Since our CRT shader does not require integer native:simulated Hz ratios, we are now experimenting in CRT-VRR (software based VRR strobing) as well as other display simulation algorithms.
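One way to picture temporal scaling with a non-integer ratio is to map each native refresh onto the slice of the simulated refresh cycle(s) it overlaps. The following is a minimal Python sketch of that bookkeeping only, not the actual shader code; the function name `scanout_segments` and its return layout are hypothetical, chosen for illustration.

```python
import math
from fractions import Fraction


def scanout_segments(native_hz, simulated_hz, native_frame):
    """Return the simulated-cycle slices covered by one native refresh.

    Each slice is (simulated_cycle_index, start_phase, end_phase), where a
    phase is a fraction (0..1) of one simulated refresh cycle.
    """
    # Simulated refresh cycles that elapse during one native refresh.
    step = Fraction(simulated_hz, native_hz)
    start = native_frame * step
    end = start + step
    segments = []
    while start < end:
        cycle = math.floor(start)
        # Cut the slice at the next simulated cycle boundary, if crossed.
        slice_end = min(end, Fraction(cycle + 1))
        segments.append((cycle, start - cycle, slice_end - cycle))
        start = slice_end
    return segments
```

For 72Hz simulated on a 280Hz display, native refresh 3 straddles two simulated cycles: it covers the last 8/35 of cycle 0 and the first 1/35 of cycle 1, so a shader working this way would weight its output across both slices.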

Attracting the Community with Proof of Concept Demonstrations

The retrogaming community has brilliantly skilled open source software developers working in the SPATIAL dimension (CRT filters, scanlines, phosphor simulation, etc).

But Blur Busters? We do the TEMPORAL dimension, since we’re the Hz geniuses. Motion, blur reduction, display simulation, interlace simulation, subfield simulation, BFI, GtG simulators, etc.

With the launch of TestUFO Version 2.1, we have added more display simulators. Now we have extended this to a third dimension, thanks to brute refresh rates making this possible. We can use upcoming 600 Hz displays to simulate a 600 Hz plasma TV, using stacked dithered images for subfields.
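To illustrate the idea of stacking dithered images as subfields, here is a deliberately simplified Python sketch. It is not the planned plasma shader: real plasma panels use weighted subfields, whereas this sketch assumes equal-weight binary subfields, and the spatial offset pattern and the name `plasma_subfields` are hypothetical.

```python
def plasma_subfields(frame, n_subfields):
    """Split a linear-light frame into equal-weight binary subfields.

    `frame` is a list of rows of floats in 0..1 (linear light, not gamma
    encoded). Returns `n_subfields` frames containing only 0 or 1.
    """
    subfields = []
    for t in range(n_subfields):
        field = []
        for y, row in enumerate(frame):
            out_row = []
            for x, value in enumerate(row):
                # Number of subfields in which this pixel should be lit.
                level = round(value * n_subfields)
                # Hypothetical spatial offset so neighbouring pixels light up
                # in different subfields (dither across x, y and time).
                offset = (x * 3 + y * 7) % n_subfields
                lit = (t + offset) % n_subfields < level
                out_row.append(1 if lit else 0)
            field.append(out_row)
        subfields.append(field)
    return subfields
```

With 10 subfields (60fps content on a 600 Hz display), a pixel at 0.3 linear intensity is lit in exactly 3 of the 10 subfields, so its time-averaged light output matches the source frame.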

Our popular display testing website already has the TestUFO interlacing demo (View at 60 Hz + 400% browser zoom), TestUFO color wheel simulator (View at 240Hz+), TestUFO black frame insertion demo (View at 120Hz+), TestUFO photo version of BFI (View at 120Hz+), and TestUFO VRR simulator! So we already simulate line items of displays. However, as we roar higher in Hz, it enables more simulation superpowers!

IMPORTANT: TestUFO integrity check: Make sure your browser is framepacing properly, in single-monitor mode on the primary monitor, with no red spikes in the TestUFO Frame Pacing Tester, to prevent erratic flicker problems.

Yes, we’re porting the CRT shadertoy to TestUFO soon too. All display simulators will be built as TestUFO demos, even if some may arrive at shadertoy first.

Why Are We Doing This?

We have been working for years to improve display technology through programmes such as Blur Busters Approved and Blur Busters Verified, with successes such as ViewSonic XG2431 and Retrotink 4K.

- Limiting Factor: Display scaler/TCONs are not as programmable as we would like

- Limiting Factor: Modern OLEDs are unable to do flexible-range BFI (e.g. 48Hz-240Hz range, even for fixed-Hz), and unable to do adjustable pulsewidth in software. Some vendors refuse to implement our requests.

- Limiting Factor: Some shaders outperform firmware now. Even Retrotink 4K BFI box-in-middle has less latency than a popular brand’s OLED firmware BFI, because of the incredible partially beam-raced optimizations I helped Retrotink 4K do.

- Limiting Factor: Communication problem between a headquarters and a factory speaking in a different language, making display algorithms extremely difficult to engineer in an era of falling-cost displays.

- Limiting Factor: Generic scaler/TCON is used with severely quality-degrading attributes such as outdated 17×17 overdrive lookup tables, formerly good at 60Hz, that look absolutely awful at 360Hz+ or panels with very asymmetric GtG response (e.g. tiny GtG hotspots that 17×17 OD LUTs cannot cover properly for good strobing).

- Limiting Factor: We can’t do ergonomic creativity in both directions, including an adjustable LCD GtG simulator for OLED panels to make Netflix 24fps stutter less (24fps stutters more on OLED than LCD)

- Limiting Factor: Aging greybeard CRT and display engineers, trained on yesteryear research, needing to collaborate with star shader programmers & modern temporally skilled people, only recently realizing that a 480Hz OLED very accurately emulates most temporal aspects of a CRT tube via a GPU shader, with nigh-100% population human visibility.

- Limiting Factor: There are both mainstream and niche spinoff benefits that display manufacturers aren’t delivering for us, as evidenced by the software-based CRT simulator eventually leading to CRT-VRR, etc.

GPUs are getting more powerful. Why not do some blur busting in a shader instead? A modern RTX 3080 is now powerful enough to do a software-based equivalent of “GSYNC Pulsar” for a future 1000Hz OLED. Much easier software development work! Instead of limited FPGA talent, the world has tons of shader star programmers. In addition, ordinary VRR can now be simulated via brute refresh cycle blending algorithms similar to TestUFO VRR Simulator.

Great Filter-Down of BYOA (Bring Your Own Algorithm)

- Stage 1: Bleeding edge communities (with great shader programmers) create shaders.

- Stage 2: Shaders get implemented into software such as the RetroArch emulator (which now has the CRT simulator), as well as other things like Retrotink 4K and others (which are receiving the CRT simulator too)

- Stage 3: Operating systems with refresh cycle hooks can then accept optional plug-in shaders.

- Stage 4: Display manufacturers can port shaders to FPGA if they wish. But we’re not waiting for them.

Blur Busters is currently communicating with Valve, and has now committed to adding a refresh cycle shader system to SteamOS that runs independently of content frame rate.

DRAFT Specification v1.01 – Refresh Cycle Shader

- Operating Systems (e.g. Valve SteamOS, Microsoft Windows, Apple MacOS/iOS, Linux);

- GPU driver vendors (e.g. NVIDIA, AMD, Intel, Qualcomm);

- Display manufacturers (the whole industry);

- Video Processor Vendors and Video Capture Vendors (e.g. Retrotink, Elecard);

- Independent Software Vendors (e.g. RetroArch, Reshade)

We hereafter call this the “core subsystem”.

Possible Use Cases of Refresh Cycle Shaders

There are mainstream and niche use cases.

- Display simulators (CRT simulator, plasma simulator, etc);

- Adjustable pixel response and overdrive (shader-based overdrive algorithm, better LCD overdrive, etc);

- Ergonomic features (deflickering features, destuttering features, etc);

- Motion blur reduction features (softer impulsing-based simulators using phosphor simulators);

- Improved gaming and de-stuttering features (simulated VRR, de-jittering gametimes, etc);

- Improved home theater (Simulating 35mm projector / simulated LCD GtG, less harsh for 24fps OLED);

- Videophile ISF-league features (Color adjustment hooks and preferred-tonemapping hooks);

- High-end esports gaming (Some, not all, algorithms are less lag in refresh cycle shader);

- Easier 3D shutter glasses on ANY 240Hz+ generic display with NO crosstalk;

(Most 240Hz displays work with generic shutter glasses via LEFT/BFI/RIGHT/BFI sequence).

- Etc, etc, etc.

Mandatory Driver Subsystem Requirements (RFC2119 “MUST”)

Your software, display, hardware, driver, or operating system MUST:

- Contain a GPU that supports a shader programming language;

(you can use the same GPU used for other purposes such as gaming/streaming. That means an existing GPU in a display used for apps, can also do double duty doing refresh cycle shaders too!)

- Support refresh cycle shader processing, independently of content frame rate.

(e.g. CRT simulation on 240Hz OLED must run continually every Hz)

- Support plug-in refresh cycle shaders, installed by the end user

(e.g. BOTH open source & proprietary shaders)

- Support virtualization of VSYNC, independent of real device VSYNC.

(e.g. 60Hz CRT VSYNC on 240Hz OLED. This allows existing 60fps games/videos to work properly.)

- For displays, support linear nits in at least one display mode

(e.g. post-gamma corrected SDR is very common, but ideally I’d like the first 20% windowing of HDR)

This MAY be internal (RetroArch) and/or driver (OS + GPU driver) and/or box-in-middle (Video processor box) and/or display side (Display with a built-in GPU).
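As a rough illustration of VSYNC virtualization, the sketch below decides on which native refreshes a virtual VSYNC would be signalled to content. It is a minimal Python sketch under stated assumptions: the function name `virtual_vsync_fires` is hypothetical, refreshes are counted from 0, and the virtual Hz is assumed to be at or below the native Hz.

```python
def virtual_vsync_fires(native_hz, virtual_hz, native_refresh):
    """Return True if a virtual VSYNC should fire on this native refresh.

    A virtual VSYNC fires whenever the count of elapsed virtual refresh
    cycles increases between the previous native refresh and this one.
    Assumes virtual_hz <= native_hz and integer Hz values.
    """
    if native_refresh == 0:
        return True  # first virtual refresh cycle starts immediately
    cycles_now = (native_refresh * virtual_hz) // native_hz
    cycles_before = ((native_refresh - 1) * virtual_hz) // native_hz
    return cycles_now != cycles_before
```

For a 60Hz virtual VSYNC on a 240Hz display this fires on native refreshes 0, 4, 8 and so on, so an existing 60fps game sees an ordinary 60Hz cadence while the refresh cycle shader keeps running on every native refresh. The same test also works for non-integer ratios such as 72Hz on 280Hz, where the spacing between virtual VSYNCs alternates between 3 and 4 native refreshes.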

Recommended Subsystem Requirements (RFC2119 “SHOULD”)

Refresh cycle shaders require real time shader computations that involve energy buffers (linear colorspace) to subdivide refresh cycles into multiple subfields.

Subfields MAY involve CRT scanout, plasma subfields, DLP subfields, BFI frames, simulation of LCD GtG, phosphor simulation, and subfields invented for dream non-existent displays never made in hardware, etc.

In order to facilitate this:

- The subsystem SHOULD be able to communicate a time budget to the shader as a debug or uniform (e.g. 1ms, 2ms, 4ms), to allow the shader to determine if there’s enough time to process the shader.

(e.g. compute-heavy display simulators, versus lightweight display simulators)

- The subsystem SHOULD be able to allow configurable virtual refresh rate, complete with virtualized VSYNCs lower than native VSYNCs.

(e.g. 72Hz CRT on 240Hz OLED).

- The subsystem SHOULD relay as much of the following as shader uniforms to the refresh cycle shader:

– Native Hz of display;

– Virtualized Hz (or if VRR simulation shader, current frametime);

– Framebuffer of last 1 or 2 game frames (frame based);

– Whether it’s a changed frame or not (e.g. no new frame has arrived due to low frame rate);

– Resolution, color depth;

– Optional: Game 6dof positionals (e.g. for lagless 10:1 framegen algorithms);

– Optional: Epoch gametime timestamps, if relayed from game engine (e.g. for dejittering duty);

– Optional: Epoch time elapsed between game frame and refresh cycle (e.g. for dejittering duty);

Where it is not possible, inform shader of limitations, so shader metadata can inform whether it’s possible or not.

- Optional: The subsystem MAY allow virtualized Hz above native Hz.

(e.g. This will permit things like splitting 4K 1000Hz over multiple 4K 120Hz LCoS projectors (using Arduino spinning shutter wheels in front of existing projectors), while projection mapping 8 video outputs, stacking onto the same projector screen, flashing one at a time for an unlimited-bounds refresh rate display.)

- Optional: The subsystem MAY NOT assume integer divisors between native Hz and virtual Hz, although this is reasonable initially as a simplification to bootstrap a plug-in shader system. Some shaders support temporal scaling (Temporal version of spatial bilinea

(e.g. Blur Busters CRT simulator can do 24fps Netflix to 72Hz simulated CRT on 280Hz LCD)

- The shader SHOULD be able to inform the subsystem of the approximate workload expected.

(e.g. Informing that a shader is 4x more compute-heavy than the other shader)

- For displays only:

Due to linearspace requirements of some (not all) refresh cycle shaders, the display subsystem SHOULD relay APL/ABL tonemapping algorithm and/or inform constraints (e.g. linear HDR up to first 10% window).
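The recommended uniforms listed above could be grouped into a single structure handed to the shader each refresh cycle. The following Python sketch is only one possible layout: every field and method name is hypothetical, and framebuffers and 6dof positionals are left out because they would be opaque GPU resources rather than plain values.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class RefreshCycleUniforms:
    """Hypothetical per-refresh inputs for a refresh cycle shader."""
    native_hz: float            # native Hz of display
    virtual_hz: float           # virtualized Hz (or 1 / frametime for VRR simulation)
    frame_changed: bool         # False when no new game frame has arrived
    width: int                  # resolution
    height: int
    bits_per_channel: int       # color depth
    # Optional values; None means the subsystem cannot provide them.
    time_budget_ms: Optional[float] = None
    game_timestamp_s: Optional[float] = None
    frame_age_s: Optional[float] = None

    def unavailable(self) -> List[str]:
        """Names of optional uniforms the subsystem could not provide."""
        optional = ("time_budget_ms", "game_timestamp_s", "frame_age_s")
        return [name for name in optional if getattr(self, name) is None]

    def fits_budget(self, estimated_cost_ms: float) -> bool:
        """True if the shader's estimated cost fits the time budget.

        With no budget communicated, the shader cannot know and proceeds.
        """
        if self.time_budget_ms is None:
            return True
        return estimated_cost_ms <= self.time_budget_ms
```

Representing missing optional values explicitly (here as `None`) is one way to satisfy the "inform shader of limitations" recommendation: a shader that needs gametime timestamps for dejittering can check `unavailable()` and fall back or refuse to load.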

Note: Currently, we are often relying on SDR+gamma correction to get access to linearspace for correct Talbot Plateau Law mathematics in refresh cycle shaders such as CRT simulators.
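To show why linear space matters, here is a minimal Python sketch of Talbot-Plateau style brightness compensation using the standard sRGB transfer function. It is not taken from the CRT simulator source; the function `pulsed_srgb` and its `duty` parameter (the fraction of time a pixel is lit) are hypothetical names for illustration.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value (0..1) to linear light (0..1)."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(l):
    """Encode linear light (0..1) back to an sRGB value (0..1)."""
    if l <= 0.0031308:
        return l * 12.92
    return 1.055 * l ** (1 / 2.4) - 0.055


def pulsed_srgb(srgb, duty):
    """sRGB value to show while lit so the time-averaged light matches.

    Per the Talbot-Plateau law, a pixel lit for a fraction `duty` of the
    time must be 1/duty brighter in LINEAR light to look the same. The
    result is clamped at the display's maximum, so bright pixels cannot
    always be fully compensated.
    """
    target = srgb_to_linear(srgb)
    boosted = min(target / duty, 1.0)
    return linear_to_srgb(boosted)
```

Dividing the gamma-encoded value by the duty cycle directly would give the wrong brightness, which is why the boost is applied between the decode and encode steps. The clamp also shows why headroom matters: a full-white pixel at 25% duty cannot be compensated on a display with no extra brightness available.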

While no mandatory requirement exists, if your shader is based on widely available knowledge with no patents, it is RECOMMENDED that generic open source refresh cycle shaders be provided in a reasonably permissive source code license (MIT/Apache) permitting integration into both GPL2/GPL3 projects and proprietary/commercial projects, for fastest & maximum industry spread.

Otherwise, the hardware industry suffers with inferior little-used or little-known entrenched algorithms that get worse quickly over time (e.g. outdated 17×17 LCD overdrive LUTs from yesteryear, still used in 360Hz+ panels) as refresh rates progress to stratospheric levels and create GtG heatmapping issues.

Recommended Testing Displays

- Faster displays perform better.

Example: OLED performs display simulators better than VA LCDs

- High bit-depth displays perform better.

Example: Most TN panels are only 6-bit, interfering with temporal brightness spreading algorithms.

- Higher native:simulated Hz ratios help refresh cycle shaders. More Hz is better.

Example: CRT simulator benefits from more Hz:

– CRT sim on 120Hz sample-and-hold reduces 60fps 60Hz motion blur by up to 50%

– CRT sim on 240Hz sample-and-hold reduces 60fps 60Hz motion blur by up to 75%

– CRT sim on 480Hz sample-and-hold reduces 60fps 60Hz motion blur by up to 87.5%
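The three figures above follow a simple pattern: the upper bound on blur reduction is one minus the simulated:native Hz ratio. The Python sketch below reproduces them; the formula is inferred from the listed numbers rather than quoted from the simulator, and the function name is hypothetical.

```python
def max_blur_reduction(native_hz, simulated_hz=60.0):
    """Upper bound on motion blur reduction versus sample-and-hold.

    Inferred from the figures above: with each pixel lit for as little as
    one native refresh per simulated refresh cycle, persistence shrinks
    from 1/simulated_hz to 1/native_hz.
    """
    return 1.0 - simulated_hz / native_hz


for hz in (120, 240, 480):
    print(f"{hz}Hz: up to {max_blur_reduction(hz):.1%} less motion blur")
```

These are best-case values; real results depend on pixel response and on how much the simulated phosphor decay spreads light across neighbouring native refreshes.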

While the now-released CRT simulator showed noticeable benefit (better than BFI) on a 120Hz IPS LCD, the best testing results occurred with 480 Hz OLEDs. Test using as much Hz as you can. That being said, 240Hz OLED generally produces very good results with the CRT simulators, especially on retro resolutions (e.g. Super Mario).

Researchers have noticed that on OLED, unlike LCD, 120 Hz vs 480 Hz is more human-visible than 60Hz vs 120Hz. The OLED’s instant pixel response (GtG = like a slow moving camera shutter) enables unfiltered refresh rate benefits to human eyes, similar to a 1/120sec camera shutter versus 1/480sec camera shutter. As of January 5th, 480Hz OLEDs are available, and 750Hz LCDs are coming soon.

Specification is Evolving

The founder Mark Rejhon has experience creating specifications (since Mark was born deaf, he worked on XMPP XEP-0301 Real Time Text). However, we release this specification as a Blur Busters page, to bring this specification into the mainstream much more quickly.

With our reputation (30+ peer reviewed citations in papers and industry textbook reading), we welcome collaborators to join our growing consortium for a formalized Version 1.1 specification of this Blur Busters Open Source Display Initiative, to get ready for the Bring Your Own Algorithm (BYOA) Open Source Display Revolution.