New to Honeycomb? Get your free account today.

In a really broad sense, the history of observability tools over the past couple of decades have been about a pretty simple concept: how do we make terabytes of heterogeneous telemetry data comprehensible to human beings? New Relic did this for the Rails revolution, Datadog did it for the rise of AWS, and Honeycomb led the way for OpenTelemetry.

The loop has been the same in each case. New abstractions and techniques for software development and deployment gain traction, those abstractions make software more accessible by hiding complexity, and that complexity requires new ways to monitor and measure what’s happening. We build tools like dashboards, adaptive alerting, and dynamic sampling. All of these help us compress the sheer amount of stuff happening into something that’s comprehensible to our human intelligence.

In AI, I see the death of this paradigm. It’s already real, it’s already here, and it’s going to fundamentally change the way we approach systems design and operation in the future.

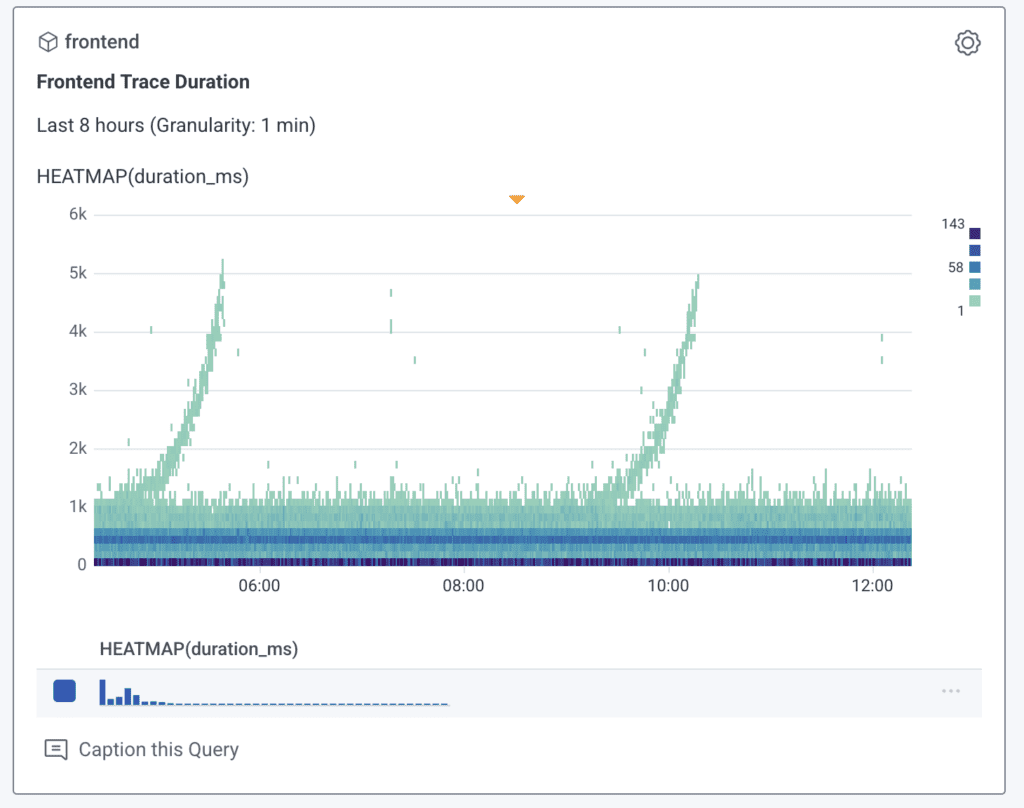

I’m going to tell you a story. It’s about this picture:

If you’ve ever seen a Honeycomb demo, you’ve probably seen this image. We love it, because it’s not only a great way to show a real-world problem—it’s something that plays well to our core strengths of enabling investigatory loops. Those little peaks you see in the heatmap represent slow requests in a frontend service that rise over time before suddenly resetting. They represent a small percentage of your users experiencing poor performance—and we all know what this means in the real world: lost sales, poor experience, and general malaise at the continued enshittification of software.

In a Honeycomb demo, we show you how easy it is to use our UI to understand what those spikes actually mean. You draw a box around them, and we run BubbleUp to detect anomalies by analyzing the trace data that’s backing this visualization, showing you what’s similar and what’s different between the spikes and the baseline. Eventually, you can drill down to the specific service and even method call that’s causing the problem. It’s a great demo, and it really shows the power of our platform.

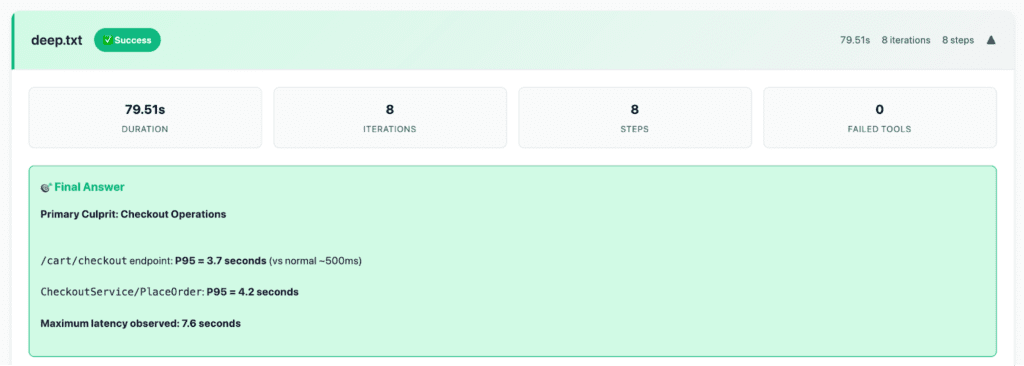

Last Friday, I showed a demo at our weekly internal Demo Day. It started with what I just showed you, and then I ran a single prompt through an AI agent that read as follows:

Please investigate the odd latency spikes in the frontend service that happen every four hours or so, and tell me why they’re happening.

The screenshot here elides the remainder of the response from the LLM (please find the entire text at the end of this post), but there’s a few things I want to call out. First, this wasn’t anything too special. The agent was something I wrote myself in a couple of days; it’s just an LLM calling tools in a loop. The model itself is off-the-shelf Claude Sonnet 4. The integration with Honeycomb is our new Model Context Protocol (MCP) server. It took 80 seconds, made eight tool calls, and not only did it tell me why those spikes happened, it figured it out in a pretty similar manner to how we’d tell you to do it with BubbleUp.

This isn’t a contrived example. I basically asked the agent the same question we’d ask you in a demo, and the agent figured it out with no additional prompts, training, or guidance. It effectively zero-shot a real-world scenario.

And it did it for sixty cents.

I want to be clear, this was perhaps the least optimized version of this workflow. Inference costs are only going down, and we can certainly make our MCP server more efficient. There are ways to reduce the amount of input tokens even more. We can play around with more tailored aggregations and function calls that return LLM-optimized query results. It’s an exciting new era!

It also should serve as a wakeup call to the entire industry. This is a seismic shift in how we should conceptualize observability tooling. If your product’s value proposition is nice graphs and easy instrumentation, you are le cooked. An LLM commoditizes the analysis piece, OpenTelemetry commoditizes the instrumentation piece. The moats are emptying.

I’m not gonna sit here and say this destroys the idea of humans being involved in the process, though. I don’t think that’s true. The rise of the cloud didn’t destroy the idea of IT. The existence of Rails doesn’t mean we don’t need server programmers. Productivity increases expand the map. There’ll be more software, of all shapes and sizes. We’re going to need more of everything.

The question, then, is: what does this require from us? Where does observability sit in a world where code is cheap, refactors are cheap, and analysis is a constant factor?

I’m gonna put a marker out there: the only thing that really matters is fast, tight feedback loops at every stage of development and operations. AI thrives on speed—it’ll outrun you every time. To succeed, you need tools that move at the speed of AI as well. The slower your analysis engine, the worse your results. LLMs will generate, test, and discard hypotheses faster than you ever can. They might be wrong a dozen times before they get it right, but again, we’re talking about fractional pennies here.

I don’t see this as a reason to despair, but as something to celebrate. The things that AI-assisted development and operations can lead us to are the things we’ve always been about. Honeycomb’s entire modus operandi is predicated on fast feedback loops, collaborative knowledge sharing, and treating everything as an experiment. We’re built for the future that’s here today, on a platform that allows us to be the best tool for tomorrow.

The exact contours of what this industry looks like in two, five, or ten years are out of my view. I don’t think there’s anyone that can credibly tell you what things will look like. I’m also not really in the business of making predictions. What I can say, though, is that I think we’re going to see a spectrum of AI assistance in development and operational tasks across the entire software development lifecycle:

- AI agents can run passively as you write and deploy code, suggesting fixes to improve code quality based on real-world observations from production.

- Emergent system behavior can be detected and addressed by AI, with unattended agents identifying impacts to users, investigating them, compiling reports, and presenting those to human operators for approval and tweaks before making changes.

- Bleeding-edge organizations can adopt entirely unattended SWE/SRE roles, powered by tools like Honeycomb and friends, optimizing and writing software to meet business needs and goals.

In all of these cases, what matters most is speed. Traditional monitoring and observability tools don’t have what it takes to compete in this world. Pretty dashboards, pre-built alerts, and magic integrations aren’t sufficient for the future we’re building today. Sub-second query performance, unified data storage, and collaborative workflows between humans and AI are the keys to success in the future of observability.

Let’s go do something cool.

Addendum: Here’s the full output from the investigation screenshot earlier.

Perfect! Now I have a clear picture of what’s happening. Based on my investigation, I can explain the latency spikes you’re seeing in your frontend service.

🔍 Investigation Results: Frontend Latency Spikes

Root Cause Identified: Checkout Service Performance Issues

The latency spikes you’re experiencing every ~4 hours are NOT actually periodic—this appears to be a load testing or synthetic traffic pattern rather than a scheduled system issue. Here’s what I found:

📊 Key Findings:

- Primary Culprit: Checkout Operations

- /cart/checkout endpoint: P95 = 3.7 seconds (vs normal ~500ms)

- CheckoutService/PlaceOrder: P95 = 4.2 seconds

- Maximum latency observed: 7.6 seconds

- Spike Pattern Analysis:

- Spikes are concentrated in checkout operations only

- Other endpoints remain relatively stable

- 485 slow checkout operations in the last 24 hours

- Pattern suggests load testing or batch checkout processing

- Spikes are concentrated in checkout operations only

- Downstream Service Impact:

- CheckoutService/PlaceOrder: P95 = 4.6 seconds

- getDiscounts operation: P95 = 4.2 seconds

- ShippingService/ShipOrder: P95 = 4.0 seconds

- CheckoutService/PlaceOrder: P95 = 4.6 seconds

🕵️ Evidence from Trace Analysis:

Looking at the sample traces, I can see:

- User Agent Pattern: python-requests/2.28.2 and python-requests/2.31.0

- Consistent Client IPs: 52.45.250.5 and internal IPs

- High Cart Values: $6,861, $7,036, $259 (suggesting test scenarios)

- Same User IDs: Multiple requests from user 20109 with identical cart totals

💡 Why This is Happening:

Most Likely Cause: Load Testing or Synthetic Monitoring

- The pattern suggests automated testing rather than real user traffic

- Python requests library indicates scripted traffic

- High cart values and repeated scenarios point to test data

- The “every 4 hours” pattern is likely your load testing schedule

Technical Root Cause:

- Checkout service bottleneck during high-volume operations

- Discount calculation delays (4.2s P95)

- Shipping service delays during order processing

- Possible database contention or external API timeouts

🎯 Recommendations:

- Immediate Actions:

- Verify if this is load testing—check with your testing team

- Monitor checkout service resources during spike periods

- Check discount service performance and external dependencies

- Performance Optimization:

- Optimize discount calculation logic

- Add caching for shipping calculations

- Implement circuit breakers for external payment/shipping APIs

- Scale checkout service if this represents real user load

- Optimize discount calculation logic

- Monitoring Improvements:

- Separate alerts for synthetic vs. real user traffic

- Add SLI tracking specifically for checkout operations

- Monitor downstream dependencies (payment, shipping, discount services)

🚨 Action Items:

- Confirm if the 4-hour pattern matches your load testing schedule

- If it’s real traffic, investigate checkout service capacity and dependencies

- Consider implementing checkout-specific performance monitoring and alerting

The good news is that your other services are performing well—this appears to be an isolated issue with the checkout flow, likely exacerbated by load testing scenarios.