7th August 2025

I’ve had preview access to the new GPT-5 model family for the past two weeks (see related video) and have been using GPT-5 as my daily-driver. It’s my new favorite model. It’s still an LLM—it’s not a dramatic departure from what we’ve had before—but it rarely screws up and generally feels competent or occasionally impressive at the kinds of things I like to use models for.

I’ve collected a lot of notes over the past two weeks, so I’ve decided to break them up into a series of posts. This first one will cover key characteristics of the models, how they are priced and what we can learn from the GPT-5 system card.

Key model characteristics

Let’s start with the fundamentals. GPT-5 in ChatGPT is a weird hybrid that switches between different models. Here’s what the system card says about that (my highlights in bold):

GPT-5 is a unified system with a smart and fast model that answers most questions, a deeper reasoning model for harder problems, and a real-time router that quickly decides which model to use based on conversation type, complexity, tool needs, and explicit intent (for example, if you say “think hard about this” in the prompt). [...] Once usage limits are reached, a mini version of each model handles remaining queries. In the near future, we plan to integrate these capabilities into a single model.

GPT-5 in the API is simpler: it’s available as three models—regular, mini and nano—which can each be run at one of four reasoning levels: minimal (a new level not previously available for other OpenAI reasoning models), low, medium or high.

The models have an input limit of 272,000 tokens and an output limit (which includes invisible reasoning tokens) of 128,000 tokens. They support text and image for input, text only for output.
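Because reasoning tokens count against the output limit, a long chain of thought leaves less room for the visible answer. Here is a minimal sketch of that budgeting, using only the two limits quoted above; the helper name `fits_limits` is hypothetical and not part of any OpenAI library.

```python
# Limits quoted in the post; fits_limits is a hypothetical helper for illustration.
INPUT_LIMIT = 272_000
OUTPUT_LIMIT = 128_000  # shared by invisible reasoning tokens and visible output


def fits_limits(input_tokens: int, reasoning_tokens: int, visible_output_tokens: int) -> bool:
    """Return True if a request stays within both quoted limits."""
    output_tokens = reasoning_tokens + visible_output_tokens
    return input_tokens <= INPUT_LIMIT and output_tokens <= OUTPUT_LIMIT


# Input and output limits together give the total token budget for one request.
total_window = INPUT_LIMIT + OUTPUT_LIMIT
print(total_window)
print(fits_limits(200_000, 50_000, 10_000))
print(fits_limits(10_000, 120_000, 10_000))  # reasoning alone nearly fills the output limit
```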

I’ve mainly explored full GPT-5. My verdict: it’s just good at stuff. It doesn’t feel like a dramatic leap ahead from other LLMs but it exudes competence—it rarely messes up, and frequently impresses me. I’ve found it to be a very sensible default for everything that I want to do. At no point have I found myself wanting to re-run a prompt against a different model to try and get a better result.

Here are the OpenAI model pages for GPT-5, GPT-5 mini and GPT-5 nano. Knowledge cut-off is September 30th 2024 for GPT-5 and May 30th 2024 for GPT-5 mini and nano.

Position in the OpenAI model family

The three new GPT-5 models are clearly intended as a replacement for most of the rest of the OpenAI line-up. This table from the system card is useful, as it shows how they see the new models fitting in:

| Previous model | GPT-5 model |
|---|---|
| GPT-4o | gpt-5-main |
| GPT-4o-mini | gpt-5-main-mini |
| OpenAI o3 | gpt-5-thinking |
| OpenAI o4-mini | gpt-5-thinking-mini |
| GPT-4.1-nano | gpt-5-thinking-nano |
| OpenAI o3 Pro | gpt-5-thinking-pro |

That “thinking-pro” model is currently only available via ChatGPT where it is labelled as “GPT-5 Pro” and limited to the $200/month tier. It uses “parallel test time compute”.

The only capabilities not covered by GPT-5 are audio input/output and image generation. Those remain covered by models like GPT-4o Audio and GPT-4o Realtime and their mini variants and the GPT Image 1 and DALL-E image generation models.

Pricing is aggressively competitive

The pricing is aggressively competitive with other providers.

- GPT-5: $1.25/million for input, $10/million for output

- GPT-5 Mini: $0.25/m input, $2.00/m output

- GPT-5 Nano: $0.05/m input, $0.40/m output

GPT-5 is priced at half the input cost of GPT-4o, and maintains the same price for output. Those invisible reasoning tokens count as output tokens so you can expect most prompts to use more output tokens than their GPT-4o equivalent (unless you set reasoning effort to “minimal”).
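To see how reasoning tokens affect the bill, here is a rough cost sketch using the per-million prices listed above. The dictionary keys and the `prompt_cost` helper are my own illustrative names, and the token counts in the example are made up.

```python
# USD per million tokens (input, output), copied from the price list in the post.
PRICES = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}


def prompt_cost(model: str, input_tokens: int, reasoning_tokens: int, visible_output_tokens: int) -> float:
    """Estimate the USD cost of one prompt; reasoning tokens are billed as output."""
    input_price, output_price = PRICES[model]
    billed_output = reasoning_tokens + visible_output_tokens
    return (input_tokens * input_price + billed_output * output_price) / 1_000_000


# Hypothetical prompt: 10,000 input tokens, 2,000 reasoning tokens, 500 visible output tokens.
with_reasoning = prompt_cost("gpt-5", 10_000, 2_000, 500)
without_reasoning = prompt_cost("gpt-5", 10_000, 0, 500)
print(f"with reasoning: ${with_reasoning:.4f}, without: ${without_reasoning:.4f}")
```

In this made-up example the invisible reasoning tokens account for $0.02 of a $0.0375 total, more than the input and the visible answer combined.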

The discount for token caching is significant too: 90% off on input tokens that have been used within the previous few minutes. This is particularly material if you are implementing a chat UI where the same conversation gets replayed every time the user adds another prompt to the sequence.
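A quick sketch of why this matters for chat UIs: each turn replays the whole history as input, so input tokens grow roughly quadratically with conversation length. The code assumes that every previously sent token is a cache hit at the 90% discount, which is a simplification of mine (real caching has its own eligibility rules), and the function name is hypothetical.

```python
INPUT_PRICE = 1.25     # USD per million input tokens for GPT-5, from the post
CACHE_DISCOUNT = 0.90  # "90% off" for recently used input tokens, from the post


def conversation_input_cost(turn_tokens: list[int], cached: bool = True) -> float:
    """Input cost in USD for a chat where every turn replays the full history.

    turn_tokens[i] is the number of new tokens added to the conversation at turn i.
    Assumes (optimistically) that all replayed history is served from the cache.
    """
    total = 0.0
    history = 0
    for new_tokens in turn_tokens:
        cached_tokens = history if cached else 0
        fresh_tokens = history + new_tokens - cached_tokens
        effective_tokens = fresh_tokens + cached_tokens * (1 - CACHE_DISCOUNT)
        total += effective_tokens * INPUT_PRICE / 1_000_000
        history += new_tokens
    return total


# Hypothetical conversation: ten turns, each adding 1,000 tokens.
turns = [1_000] * 10
print(f"uncached: ${conversation_input_cost(turns, cached=False):.4f}")
print(f"cached:   ${conversation_input_cost(turns, cached=True):.4f}")
```

Under these assumptions the ten-turn conversation costs about $0.069 in input tokens without caching and about $0.018 with it.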

Here’s a comparison table I put together showing the new models alongside the most comparable models from OpenAI’s competition:

| Model | Input $/m | Output $/m |
|---|---|---|
| Claude Opus 4.1 | 15.00 | 75.00 |
| Claude Sonnet 4 | 3.00 | 15.00 |
| Grok 4 | 3.00 | 15.00 |
| Gemini 2.5 Pro (>200,000) | 2.50 | 15.00 |
| GPT-4o | 2.50 | 10.00 |
| GPT-4.1 | 2.00 | 8.00 |
| o3 | 2.00 | 8.00 |
| Gemini 2.5 Pro (<200,000) | 1.25 | 10.00 |
| GPT-5 | 1.25 | 10.00 |
| o4-mini | 1.10 | 4.40 |
| Claude 3.5 Haiku | 0.80 | 4.00 |
| GPT-4.1 mini | 0.40 | 1.60 |
| Gemini 2.5 Flash | 0.30 | 2.50 |
| Grok 3 Mini | 0.30 | 0.50 |
| GPT-5 Mini | 0.25 | 2.00 |
| GPT-4o mini | 0.15 | 0.60 |
| Gemini 2.5 Flash-Lite | 0.10 | 0.40 |
| GPT-4.1 Nano | 0.10 | 0.40 |
| Amazon Nova Lite | 0.06 | 0.24 |
| GPT-5 Nano | 0.05 | 0.40 |
| Amazon Nova Micro | 0.035 | 0.14 |

(Here’s a good example of a GPT-5 failure: I tried to get it to output that table sorted itself but it put Nova Micro as more expensive than GPT-5 Nano, so I prompted it to “construct the table in Python and sort it there” and that fixed the issue.)
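The "sort it in Python" approach looks roughly like the sketch below. This is my own illustration rather than the code GPT-5 produced, and it uses just a handful of rows from the table to keep it short.

```python
# (model, input $/m, output $/m) for a few rows copied from the table above.
rows = [
    ("GPT-5 Nano", 0.05, 0.40),
    ("Amazon Nova Micro", 0.035, 0.14),
    ("GPT-5", 1.25, 10.00),
    ("Amazon Nova Lite", 0.06, 0.24),
    ("GPT-5 Mini", 0.25, 2.00),
]

# Sort numerically by input price, then output price, most expensive first.
sorted_rows = sorted(rows, key=lambda row: (row[1], row[2]), reverse=True)

print("| Model | Input $/m | Output $/m |")
print("|---|---|---|")
for name, input_price, output_price in sorted_rows:
    print(f"| {name} | {input_price} | {output_price} |")
```

Comparing the prices as numbers rather than as text is what keeps Nova Micro (0.035) below GPT-5 Nano (0.05).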

More notes from the system card

As usual, the system card is vague on what went into the training data. Here’s what it says:

Like OpenAI’s other models, the GPT-5 models were trained on diverse datasets, including information that is publicly available on the internet, information that we partner with third parties to access, and information that our users or human trainers and researchers provide or generate. [...] We use advanced data filtering processes to reduce personal information from training data.

I found this section interesting, as it reveals that writing, code and health are three of the most common use-cases for ChatGPT. This explains why so much effort went into health-related questions, for both GPT-5 and the recently released OpenAI open weight models.

We’ve made significant advances in reducing hallucinations, improving instruction following, and minimizing sycophancy, and have leveled up GPT-5’s performance in three of ChatGPT’s most common uses: writing, coding, and health. All of the GPT-5 models additionally feature safe-completions, our latest approach to safety training to prevent disallowed content.

Safe-completions is later described like this:

Large language models such as those powering ChatGPT have traditionally been trained to either be as helpful as possible or outright refuse a user request, depending on whether the prompt is allowed by safety policy. [...] Binary refusal boundaries are especially ill-suited for dual-use cases (such as biology or cybersecurity), where a user request can be completed safely at a high level, but may lead to malicious uplift if sufficiently detailed or actionable. As an alternative, we introduced safe-completions: a safety-training approach that centers on the safety of the assistant’s output rather than a binary classification of the user’s intent. Safe-completions seek to maximize helpfulness subject to the safety policy’s constraints.

So instead of straight up refusals, we should expect GPT-5 to still provide an answer but moderate that answer to avoid it including “harmful” content.

OpenAI have a paper about this which I haven’t read yet (I didn’t get early access): From Hard Refusals to Safe-Completions: Toward Output-Centric Safety Training.

Sycophancy gets a mention, unsurprising given their high profile disaster in April. They’ve worked on this in the core model:

System prompts, while easy to modify, have a more limited impact on model outputs relative to changes in post-training. For GPT-5, we post-trained our models to reduce sycophancy. Using conversations representative of production data, we evaluated model responses, then assigned a score reflecting the level of sycophancy, which was used as a reward signal in training.

They claim impressive reductions in hallucinations. In my own usage I’ve not spotted a single hallucination yet, but that’s been true for me for Claude 4 and o3 recently as well—hallucination is so much less of a problem with this year’s models.

One of our focuses when training the GPT-5 models was to reduce the frequency of factual hallucinations. While ChatGPT has browsing enabled by default, many API queries do not use browsing tools. Thus, we focused both on training our models to browse effectively for up-to-date information, and on reducing hallucinations when the models are relying on their own internal knowledge.

The section about deception also incorporates the thing where models sometimes pretend they’ve completed a task that defeated them:

We placed gpt-5-thinking in a variety of tasks that were partly or entirely infeasible to accomplish, and rewarded the model for honestly admitting it can not complete the task. [...]

In tasks where the agent is required to use tools, such as a web browsing tool, in order to answer a user’s query, previous models would hallucinate information when the tool was unreliable. We simulate this scenario by purposefully disabling the tools or by making them return error codes.

Prompt injection in the system card

There’s a section about prompt injection, but it’s pretty weak sauce in my opinion.

Two external red-teaming groups conducted a two-week prompt-injection assessment targeting system-level vulnerabilities across ChatGPT’s connectors and mitigations, rather than model-only behavior.

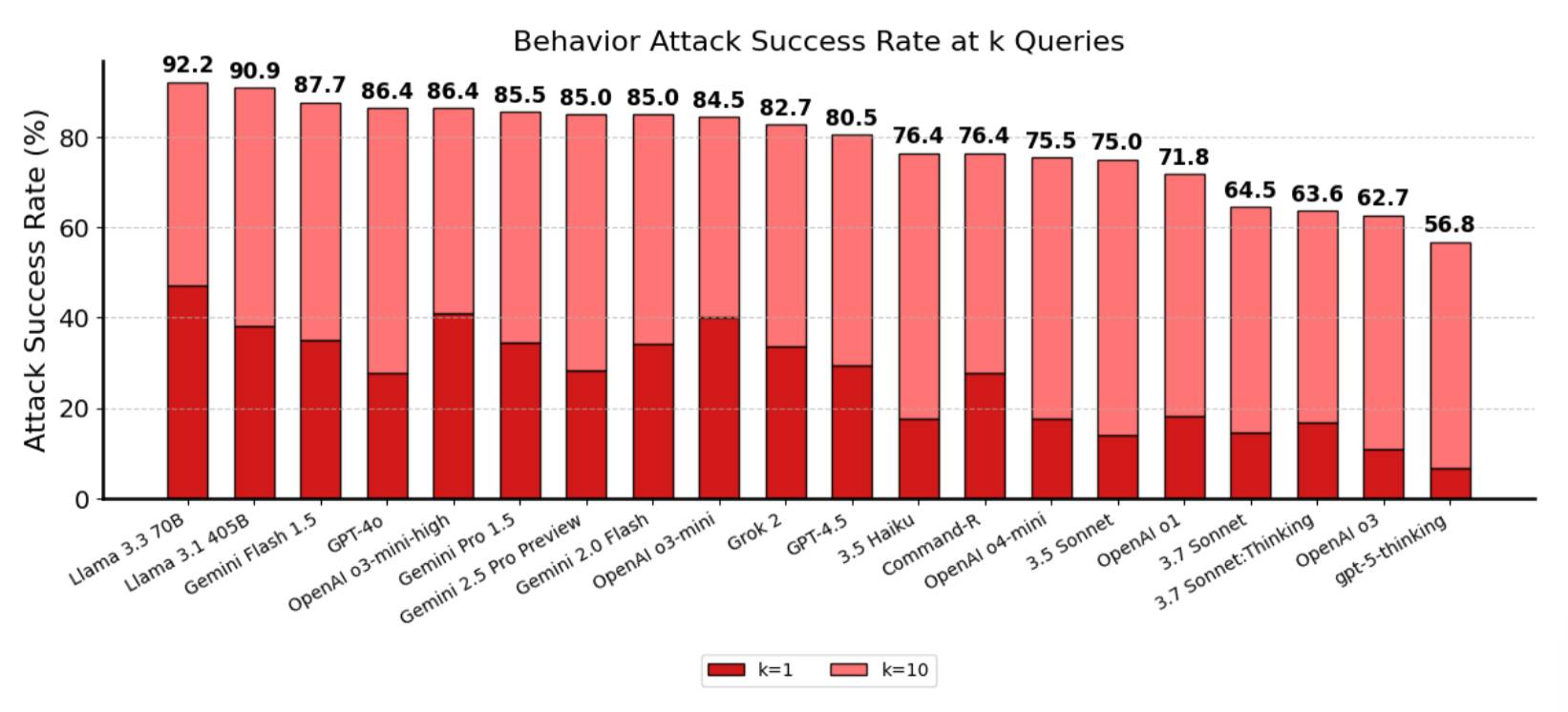

Here’s their chart showing how well the model scores against the rest of the field. It’s an impressive result in comparison—56.8 attack success rate for gpt-5-thinking, where Claude 3.7 scores in the 60s (no Claude 4 results included here) and everything else is 70% plus:

On the one hand, a 56.8% attack rate is cleanly a big improvement against all of those other models.

But it’s also a strong signal that prompt injection continues to be an unsolved problem! That means that more than half of those k=10 attacks (where the attacker was able to try up to ten times) got through.
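For a rough feel of what a k=10 number implies, suppose each attempt were an independent trial with the same success probability p. That is a simplifying assumption of mine, not something the system card states, and real attackers adapt between attempts. Under that assumption, 1 - (1 - p)^10 = 0.568, which this sketch solves for p:

```python
K_ATTEMPTS = 10
SUCCESS_AT_K = 0.568  # gpt-5-thinking attack success rate at k=10, from the chart


def per_attempt_rate(success_at_k: float, k: int) -> float:
    """Solve 1 - (1 - p)**k = success_at_k for p, assuming independent attempts."""
    return 1 - (1 - success_at_k) ** (1 / k)


def success_within(p: float, k: int) -> float:
    """Probability that at least one of k independent attempts succeeds."""
    return 1 - (1 - p) ** k


p = per_attempt_rate(SUCCESS_AT_K, K_ATTEMPTS)
print(f"implied per-attempt success rate: {p:.1%}")
print(f"check, success within 10 attempts: {success_within(p, K_ATTEMPTS):.1%}")
```

Under this toy model a per-attempt success rate of only around 8% is enough to produce the 56.8% figure, which is why a persistent attacker remains a serious problem.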

Don’t assume prompt injection isn’t going to be a problem for your application just because the models got better.

Thinking traces in the API

I had initially thought that my biggest disappointment with GPT-5 was that there’s no way to get at those thinking traces via the API... but that turned out not to be true. The following curl command demonstrates that the responses API "reasoning": {"summary": "auto"} is available for the new GPT-5 models:

    curl https://api.openai.com/v1/responses \
      -H "Authorization: Bearer $(llm keys get openai)" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "gpt-5",
        "input": "Give me a one-sentence fun fact about octopuses.",
        "reasoning": {"summary": "auto"}
      }'

Here’s the response from that API call.

Without that option the API will often provide a lengthy delay while the model burns through thinking tokens until you start getting back visible tokens for the final response.

OpenAI offer a new reasoning_effort=minimal option which turns off most reasoning so that tokens start to stream back to you as quickly as possible.
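Here is a small sketch of how the two options could be combined into a request body for the same endpoint as the curl command above. It only builds and prints the JSON and makes no network call. One assumption to flag: I am placing the effort setting inside the `reasoning` object as `"effort"`, which is how I understand the Responses API to expose it; the post itself only shows the `"summary"` key. The `build_request` helper is hypothetical.

```python
import json


def build_request(prompt, model="gpt-5", effort=None, summary=None):
    """Build a JSON-serializable body for POST /v1/responses (hypothetical helper)."""
    body = {"model": model, "input": prompt}
    reasoning = {}
    if effort is not None:
        # Assumption: effort is nested under "reasoning" in the Responses API.
        reasoning["effort"] = effort
    if summary is not None:
        reasoning["summary"] = summary
    if reasoning:
        body["reasoning"] = reasoning
    return body


prompt = "Give me a one-sentence fun fact about octopuses."

# Same body as the curl example: default effort, with reasoning summaries.
print(json.dumps(build_request(prompt, summary="auto"), indent=2))

# Minimal reasoning, so visible tokens should start streaming sooner.
print(json.dumps(build_request(prompt, effort="minimal"), indent=2))
```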

And some SVGs of pelicans

Naturally I’ve been running my “Generate an SVG of a pelican riding a bicycle” benchmark. I’ll actually spend more time on this in a future post—I have some fun variants I’ve been exploring—but for the moment here’s the pelican I got from GPT-5 running at its default “medium” reasoning effort:

It’s pretty great! Definitely recognizable as a pelican, and one of the best bicycles I’ve seen yet.

Here’s GPT-5 mini:

And GPT-5 nano:

Comments

It's cool and I'm glad it sounds like it's getting more reliable, but given the types of things people have been saying GPT-5 would be for the last two years you'd expect GPT-5 to be a world-shattering release rather than incremental and stable improvement.

It does sort of give me the vibe that the pure scaling maximalism really is dying off though. If the approach is focused on writing better routers, tooling, comboing specialized submodels on tasks, then it feels like there's a search for new ways to improve performance (and lower cost), suggesting the other established approaches weren't working. I could totally be wrong, but I feel like if just throwing more compute at the problem was working OpenAI probably wouldn't be spending much time on optimizing the user routing on currently existing strategies to get marginal improvements on average user interactions.

I've been pretty negative on the thesis of only needing more data/compute to achieve AGI with current techniques though, so perhaps I'm overly biased against it. If there's one thing that bothers me in general about the situation though, it's that it feels like we really have no clue what the actual status of these models is because of how closed off all the industry labs have become + the feeling of not being able to expect anything other than marketing language from the presentations. I suppose that's inevitable with the massive investments though. Maybe they've got some massive earthshattering model release coming out next, who knows.

It reminds me of the latest, most advanced steam locomotives from the beginning of the 20th century.

They became extremely complex and sophisticated machines to squeeze a few more percent of efficiency compared to earlier models. Then diesel, and eventually electric locomotives arrived, much better and also much simpler than those late steam monsters.

I feel like that's where we are with LLMs: extremely smart engineering to marginally improve quality, while increasing cost and complexity greatly. At some point we'll need a different approach if we want a world-shattering release.

By nyrikki 2025-08-08 14:20

Even more connections: the UP's Big Boy was designed to replace double-header or helper engines to reduce labor costs, because they were using the cheapest bituminous coal.

It wasn't until after WWII, with coal and labor costs and the development of good diesel-electric locomotives, that things changed.

The UP expended huge amounts of money to daylight tunnels and replace bridges in a single year to support the Big Boys.

Just like AI, it was meant to replace workers to improve investors' returns.

By anshumankmr 2025-08-08 10:47

Just to add to this, if we see F1 cars as a way to measure the cutting edge of cars being developed, we can see cars haven't become insanely faster than they were 10-20 years ago, just more "efficient", reliable and definitely safer, along with quirks like DRS. Of course shaving off a second or two from lap times is notable, but not an insane delta like, say, if you compared a car from a post-2000s GP to a 1950s GP.

I feel after a while we will have specialized LLMs great for one particular task down the line as well, cut off updates, 0.something better than the SOTA on some benchmark and as compute gets better, cheaper to run at scale.

By Anonyneko 2025-08-08 11:38

To be fair, the speed of F1 cars is mostly limited by regulations that are meant to make the sport more competitive and entertaining. With fewer restrictions on engines and aerodynamics we could have had much faster cars within a year.

But even setting safety issues aside, the insane aero wash would make it nearly impossible to follow another car, let alone overtake it, hence the restrictions and the big "rule resets" every few years that slow down the cars, compensating for all of the tricks the teams have found over that time.

(I agree with the general thoughts on the state of LLMs though, just a bit too much into open-wheel cars going vroom vroom in circles for two hours at a time)

By jakubmazanec 2025-08-09 15:32

> if we see F1 cars a way to measure the cutting edge of cars being developed

As other commenters noted, F1 regulations are made to make the racing competitive and interesting to watch. But you can design a car that would be much faster [1] and even undrivable for humans due to large Gs.

> we can see cars haven't become insanely faster than they were 10-20 years ago

I don't think this is a good example, because the regulations in F1 are largely focused on slowing the cars.

It's an engineering miracle that 2025 cars can be competitive with previous generation cars on so many tracks.

By anshumankmr 2025-08-08 17:16

> I don't think this is a good example, because the regulations in F1 are largely focused on slowing the cars.

I do agree with this, wish we got to see the V10s race with slicks :(

The quiet revolution is happening in tool use and multimodal capabilities. Moderate incremental improvements on general intelligence, but dramatic improvements on multi-step tool use and ability to interact with the world (vs 1 year ago), will eventually feed back into general intelligence.

By thomasfromcdnjs 2025-08-08 3:54

100%

1) Build a directory of X (a gazillion) amount of tools (just functions) that models can invoke with standard pipeline behavior (parallel, recursion, conditions etc)

2) Solve the "too many tools to select from" problem (a search problem), adjacently really understand the intent (linguistics/ToM) of the user's or agent's request

3) Someone to pay for everything

4) ???

The future is already here in my opinion, the LLMs are good-enough™, it's just the ecosystem needs to catch up. Companies like Zapier or whatever, taken to their logical extreme, connecting any software to anything (not just SaaS products), combined with an LLM will be able to do almost anything.

Even better basic tool composition around language will make its simple replies better too.

By darkhorse222 2025-08-07 20:31

Completely agree. General intelligence is a building block. By chaining things together you can achieve meta programming. The trick isn't to create one perfect block but to build a variety of blocks and make one of those blocks a block-builder.

By SecretDreams 2025-08-08 2:47

> The trick isn't to create one perfect block but to build a variety of blocks and make one of those blocks a block-builder.

This has some Egyptian pyramids building vibes. I hope we treat these AGIs better than the deal the pyramid slaves got.

By z0r 2025-08-08 3:32

We don't have AGI and the pyramids weren't built by slaves.

By copularent 2025-08-08 11:33

I think we have reached a user schism in terms of benefits going forward.

I am completely floored by GPT-5. I only tried it a half hour ago and have a whole new data analysis pipeline. I thought it must be hallucinating badly at first but all the papers it referenced are real and I had just never heard of these concepts.

This is for an area that has 200 papers on arxiv and I have read all of them so thought I knew this area well.

I don't see how the average person benefits much going forward though. They simply don't have questions to ask in order to have the model display its intelligence.

By gnowlescentic 2025-08-08 15:43

What do you think are the chances that they used data collected from users for the past couple of years and are propping up performance in those use cases instead of the promised generality?

By coolKid721 2025-08-07 20:47

[flagged]

By dang 2025-08-07 20:52

Can you please make your substantive points thoughtfully? Thoughtful criticism is welcome but snarky putdowns and one-liners, etc., degrade the discussion for everyone.

You've posted substantive comments in other threads, so this should be easy to fix.

If you wouldn't mind reviewing https://news.ycombinator.com/newsguidelines.html and taking the intended spirit of the site more to heart, we'd be grateful.

By maoberlehner 2025-08-08 4:16

I mostly use Gemini 2.5 Pro. I have a “you are my editor” prompt asking it to proofread my texts. Recently it pointed out two typos in two different words that just weren’t there. Indeed, the two words each had a typo but not the one pointed out by Gemini.

The real typos were random missing letters. But the typos Gemini hallucinated were ones that are very common typos made in those words.

The only thing transformer based LLMs can ever do is _faking_ intelligence.

Which for many tasks is good enough. Even in my example above, the corrected text was flawless.

But for a whole category of tasks, LLMs without oversight will never be good enough because there simply is no real intelligence in them.

I'll show you a few misspelled words and you tell me (without using any tools or thinking it through) which bits in the utf8 encoded bytes are incorrect. If you're wrong, I'll conclude you are not intelligent.

LLMs don't see letters, they see tokens. This is a foundational attribute of LLMs. When you point out that the LLM does not know the number of R's in the word "Strawberry", you are not exposing the LLM as some kind of sham, you're just admitting to being a fool.

By LetsGetTechnicl 2025-08-08 13:15

Damn, if only something called a "language model" could model language accurately, let alone live up to its creators' claims that it possesses near-human intelligence. But yeah we can call getting some basic facts a "feature not a bug" if you want

So people that can't read or write have no language? If you don't know an alphabet and its rules, you won't know how many letters are in words. Does that make you unable to model language accurately?

By tripzilch 2025-08-11 12:53

So first off, people who _can't_ read or write have a certain disability (blindness or developmental, etc). That's not a reasonable comparison for LLMs/AI (especially since text is the main modality of an LLM).

I'm assuming you meant to ask about people who haven't _learned_ to read or write, but would otherwise be capable.

Is your argument then, that a person who hasn't learned to read or write is able to model language as accurately as one who did?

Wouldn't you say that someone who has read a whole ton of books would maybe be a bit better at language modelling?

Also, perhaps most importantly: GPT (and pretty much any LLM I've talked to) does know the alphabet and its rules. It knows. Ask it to recite the alphabet. Ask it about any kind of grammatical or lexical rules. It knows all of it. It can also chop up a word from tokens into letters to spell it correctly, it knows those rules too. Now ask it about Chinese and Japanese characters, ask it any of the rules related to those alphabets and languages. It knows all the rules.

This to me shows the problem is that it's mainly incapable of reasoning and putting things together logically, not so much that it's trained on something that doesn't _quite_ look like letters as we know them. Sure it might be slightly harder to do, but it's not actually hard, especially not compared to the other things we expect LLMs to be good at. But especially especially not compared to the other things we expect people to be good at if they are considered "language experts".

If (smart/dedicated) humans can easily learn the Chinese, Japanese, Latin and Russian alphabets, then why can't LLMs learn how tokens relate to the Latin alphabet?

Remember that tokens were specifically designed to be easier and more regular to parse (encode/decode) than the encodings used in human languages ...

By maoberlehner 2025-08-08 15:48

So LLMs don’t know the alphabet and its rules?

You know about ultraviolet but that doesn't help you see ultraviolet light

By maoberlehner 2025-08-08 20:49

Yes. And I know I can’t see it and don’t pretend I can, and that it in fact is green.

By LordDragonfang 2025-08-08 23:38

Not green, no.

But actually, you can see an intense enough source of (monochromatic) near-UV light, our lenses only filter out the majority of it.

And if you did, your brain would hallucinate it as purplish-blueish white. Because that's the closest color to those inputs based on what your neural network (brain) was trained on. It's encountering something uncommon, so it guesses and presents it as fact.

From this, we can determine either that you (and indeed all humans) are not actually intelligent, or alternatively, intelligence and cognition are complicated and you can't conclude its absence from the first time someone behaves in a way you're not trained to expect from your experience of intelligence.

Being confused as to how LLMs see tokens is just a factual error.

I think the more concerning error GP makes is how he makes deductions on fundamental nature of the intelligence of LLMs by looking at "bugs" in current iterations of LLMs. It's like looking at a child struggling to learn how to spell, and making broad claims like "look at the mistakes this child made, humans will never attain any __real__ intelligence!"

So yeah at this point I'm often pessimistic whether humans have "real" intelligence or not. Pretty sure LLMs can spot the logical mistakes in his claims easily.

By maoberlehner 2025-08-08 15:45

Your explanation perfectly captures another big difference between human / mammal intelligence and LLM intelligence: A child can make mistakes and (few shot) learn. An LLM can’t.

And even a child struggling with spelling won’t make a mistake like the one I have described. It will spell things wrong and not even catch the spelling mistake. But it won’t pretend and insist there is a mistake where there isn’t (okay, maybe it will, but only to troll you).

Maybe talking about “real” intelligence was not precise enough and it’s better to talk about “mammal like intelligence.”

I guess there is a chance LLMs can be trained to a level where all the questions where there is a correct answer for (basically everything that can be benchmarked) will be answered correctly. Would this be incredibly useful and make a lot of jobs obsolete? Yes. Still a very different form of intelligence.

By LordDragonfang 2025-08-08 23:43

> A child can make mistakes and (few shot) learn. A LLM can’t.

Considering that we literally call the process of giving an llm several attempts at a problem "few-shot reasoning", I do not understand your reasoning here.

And an LLM absolutely can "gain or acquire knowledge of or skill in (something)" for things within its context window (i.e. learning). And then you can bake those understandings in by making a LoRA, or further training.

If this is really your distinction that makes intelligence, the only difference between llms and human brains is that human brains have a built-in mechanism to convert short-term memory to long-term, and llms haven't fully evolved that.

By tripzilch 2025-08-11 12:39

If I had learned to read utf8 bytes instead of Latin alphabet, this would be trivial. In fact give me a (paid) week to study utf8 for reading and I am sure I could do it. (yes I already know how utf8 works)

And the token/strawberry thing is a non-excuse. They just can't count. I can count the number of syllables in a word, regardless of how it's spelled, that's also not based on letters. Or if you want a sub-letter equivalent, I could also count the number of serifs, dots or curves in a word.

It's really not so much that the strawberry thing is a "gotcha", or easily explained by "they see tokens instead", because the same reasoning errors happen all the time in LLMs also in places where "it's because of tokens" can't possibly be the explanation. It's just that the strawberry thing is one of the easiest ways to show it just can't reason reliably.

> When you point out that the LLM does not know the number of R's in the word "Strawberry", you are not exposing the LLM as some kind of sham, you're just admitting to being a fool.

I'm sorry but that's not reasonable. Yes, I understand what you mean on an architectural level, but if a product is being deployed to the masses you are the fool if you expect every user to have a deep architectural understanding of it.

If it's being sold as "this model is a PhD-level expert on every topic in your pocket", then the underlying technical architecture and its specific foibles are irrelevant. What matters is the claims about what it's capable of doing and its actual performance.

Would it matter if GPT-5 couldn't count the number of r's in a specific word if the marketing claims being made around it were more grounded? Probably not. But that's not what's happening.

By withinboredom 2025-08-08 15:54

> If it's being sold as "this model is a PhD-level expert on every topic in your pocket",

The thing that pissed me off about them using this line is that they prevented the people who actually pull that off one day from using it.

By maoberlehner 2025-08-08 15:29

I think we’re saying the same thing using different words. What LLMs do and what human brains do are very different things. Therefore human / biological intelligence is a different thing than LLM intelligence.

Is this phrasing something you can agree with?

I had this too last week. It pointed out two errors that simply weren’t there. Then completely refused to back down and doubled down on its own certainty, until I sent it a screenshot of the original prompt. Kind of funny.

By BartjeD 2025-08-08 9:37

[flagged]

By morganf 2025-08-08 12:07

One of my main uses for LLMs is copy editing and it is incredible to me how terrible all of them are at that.

Do you really think that an architecture that struggles to count the r's in strawberry is a good choice for proofreading? It perceives words very differently from us.

Counting letters in words and identifying when words are misspelled are two different tasks - it can be good at one and bad at the other.

Interestingly, spell checking is something models have been surprisingly bad at in the past - I remember being shocked at how bad Claude 3 was at spotting typos.

This has changed with Claude 4 and o3 from what I've seen - another example of incremental model improvements swinging over a line in terms of things they can now be useful for.

Shill harder, Simon!

Otherwise they may refuse to ask you back for their next PR chucklefest.

Wasn't expecting a "you're a shill" accusation to show up on a comment where I say that LLMs used to suck at spell check but now they can just about do it.

By gnowlescentic 2025-08-08 15:41

So 2 trillion dollars to do what Word could do in 1995... and trying to promote that as an advancement is not propaganda? Sure, let's double the amount of resources a couple more times, who knows what it will be able to take on after mastering spelling.

By maoberlehner 2025-08-08 11:46

Yes, actually I think it works really well for me considering that I’m not a native speaker and one thing I’m after is correcting technically correct but non-idiomatic wording.

> It does sort of give me the vibe that the pure scaling maximalism really is dying off though

I think the big question is if/when investors will start giving money to those who have been predicting this (with evidence) and trying other avenues.

Really though, why put all your eggs in one basket? That's what I've been confused about for a while. Why fund yet another LLMs-to-AGI startup? The space is saturated with big players and has been for years. Even if LLMs could get there, that doesn't mean something else won't get there faster and for less. It also seems you'd want a backup in order to avoid popping the bubble. Technology S-curves and all that still apply to AI.

Though I'm similarly biased, but so is everyone I know with a strong math and/or science background (I even mentioned it in my thesis more than a few times lol). "Scaling is all you need" just doesn't check out.

I started such an alternative project just before GPT-3 was released, it was really promising (lots of neuroscience inspired solutions, pretty different to Transformers) but I had to put it on hold because the investors I approached seemed like they would only invest in LLM-stuff. Now a few years later I'm trying to approach investors again, only to find now they want to invest in companies USING LLMs to create value and still don't seem interested in new foundational types of models... :/

I guess it makes sense, there is still tons of value to be created just by using the current LLMs for stuff, though maybe the low hanging fruits are already picked, who knows.

I heard John Carmack talk a lot about his alternative (also neuroscience-inspired) ideas and it sounded just like my project, the main difference being that he's able to self-fund :) I guess funding an "outsider" non-LLM AI project now requires finding someone like Carmack to get on board - I still don't think traditional investors are that disappointed yet that they want to risk money on other types of projects..

By godelski 2025-08-08 1:18

> I guess funding an "outsider" non-LLM AI project now requires finding someone like Carmack to get on board

And I think this is a big problem. Especially since these investments tend to be a lot cheaper than the existing ones. Hell, there's stuff in my PhD I tabled and several models I made that I'm confident I could have doubled performance with less than a million dollars worth of compute. My methods could already compete while requiring less compute, so why not give them a chance to scale? I've seen this happen to hundreds of methods. If "scale is all you need" then shouldn't the belief be that any of those methods would also scale?

Markets are very good at squeezing profit out of sometimes ludicrous ventures, not good at all for foundational research.

It's the problem of having organised our economic life in this way, or rather, exclusively this way.

By godelski 2025-08-08 18:01

> exclusively this way.

I think an important part is to recognize that fundamental research is extremely foundational. We often don't recognize the impacts because by the time we see them they have passed through other layers. Maybe in the same way that we forget about the ground existing and being the biggest contributor to Usain Bolt's speed. Can't run if there's no ground.

But to see economic impact, I'll make the bet that a single mathematical work (technically two) had a greater economic impact than all technologies in the last 2 centuries. Calculus. I haven't run the calculations (seems like it'd be hard and I'd definitely need calculus to do them), but I'd be willing to bet that every year Calculus results in a greater economic impact than FAANG, MANGO, or whatever the hot term is these days, does.

It seems almost silly to say this and it is obviously so influential. But things like this fade away into the background the same way we almost never think about the ground beneath our feet.

I have to say this because we're living in a time where people are arguing we shouldn't build roads because cars are the things that get us places. But this is just framing, and poor framing at that. Frankly, part of it is that roads are typically built through public funds and cars through private. It's this way because the road is able to make much higher economic impact by being a public utility rather than a private one. Incidentally, this makes the argument to not build roads self-destructive. It's short sighted. Just like actual roads, research has to be continuously performed. The reality is more akin to those cartoon scenes where a character is laying down the railroad tracks just in front of the speeding train.[0] I guess if you're not Gromit placing down the tracks it is easy to assume they just exist.

But unlike actual roads, research is relatively cheap. Sure, maybe a million mathematicians don't produce anything economically viable for that year, and maybe not for 20, but one will produce something worth trillions. And they do this at a mathematician's salary! You can hire research mathematicians at least 2 to 1 for a junior SWE. 10 to 1 for a staff SWE. It's just crazy to me that we're arguing we don't have the money for these kinds of things. I mean just look at the impact of Tim Berners-Lee and his team. That alone offsets all costs for the foreseeable future. Yet somehow his net worth is in the low millions? I think we really should question this notion that wealth is strongly correlated to impact.

[0] Why does a 10hr version of this exist... https://www.youtube.com/watch?v=fwJHNw9jU_U

I'm pretty curious about the same thing.

I think a somewhat comparable situation is in various online game platforms now that I think about it. Investors would love to make a game like Fortnite, and get the profits that Fortnite makes. So a ton of companies try to make Fortnite. Almost all fail, and make no return whatsoever, just lose a ton of money and toss the game in the bin, shut down the servers.

On the other hand, it may have been more logical for many of them to go for a less ambitious (not always online, not a game that requires a high player count and social buy-in to stay relevant) but still profitable investment (Maybe a smaller scale single player game that doesn't offer recurring revenue), yet we still see a very crowded space for trying to emulate the same business model as something like Fortnite. Another more historical example was the constant question of whether a given MMO would be the next "WoW-killer" all through the 2000's/2010's.

I think part of why this arises is that there's definitely a bit of a psychological hack for humans in particular where if there's a low-probability but extremely high reward outcome, we're deeply entranced by it, and investors are the same. Even if the chances are smaller in their minds than they were before, if they can just follow the same path that seems to be working to some extent and then get lucky, they're completely set. They're not really thinking about any broader bubble that could exist, that's on the level of the society, they're thinking about the individual, who could be very very rich, famous, and powerful if their investment works. And in the mind of someone debating what path to go down, I imagine a more nebulous answer of "we probably need to come up with some fundamentally different tools for learning and research a lot of different approaches to do so" is a bit less satisfying and exciting than a pitch that says "If you just give me enough money, the curve will eventually hit the point where you get to be king of the universe and we go colonize the solar system and carve your face into the moon."

I also have to acknowledge the possibility that they just have access to different information than I do! They might be getting shown much better demos than I do, I suppose.

I'm pretty sure the answer is people buying into the "scaling is all you need" argument. Because if you have that framing then it can be solved through engineering, right? I mean there's still engineering research and it doesn't mean there's no reason to research but everyone loves the simple and straightforward path, right?

> I think a somewhat comparable situation is in various online game platforms

I think it is common in many industries. The weird thing is that being too risk averse creates more risk. There's a balance that needs to be struck. Maybe another famous one is movies. They go on about pirating and how Netflix is winning but most of the new movies are rehashes or sequels. Sure, there's a lot of new movies, but few get nearly the same advertising budgets and so people don't even hear about them (and sequels need less advertising since there's a lot of free advertising). You'd think there'd be more pressure to find the next hit that can lead to a few sequels but instead they tend to be too risk averse. That's the issue of monopolies though... or any industry where the barrier to entry is high...

> psychological hack

While I'm pretty sure this plays a role (along with other things like blind hope) I think the bigger contributor is risk aversion and observation bias. Like you say, it's always easier to argue "look, it worked for them" than "this hasn't been done before, but could be huge." A big part of the bias is that you get to oversimplify the reasoning for the former argument compared to the latter. With the latter you'll get highly scrutinized while the former will overlook many of the conditions that led to success. You're right that the big picture is missing. Especially that a big part of the success was through the novelty (not exactly saying Fortnite is novel via gameplay...). For some reason the success of novelty is almost never seen as motivation to try new things.

I think that's the part that I find most interesting and confusing. It's like an aversion of wanting to look just one layer deeper. We'll put in far more physical and mental energy to justify a shallow thought than what would be required to think deeper. I get we're biased towards being lazy, so I think this is kinda related to us just being bad at foresight and feeling like being wrong is a bad thing (well it isn't good, but I'm pretty sure being wrong and not correcting is worse than just being wrong).

Very interesting way of thinking through it, I agree on pretty much all the points you've stated.

That aversion is really fascinating to dig into, I wonder how much of it is cultural vs something innate to people.

By godelski 2025-08-08 17:37

> cultural vs something innate

I wonder about this too because it seems to have to do with long term planning. Which on one hand seems to be one of the greatest feats humans have accomplished and sets us apart from other animals (we don't just plan for hibernation) but at the same time we're really bad at it and kinda always have been. I think there is an element of the "Marshmallow experiment" here. Rewards now or more rewards in the future. People do act like there's an obvious answer but there's also the old saying "one in the hand is worth two in the bush." But I do think we're currently off balance and hyper focused on one in the hand. Frankly, I don't think the saying is as meaningful if we're talking about berries instead of birds lol.

>I think part of why this arises is that there's definitely a bit of a psychological hack for humans in particular where if there's a low-probability but extremely high reward outcome, we're deeply entranced by it, and investors are the same.

Venture capital is all about low-probability high-reward events.

Get a normal small business loan if you don't want to go big or go home.

By godelski 2025-08-08 1:25

So you agree with us? Should we instead be making the argument that this is an illogical move? Because IME the issue has been that it appears as too risky. I'd like to know if I should just lean into that rather than try to argue it is not as risky as it appears (yet still has high reward, albeit still risky).

By eru 2025-08-07 23:54

We see both things: almost all games are 'not fortnite'. But that doesn't (commercially) invalidate some companies' quest for building the next fortnite.

Of course, if you limit your attention to these 'wannabe fortnites', then you only see these 'wannabe fortnites'.

>Really though, why put all your eggs in one basket? That's what I've been confused about for awhile.

I mean that's easy lol. People don't like to invest in thin air, which is what you get when you look at non-LLM alternatives to General Intelligence.

This isn't meant as a jab or snide remark or anything like that. There's literally nothing else that will get you GPT-2 level performance, never-mind an IMO Gold Medalist. Invest in what else exactly? People are putting their eggs in one basket because it's the only basket that exists.

>I think the big question is if/when investors will start giving money to those who have been predicting this (with evidence) and trying other avenues.

Because those people have still not been proven right. Does "It's an incremental improvement over the model we released a few months ago, and blows away the model we released 2 years ago." really scream, "See!, those people were wrong all along!" to you ?

> which is what you get when you look at non-LLM alternatives to General Intelligence.

I disagree with this. There are good ideas that are worth pursuit. I'll give you that few, if any, have been shown to work at scale but I'd say that's a self-fulfilling prophecy. If your bar is that they have to be proven at scale then your bar is that to get investment you'd have to have enough money to not need investment. How do you compete if you're never given the opportunity to compete? You could be the greatest quarterback in the world but if no one will let you play in the NFL then how can you prove that?

On the other hand, investing in these alternatives is a lot cheaper, since you can work your way to scale and see what fails along the way. This is more like letting people try their stuff out in lower leagues. The problem is there's no ladder to climb after a certain point. If you can't fly then how do you get higher?

> Invest in what else exactly? ... it's the only basket that exists.

I assume you don't work in ML research? I mean that's okay but I'd suspect that this claim would come from someone not on the inside. Though tbf, there's a lot of ML research that is higher level and not working on alternative architectures. I guess the two most well known are Mamba and Flows. I think those would be known by the general HN crowd. While I think neither will get us to AGI I think both have advantages that shouldn't be ignored. Hell, even scaling a very naive Normalizing Flow (related to Flow Matching) has been shown to compete and beat top diffusion models[0,1]. The architectures aren't super novel here but they do represent the first time a NF was trained above 200M params. That's a laughable number by today's standards. I can even tell you from experience that there's a self-fulfilling filtering for this kind of stuff because having submitted works in this domain I'm always asked to compare with models >10x my size. Even if I beat them on some datasets people will still point to the larger model as if that's a fair comparison (as if a benchmark is all that matters and doesn't need to be contextualized).

> Because those people have still not been proven right.

You're right. But here's the thing. *NO ONE HAS BEEN PROVEN RIGHT*. That condition will not exist until we get AGI.

> scream, "See!, those people were wrong all along!" to you ?

Let me ask you this. Suppose people are saying "x is wrong, I think we should do y instead" but you don't get funding because x is currently leading. Then a few years later y is proven to be the better way of doing things, everything shifts that way. Do you think the people who said y was right get funding or do you think people who were doing x but then just switched to y after the fact get funding? We have a lot of history to tell us the most common answer...

>I disagree with this. There are good ideas that are worth pursuit. I'll give you that few, if any, have been shown to work at scale but I'd say that's a self-fulfilling prophecy. If your bar is that they have to be proven at scale then your bar is that to get investment you'd have to have enough money to not need investment. How do you compete if you're never given the opportunity to compete? You could be the greatest quarterback in the world but if no one will let you play in the NFL then how can you prove that? On the other hand, investing in these alternatives is a lot cheaper, since you can work your way to scale and see what fails along the way. This is more like letting people try their stuff out in lower leagues. The problem is there's no ladder to climb after a certain point. If you can't fly then how do you get higher?

I mean this is why I moved the bar down from state of the art.

I'm not saying there are no good ideas. I'm saying none of them have yet shown enough promise to be called another basket in its own right. OpenAI did it first because they really believed in scaling, but anyone (well not literally, but you get what I mean) could have trained GPT-2. You didn't need some great investment, even then. It's that level of promise I'm saying doesn't even exist yet.

>I guess the two most well known are Mamba and Flows.

I mean, Mamba is an LLM? In my opinion, it's the same basket. I'm not saying it has to be a transformer or that you can't look for ways to improve the architecture. It's not like OpenAI or DeepMind aren't pursuing such things. Some of the most promising tweaks/improvements (Byte Latent Transformer, Titans, etc.) are from those top labs.

Flows research is intriguing but it's not another basket in the sense that it's not an alternative to the 'AGI' these people are trying to build.

> Let me ask you this. Suppose people are saying "x is wrong, I think we should do y instead" but you don't get funding because x is currently leading. Then a few years later y is proven to be the better way of doing things, everything shifts that way. Do you think the people who said y was right get funding or do you think people who were doing x but then just switched to y after the fact get funding? We have a lot of history to tell us the most common answer...

The funding will go to players positioned to take advantage. If x was leading for years then there was merit in doing it, even if a better approach came along. Think about it this way, OpenAI now has 700M weekly active users for ChatGPT and millions of API devs. If this superior y suddenly came along and materialized and they assured you they were pivoting, why wouldn't you invest in them over players starting from 0, even if they championed y in the first place? They're better positioned to give you a better return on your money. Of course, you can just invest in both.

OpenAI didn't get nearly a billion weekly active users off the promise of future technology. They got it with products that exist here and now. Even if there's some wall, this is clearly a road with a lot of merit. The value they've already generated (a whole lot) won't disappear if LLMs don't reach the heights some people are hoping they will.

If you want people to invest in y instead then x has to stall or y has to show enough promise. It didn't take transformers many years to embed themselves everywhere because they showed a great deal of promise right from the beginning. It shouldn't be surprising if people aren't rushing to put money in y when neither has happened yet.

> I'm saying none of them have yet shown enough promise to be called another basket in its own right.

Can you clarify what this threshold is?

I know that's one sentence, but I think it is the most important one in my reply. It is really what everything else comes down to. There's a lot of room between even academic scale and industry scale. There's very few things with papers in the middle.

> I mean, Mamba is an LLM

Sure, I'll buy that. LLM doesn't mean transformer. I could have been more clear but I think it would be from context as that means literally any architecture is an LLM if it is large and models language. Which I'm fine to work with.

Though with that, I'd still disagree that LLMs will get us to AGI. I think the whole world is agreeing too as we're moving into multimodal models (sometimes called MMLMs) and so I guess let's use that terminology.

To be more precise, let's say "I think there are better architectures out there than ones dominated by Transformer Encoders". It's a lot more cumbersome but I don't want to say transformers or attention can't be used anywhere in the model or we'll end up having to play this same game. Let's just work with "an architecture that is different than what we usually see in existing LLMs". That work?

> The funding will go to players positioned to take advantage.

I wouldn't put your argument this way. As I understand it, your argument is about timing. I agree with most of what you said tbh.

To be clear my argument isn't "don't put all your money in the 'LLM' basket, put it in this other basket", my argument is "diversify" and "diversification means investing at many levels of research." To clarify that latter part I really like the NASA TRL scale[0]. It's wrong to make a distinction between "engineering vs research" and better to see it as a continuum. I agree, most money should be put into higher levels but I'd be remiss if I didn't point out that we're living in a time where a large number of people (including these companies) are arguing that we should not be funding TRL 1-3 and if we're being honest, I'm talking about stuff currently in TRL 3-5. I mean it is a good argument to make if you want to maintain dominance, but it is not a good argument if you want to continue progress (which I think is what leads to maintaining dominance as long as that dominance isn't through monopoly or over centralization). Yes, most of the lower level stuff fails. But luckily the lower level stuff is much cheaper to fund. A mathematician's salary and a chalk board is at least half as expensive as the salary of a software dev (and probably closer to an order of magnitude if we're considering the cost of hiring either of them).

But I think that returns us to the main point: what is that threshold?

My argument is simply "there should be no threshold, it should be continuous". I'm not arguing for a uniform distribution either, I explicitly said more to higher TRLs. I'm arguing that if you want to build a house you shouldn't ignore the foundation. And the fancier the house, the more you should care about the foundation. Lest you risk it all falling down.

[0] https://www.nasa.gov/directorates/somd/space-communications-...

>Can you clarify what this threshold is? I know that's one sentence, but I think it is the most important one in my reply. It is really what everything else comes down to. There's a lot of room between even academic scale and industry scale. There's very few things with papers in the middle.

Something like GPT-2. Something that, even before being actually useful or particularly coherent, was interesting enough to spark articles like these. https://slatestarcodex.com/2019/02/19/gpt-2-as-step-toward-g... So far, only LLM/LLM-adjacent stuff meets this criterion.

To be clear, I'm not saying general R&D must meet this requirement. Not at all. But if you're arguing about diverting millions/billions in funds from x that is working to y then it has to at least clear that bar.

> My argument is simply "there should be no threshold, it should be continuous".

I don't think this is feasible for large investments. I may be wrong, but i also don't think other avenues aren't being funded. They just don't compare in scale because....well they haven't really done anything to justify such scale yet.

> Something like GPT-2

I got 2 things to say here

1) There's plenty of things that can achieve similar performance to GPT-2 these days. We mentioned Mamba, they compared to GPT-3 in their first paper[0]. They compare with the open sourced version and you'll also see some other architectures referenced there like Hyena and H3. It's the GPT-Neo and GPT-J models. Remember GPT-3 is pretty much just a scaled up GPT-2.

2) I think you are underestimating the costs to train some of these things. I know Karpathy said you can now train GPT-2 for like $1k[1] but a single training run is a small portion of the total costs. I'll reference StyleGAN3 here just because the paper has good documentation on the very last page[2]. Check out the breakdown but there's a few things I want to specifically point out. The whole project cost 92 V100 years but the results of the paper only accounted for 5 of those. That's 53 of the 1876 training runs. Your $1k doesn't get you nearly as far as you'd think. If we simplify things and say everything in that 5 V100 years cost $1k then that means they spent $85k before that. They spent $18k before they even went ahead with that project. If you want realistic numbers, multiply that by 5 because that's roughly what a V100 will run you (discounted for scale). ~$110k ain't too bad, but that is outside the budget of most small labs (including most of academia). And remember, that's just the cost of the GPUs, that doesn't pay for any of the people running that stuff.
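The proportional reasoning in that paragraph can be sketched in a few lines of Python. This is only an illustration under the comment's own simplifying assumptions (the 5 V100-years behind the published results priced at $1k, then a 5x multiplier for more realistic GPU pricing). The constants come from the comment, the helper name `scaled_cost` is made up here, and the outputs follow from plain linear scaling rather than reproducing the rounded dollar figures quoted above.

```python
# Figures quoted in the comment (StyleGAN3 compute breakdown).
TOTAL_V100_YEARS = 92      # whole project
REPORTED_V100_YEARS = 5    # compute behind the results shown in the paper
TOTAL_RUNS = 1876
REPORTED_RUNS = 53

# Assumption from the comment: the reported 5 V100-years cost about $1k.
ASSUMED_COST_OF_REPORTED_USD = 1_000
# Assumption from the comment: realistic GPU pricing is roughly 5x that.
REALISTIC_MULTIPLIER = 5


def scaled_cost(v100_years, reference_years, reference_cost_usd):
    """Scale a reference cost linearly with compute (hypothetical helper)."""
    return v100_years * reference_cost_usd / reference_years


total_cost = scaled_cost(
    TOTAL_V100_YEARS, REPORTED_V100_YEARS, ASSUMED_COST_OF_REPORTED_USD
)
# Everything that is not the headline results: exploration, failed runs, etc.
unreported_cost = total_cost - ASSUMED_COST_OF_REPORTED_USD
realistic_total = total_cost * REALISTIC_MULTIPLIER

# Fraction of the project that is visible in the paper's results.
compute_share = REPORTED_V100_YEARS / TOTAL_V100_YEARS
run_share = REPORTED_RUNS / TOTAL_RUNS

print(f"total under the $1k assumption: ${total_cost:,.0f}")
print(f"spent outside reported results: ${unreported_cost:,.0f}")
print(f"total with the 5x multiplier:   ${realistic_total:,.0f}")
print(f"share of compute in the paper:  {compute_share:.1%}")
print(f"share of runs in the paper:     {run_share:.1%}")
```

Under these assumptions only about 5% of the compute (and under 3% of the runs) shows up in the published results, which is the comment's point: a single headline training run is a small slice of what a project actually costs.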

I don't expect you to know any of this stuff if you're not a researcher. Why would you? It's hard enough to keep up with the general AI trends, let alone niche topics lol. It's not an intelligence problem, it's a logistics problem, right? A researcher's day job is being in those weeds. You just get a lot more hours in the space. I mean I'm pretty out of touch with plenty of domains just because of time constraints.

So I'm trying to say, I think your bar has been met.

> I don't think this is feasible for large investments. I may be wrong, but i also don't think other avenues aren't being funded.

And I think if we are actually looking at the numbers, yeah, I do not think these avenues are being funded. But don't take it from me, take it from FeiFei Li[3]

| Not a single university today can train a ChatGPT model

I'm not sure if you're a researcher or not, you haven't answered that question. But I think if you were you'd be aware of this issue because you'd be living with it. If you were a PhD student you would see the massive imbalance of GPU resources given to those working closely with big tech vs those trying to do things on their own. If you were a researcher you'd also know that even inside those companies there aren't many resources given to people to do these things. You get them on occasion like the StarFlow and TarFlow I pointed out before, but these tend to be pretty sporadic. Even a big reason we talk about Mamba is because of how much they spent on it.

But if you aren't a researcher I'd ask why you have such confidence that these things are being funded and that these things cannot be scaled or improved[4]. History is riddled with examples of inferior tech winning mostly due to marketing. I know we get hyped around new tech, hell, that's why I'm a researcher. But isn't that hype a reason we should try to address this fundamental problem? Because the hype is about the advance of technology, right? I really don't think it is about the advancement of a specific team, so if we have the opportunity for greater and faster advancement, isn't that something we should encourage? Because I don't understand why you're arguing against that. An exciting thing of working at the bleeding edge is seeing all the possibilities. But a disheartening thing about working at the bleeding edge is seeing many promising avenues be passed by for things like funding and publicity. Do we want meritocracy to win out or the dollar?

I guess you'll have to ask yourself: what's driving your excitement?

[0] I mean the first Mamba paper, not the first SSM paper btw: https://arxiv.org/abs/2312.00752

[1] https://github.com/karpathy/llm.c/discussions/677

[2] https://arxiv.org/abs/2106.12423

[3] https://www.ft.com/content/d5f91c27-3be8-454a-bea5-bb8ff2a85...

[4] I'm not saying any of this stuff is straight de facto better. But there definitely is an attention imbalance and you have to compare like to like. If you get to x in 1000 man hours and someone else gets there in 100, it may be worth taking a look deeper. That's all.

I'm not a researcher.

I acknowledge Mamba, RWKV, Hyena and the rest but like I said, they fall under the LLM bucket. All these architectures have 7B+ models trained too. That's not no investment. They're not "winning" over transformers because they're not slam dunks, not because no-one is investing in them. They bring improvements in some areas but with detractions that make switching not a straightforward "this is better", which is what you're going to need to divert significant funds from an industry leading approach that is still working.

What happens when you throw away state information vital for a future query ? Humans can just re-attend (re-read that book, re-watch that video etc), Transformers are always re-attending, but SSMs, RWKV ? Too bad. A lossy state is a big deal when you can not re-attend.
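A toy Python sketch of the re-attending point. It is not how SSMs or RWKV actually work (they compress history into a fixed-size state rather than evicting whole entries); the two classes and the capacity of 4 are hypothetical and only contrast a memory that keeps everything it has seen with one that has a fixed budget.

```python
from collections import OrderedDict


class FullContextMemory:
    """Keeps every (key, value) pair, loosely like a KV cache you can re-attend to."""

    def __init__(self):
        self.items = []

    def write(self, key, value):
        self.items.append((key, value))

    def read(self, key):
        # Scan the whole history, most recent first.
        for k, v in reversed(self.items):
            if k == key:
                return v
        return None


class FixedStateMemory:
    """Fixed-size state: once full, the oldest entry is dropped for good."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.state = OrderedDict()

    def write(self, key, value):
        self.state[key] = value
        self.state.move_to_end(key)
        while len(self.state) > self.capacity:
            self.state.popitem(last=False)  # evict the oldest entry

    def read(self, key):
        # Nothing to go back to: evicted information is simply gone.
        return self.state.get(key)


full = FullContextMemory()
fixed = FixedStateMemory(capacity=4)

# Stream ten facts through both memories.
for i in range(10):
    full.write(f"fact{i}", f"value{i}")
    fixed.write(f"fact{i}", f"value{i}")

early_from_full = full.read("fact0")
early_from_fixed = fixed.read("fact0")
recent_from_fixed = fixed.read("fact9")

print("full context, early fact:", early_from_full)
print("fixed state, early fact: ", early_from_fixed)
print("fixed state, recent fact:", recent_from_fixed)
print("sizes:", len(full.items), "vs", len(fixed.state))
```

The trade-off is visible in the last line: the full-context memory answers the early query but grows with the stream, while the fixed-size state stays small and has nothing to return once the relevant entry has been discarded.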

Plus some of those improvements are just theoretical. Improved inference-time batching and efficient attention (flash, windowed, hybrid, etc.) have allowed transformers to remain performant over some of these alternatives rendering even the speed advantage moot, or at least not worth switching over. It's not enough to simply match transformers.

>Because I don't understand why you're arguing against that.

I'm not arguing anything. You asked why the disproportionate funding. Non-transformer LLMs aren't actually better than transformers and non-LLM options are non-existent.

By godelski 2025-08-08 22:54

So fair, they fall under the LLM bucket but I think most things can. Still, my point is that there's a very narrow exploration of techniques. Call it what you want, that's the problem.

And I'm not arguing there's zero investment, but it is incredibly disproportionate and there's a big push for it to be more disproportionate. It's not about all or none, it is about the distribution of those "investments" (including government grants and academic funding).

With the other architectures I think you're being too harsh. Don't let perfection get in the way of good enough. We're talking about research. More specifically, about what warrants more research. Where would transformers be today if we made similar critiques? Hell, we have a real life example with diffusion models. Sohl-Dickstein's paper came out a year after Goodfellow's GAN paper and yet it took 5 years for DDPM to come out. The reason this happened is because at the time GANs were better performing and so the vast majority of effort was over there. At least 100x more effort if not 1000x. So the gap just widened. The difference in the two models really came down to scale and the parameterization of the diffusion process, which is something mentioned in the Sohl-Dickstein paper (specifically as something that should be further studied). It took 5 years really because very few people were looking. Even at that time it was known that the potential of diffusion models was greater than GANs but the concentration went to what worked better at that moment[0]. You can even see a similar thing with ViTs if you want to go look up Cordonnier's paper. The time gap is smaller but so is the innovation. ViT barely changes in architecture.

There's lots of problems with SSM and other architectures. I'm not going to deny that (I already stated as much above). The ask is to be given a chance to resolve those problems. An important part of that decision is understanding the theoretical limits of these different technologies. The question is "can these problems be overcome?" It's hard to answer, but so far the answer isn't "no". That's why I'm talking about diffusion and ViTs above. I could even bring in Normalizing Flows and Flow Matching which are currently undergoing this change.

> It's not enough to simply match transformers.

I think you're both right and wrong. And I think you agree unless you are changing your previous argument.

Where I think you're right is that the new thing needs to show capabilities that the current thing can't. Then you have to provide evidence that its own limitations can be overcome in such a way that overall it is better. I don't say strictly because there is no global optimum. I want to make this clear because there will always be limitations or flaws. Perfection doesn't exist.

Where I think you're wrong is a matter of context. If you want the new thing to match or be better than SOTA transformer LLMs then I'll refer you back to the self-fulfilling prophecy problem from my earlier comment. You never give anything a chance to become better because it isn't better from the get go.

I know I've made that argument before, but let me put it a different way. Suppose you want to learn the guitar. Do you give up after you first pick it up and find out that you're terrible at it? No, that would be ridiculous! You keep at it because you know you have the capacity to do more. You continue doing it because you see progress. The logic is the exact same here. It would be idiotic of me to claim that because you can only play Mary Had A Little Lamb that you'll never be able to play a song that people actually want to listen to. That you'll never amount to anything and should just give up playing now.

My argument here is: don't give up. Look how far you've come. Sure, you can only play Mary Had A Little Lamb, but not long ago you couldn't play a single chord. You couldn't even hold the guitar the right way up! Being bad at things is not a reason to give up on them. Being bad at things is the first step to being good at them. The reason to give up on things is because they have no potential. Don't confuse lack of success with lack of potential.

I guess you don't realize it, but you are making an argument. You were trying to answer my question, right? That is an argument. I don't think we're "arguing" in the bitter or upset way. I'm not upset with you and I hope you aren't upset with me. We're learning from each other, right? And there's not a clear answer to my original question either [1]. But I'm making my case for why we should have a bit more of what we currently use so that we get more in the future. It sounds scary, but we know that by sacrificing some of our food that we can use it to make even more food next year. I know it's in the future, but we can't completely sacrifice the future for the present. There needs to be balance. And research funding is just like crop planning. You have to plan with excess in mind. If you're lucky, you have a very good year. But if you're unlucky, at least everyone doesn't starve. Given that we're living in those fruitful lucky years, I think it is even more important to continue the trend. We have the opportunity to have so many more fruitful years ahead. This is how we avoid crashes and those cycles that tech so frequently goes through. It's all there written in history. All you have to do is ask what led to these fruitful times. You cannot ignore that a big part was that lower level research.

> I'm not arguing anything. You asked why the disproportionate funding.

[0] Some of this also has to do with the publish or perish paradigm but this gets convoluted and itself is related to funding because we similarly provide far more funding to what works now compared to what has higher potential. This is logical of course, but the complexity of the conversation is that it has to deal with the distribution.

[1] I should clarify, my original question was a bit rhetorical. You'll notice that after asking it I provided an argument that this was a poor strategy. That's a framing of the problem. I mean I live in this world, I am used to people making the case from the other side.

By csomar 2025-08-08 2:34 The current money made its money following the market. They do not have the capacity for innovation or risk taking.

> Really though, why put all your eggs in one basket? That's what I've been confused about for awhile. Why fund yet another LLMs to AGI startup.

Funding multiple startups means _not_ putting your eggs in one basket, doesn't it?

Btw, do we have any indication that eg OpenAI is restricting themselves to LLMs?

By godelski 2025-08-08 1:21

Different basket hierarchy.

> Funding multiple startups means _not_ putting your eggs in one basket, doesn't it?

Also, yes. They state this, and given that there are plenty of open source models that are LLMs and get competitive performance, it at least indicates that anyone not doing LLMs is doing so in secret.

If OpenAI isn't using LLMs then doesn't that support my argument?

By hnuser123456 2025-08-07 19:38 (1 reply) I agree, we have now proven that GPUs can ingest information and be trained to generate content for various tasks. But to put it to work, make it useful, requires far more thought about a specific problem and how to apply the tech. If you could just ask GPT to create a startup that'll be guaranteed to be worth $1B on a $1k investment within one year, someone else would've already done it. Elbow grease still required for the foreseeable future.

In the meantime, figuring out how to train them to make less of their most common mistakes is a worthwhile effort.

Certainly, yes, plenty of elbow grease required in all things that matter.

The interesting point as well to me though, is that if it could create a startup that was worth $1B, that startup wouldn't be worth $1B.

Why would anyone pay that much to invest in the startup if they could recreate the entire thing with the same tool that everyone would have access to?

> if they could recreate the entire thing with the same tool

"Within one year" is the key part. The product is only part of the equation.

If a startup was launched one year ago and is worth $1B today, there is no way you can launch the same startup today and achieve the same market cap in 1 day. You still need customers, which takes time. There are also IP related issues.

Facebook had the resources to create an exact copy of Instagram, or WhatsApp, but they didn't. Instead, they paid billions of dollars to acquire those companies.

By disgruntledphd2 2025-08-08 10:35 > Facebook had the resources to create an exact copy of Instagram

They tried this first (Camera I believe it was called) and failed.

By RossBencina 2025-08-07 23:02 (1 reply) If you created a $1B startup using LLMs, would you be advertising it? Or would you be creating more $1B startups?

By morleytj 2025-08-07 23:36 The comment I'm replying to poses the following scenario:

"If you could just ask GPT to create a startup that'll be guaranteed to be worth $1B on a $1k investment within one year"

I think if the situation is that I do this by just asking it to make a startup, it seems unlikely that no one else would be aware that they could just ask it to make a startup.

By BoiledCabbage 2025-08-07 19:38 (3 replies) Performance is doubling roughly every 4-7 months. That trend is continuing. That's insane.

If your expectations were any higher than that, then it seems like you were caught up in hype. Doubling 2-3 times per year isn't leveling off by any means.
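As a quick sanity check on the arithmetic in this comment, here is a minimal sketch that converts a doubling period into doublings per year and a yearly growth factor. The function names are made up for illustration, and it assumes clean exponential growth, which the thread itself disputes. Under that assumption, a 4-7 month doubling period works out to roughly 1.7 to 3 doublings per year.

```python
def doublings_per_year(months_per_doubling: float) -> float:
    """Number of doublings that fit into 12 months."""
    return 12 / months_per_doubling


def yearly_growth_factor(months_per_doubling: float) -> float:
    """Overall multiplier after one year of steady doubling."""
    return 2 ** doublings_per_year(months_per_doubling)


if __name__ == "__main__":
    # The two ends of the "every 4-7 months" range quoted above.
    for months in (4, 7):
        print(
            f"doubling every {months} months: "
            f"{doublings_per_year(months):.2f} doublings/year, "
            f"x{yearly_growth_factor(months):.2f} per year"
        )
```

So the slow end of the range is a little under two doublings per year rather than a full two, though the overall point about the pace is unchanged.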

By morleytj 2025-08-07 20:00 I wouldn't say model development and performance is "leveling off", and in fact didn't write that. I'd say that tons more funding is going into the development of many models, so one would expect performance increases unless the paradigm was completely flawed at its core, a belief I wouldn't personally profess to. My point was more so the following: A couple years ago it was easy to find people saying that all we needed was to add in video data, or genetic data, or some other data modality, in the exact same format that the models trained on existing language data were, and we'd see a fast takeoff scenario with no other algorithmic changes. Given that the top labs seem to be increasingly investigating alternate approaches to setting up the models beyond just adding more data sources, and have been for the last couple years (which, I should clarify, is a good idea in my opinion), then the probability of those statements of just adding more data or more compute taking us straight to AGI being correct seems at the very least slightly lower, right?

Rather than my personal opinion, I was commenting on commonly viewed opinions of people I would believe to have been caught up in hype in the past. But I do feel that although that's a benchmark, it's not necessarily the end-all of benchmarks. I'll reserve my final opinions until I test personally, of course. I will say that increasing the context window probably translates pretty well to longer context task performance, but I'm not entirely convinced it directly translates to individual end-step improvement on every class of task.

By andrepd 2025-08-07 22:48 We can barely measure "performance" in any objective sense, let alone claim that it's doubling every 4 months.....

By "performance" I guess you mean "the length of task that can be done adequately"?

It is a benchmark but I'm not very convinced it's the be-all, end-all.

By nomel 2025-08-07 22:17 > It is a benchmark but I'm not very convinced it's the be-all, end-all.

Who's suggesting it is?

>you'd expect GPT-5 to be a world-shattering release rather than incremental and stable improvement.

Compared to the GPT-4 release which was a little over 2 years ago (less than the gap between 3 and 4), it is. The only difference is we now have multiple organizations releasing state of the art models every few months. Even if models are improving at the same rate, those same big jumps after every handful of months were never realistic.

It's an incremental stable improvement over o3, which was released what? 4 months ago.

By morleytj 2025-08-07 23:18 The benchmarks certainly seem to be improving from the presentation. I don't think they started training this 4 months ago though.

There's gains, but the question is, how much investment for that gain? How sustainable is that investment to gain ratio? The things I'm curious about here are more about the amount of effort being put into this level of improvement, rather than the time.

By brandall10 2025-08-07 20:53 (1 reply) To be fair, this is one of the pathways GPT-5 was speculated to take as far back as 6 or so months ago - simply being an incremental upgrade from a performance perspective, but a leap from a product simplification approach.

At this point it's pretty much a given that it's a game of inches moving forward.

> a leap from a product simplification approach.

According to the article, GPT-5 is actually three models and they can be run at 4 levels of thinking. That's a dozen ways you can run any given input on "GPT-5", so it's hardly a simple product lineup (but maybe better than before).
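To make the "dozen ways" count concrete, here is a small sketch that enumerates every model and reasoning-level pairing described in the article. The model identifiers and the `reasoning_level` key are illustrative assumptions, not confirmed API names; only the three sizes (regular, mini, nano) and four levels (minimal, low, medium, high) come from the article.

```python
from itertools import product

# Assumed identifiers for the regular, mini and nano models.
MODELS = ("gpt-5", "gpt-5-mini", "gpt-5-nano")
# The four reasoning levels listed in the article.
REASONING_LEVELS = ("minimal", "low", "medium", "high")


def configurations() -> list[dict[str, str]]:
    """Return every (model, reasoning level) pairing as a small dict."""
    return [
        {"model": model, "reasoning_level": level}
        for model, level in product(MODELS, REASONING_LEVELS)
    ]


configs = configurations()

if __name__ == "__main__":
    for config in configs:
        print(config)
    print(f"{len(configs)} combinations in total")
```

Three models times four levels gives the twelve combinations mentioned above.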

By brandall10 2025-08-07 23:54 It's a big improvement from an API consumer standpoint - everything is now under a single product family that is logically stratified... up until yesterday people were using o3, o4-mini, 4o, 4.1, o3, and all their variants as valid choices for new products, now those are moved off the main page as legacy or specialized options for the few things GPT-5 doesn't do.

It's even more simplified for the ChatGPT plan: it's just GPT-5 thinking/non-thinking for most accounts, and then the option of Pro for the higher end accounts.

By eru 2025-08-07 23:55 A bit like Google Search uses a lot of different components under the hood?

> Maybe they've got some massive earthshattering model release coming out next, who knows.

Nothing in the current technology offers a path to AGI. These models are fixed after training completes.

Why do you think that AGI necessitates modification of the model during use? Couldn’t all the insights the model gains be contained in the context given to it?

Because time marches on and with it things change.

You could maybe accomplish this if you could fit all new information into context or with cycles of compression but that is kinda a crazy ask. There's too much new information, even considering compression. It certainly wouldn't allow for exponential growth (I'd expect sub linear).

I think a lot of people greatly underestimate how much new information is created every day. It's hard if you're not working on any research and seeing how incremental but constant improvement compounds. But try just looking at whatever company you work for. Do you know everything that people did that day? It takes more time to generate information than process information so that's on your side, but do you really think you could keep up? Maybe at a very high level but in that case you're missing a lot of information.

Think about it this way: if that could be done then LLMs wouldn't need training or tuning because you could do everything through prompting.

The specific instance doesn’t need to know everything happening in the world at once to be AGI though. You could feed the trained model different contexts based on the task (and even let the model tell you what kind of raw data it wants) and it could still hypothetically be smarter than a human.

I’m not saying this is a realistic or efficient method to create AGI, but I think the argument „Model is static once trained -> model can’t be AGI“ is fallacious.

By godelski 2025-08-07 23:37 I think that makes a lot of assumptions about the size of data and what can be efficiently packed into prompts. Even if we're assuming all info in a prompt is equal while in context and that it compresses information into the prompts before it falls out of context, then you're going to run into the compounding effects pretty quickly.

You're right, you don't technically need infinite, but we are still talking about exponential growth and I don't think that effectively changes anything.

Like I already said, the model can remember stuff as long as it’s in the context. LLMs can obviously remember stuff they were told or output themselves, even a few messages later.

AGI needs to genuinely learn and build new knowledge from experience, not just generate creative outputs based on what it has already seen.

LLMs might look “creative” but they are just remixing patterns from their training data and what is in the prompt. They can’t actually update themselves or remember new things after training as there is no ongoing feedback loop.

This is why you can’t send an LLM to medical school and expect it to truly “graduate”. It cannot acquire or integrate new knowledge from real-world experience the way a human can.

Without a learning feedback loop, these models are unable to interact meaningfully with a changing reality or fulfill the expectation from an AGI: Contribute to new science and technology.

I agree that this is kind of true with a plain chat interface, but I don’t think that’s an inherent limit of an LLM. I think OpenAI actually has a memory feature where the LLM can specify data it wants to save and can then access later. I don’t see why this in principle wouldn’t be enough for the LLM to learn new data as time goes on. All possible counter arguments seem related to scale (of memory and context size), not the principle itself.