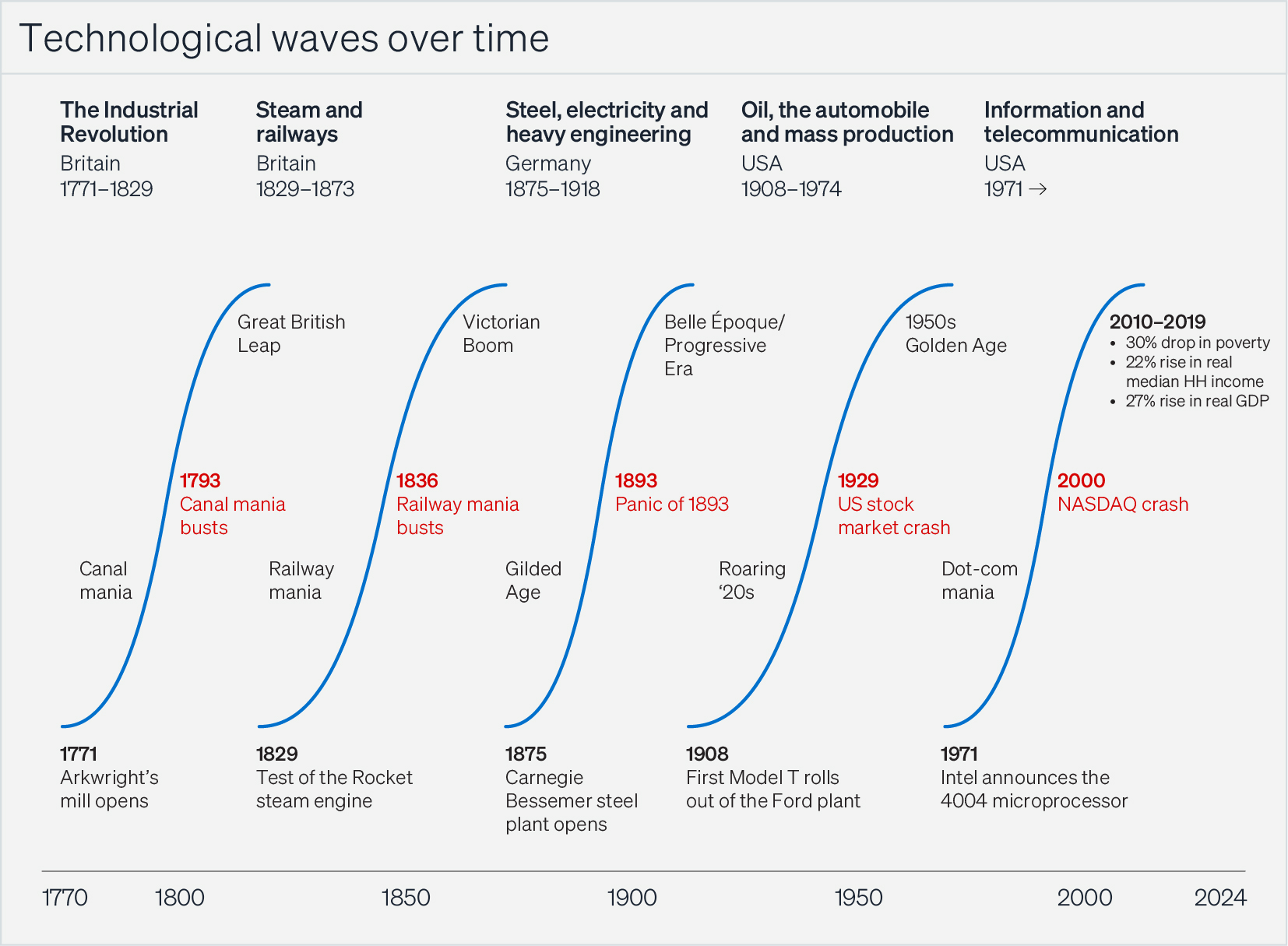

Fortunes are made by entrepreneurs and investors when revolutionary technologies enable waves of innovative, investable companies. Think of the railroad, the Bessemer process, electric power, the internal combustion engine, or the microprocessor—each of which, like a stray spark in a fireworks factory, set off decades of follow-on innovations, permeated every part of society, and catapulted a new set of inventors and investors into power, influence, and wealth.

Yet some technological innovations, though societally transformative, generate little in the way of new wealth; instead, they reinforce the status quo. Fifteen years before the microprocessor, another revolutionary idea, shipping containerization, arrived at a less propitious time, when technological advancement was a Red Queen’s race, and inventors and investors were left no better off for non-stop running.

Anyone who invests in the new new thing must answer two questions: First, how much value will this innovation create? And second, who will capture it? Information and communication technology (ICT) was a revolution whose value was captured by startups and led to thousands of newly rich founders, employees, and investors. In contrast, shipping containerization was a revolution whose value was spread so thin that in the end, it made only a single founder temporarily rich and only a single investor a little bit richer.

Is generative AI more like the former or the latter? Will it be the basis of many future industrial fortunes, or a net loser for the investment community as a whole, with a few zero-sum winners here and there?

There are ways to make money investing in the fruits of AI, but they will depend on assuming the latter—that it is once again a less propitious time for inventors and investors, that AI model builders and application companies will eventually compete each other into an oligopoly, and that the gains from AI will accrue not to its builders but to customers. A lot of the money pouring into AI is therefore being invested in the wrong places, and aside from a couple of lucky early investors, those who make money will be the ones with the foresight to get out early.

The microprocessor was revolutionary, but the people who invented it at Intel in 1971 did not see it that way—they just wanted to avoid designing desktop calculator chipsets from scratch every time. But outsiders realized they could use the microprocessor to build their own personal computers, and enthusiasts did. Thousands of tinkerers found configurations and uses that Intel never dreamed of. This distributed and permissionless invention kicked off a “great surge of development,” as the economist Carlota Perez calls it, triggered by technology but driven by economic and societal forces.[1]

There was no real demand for personal computers in the early 1970s; they were expensive toys. But the experimenters laid the technical groundwork and built a community. Then, around 1975, a step-change in the cost of microprocessors made the personal computer market viable. The Intel 8080 had an initial list price of $360 ($2,300 in today’s dollars). MITS could barely turn a profit on its Altair even when buying the chip at a bulk price of $75 each ($490 today). But when MOS Technology started selling its 6502 for $25 ($150 today), Steve Wozniak could afford to build a prototype Apple. The 6502 and the similarly priced Zilog Z80 forced Intel’s prices down. The nascent PC community started spawning entrepreneurs, and a score of companies appeared, each with a slightly different product.

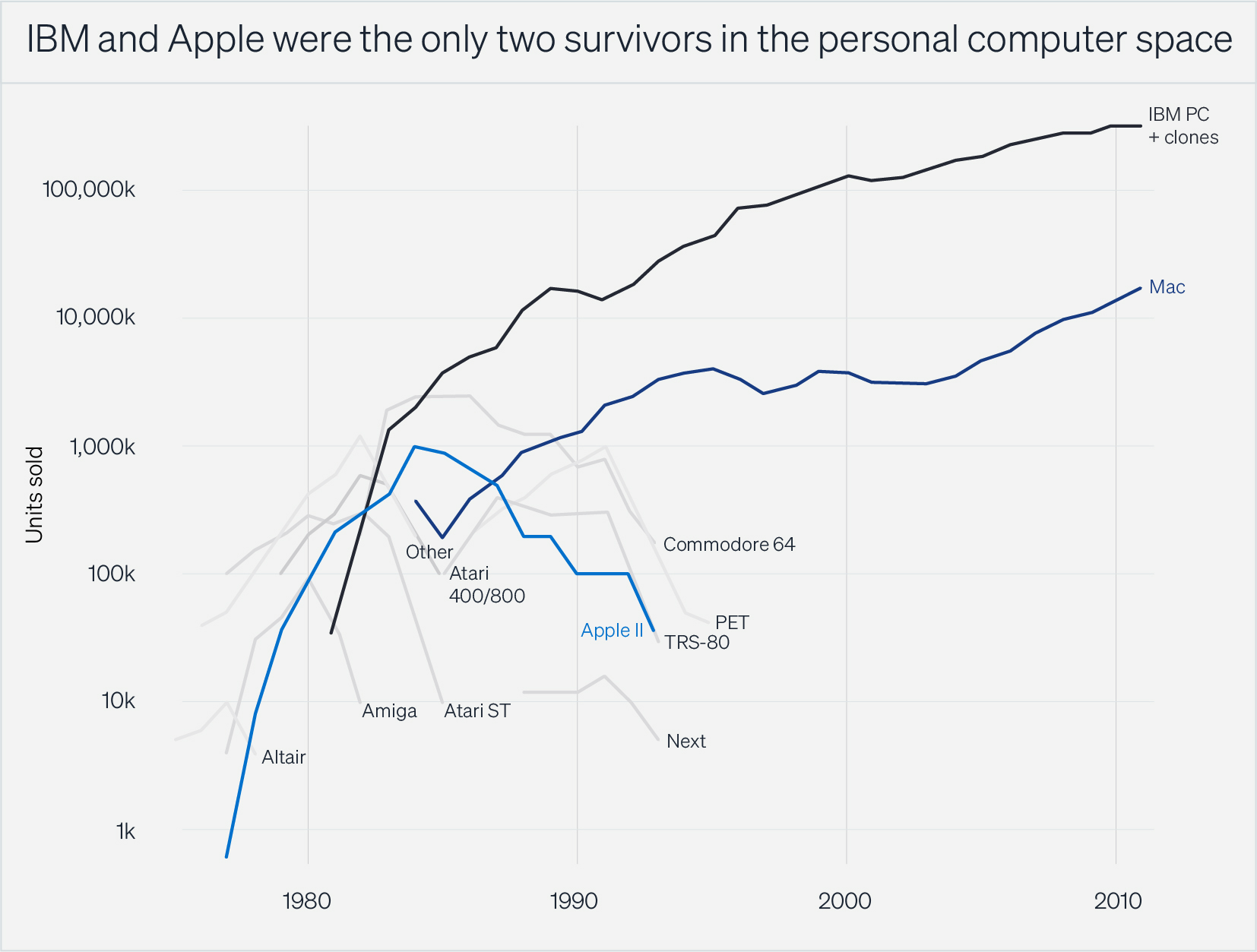

You couldn’t have known in the mid-1970s that the PC (and PC-like products, such as ATMs, POS terminals, smartphones, etc.) would revolutionize everything. While Steve Jobs was telling investors that every household would someday have a personal computer (a wild underestimate, as it turned out), others questioned the need for personal computers at all. As late as 1979, Apple’s ads didn’t tell you what a personal computer could do; they asked what you did with it.[2] The established computer manufacturers (IBM, HP, DEC) had no interest in a product their customers weren’t asking for. Nobody “needed” a computer, and so PCs weren’t bought; they were sold. Flashy startups like Apple and Sinclair used hype to get noticed, while companies with footholds in consumer electronics like Atari, Commodore, and Tandy/RadioShack used strong retail connections to put their PCs in front of potential customers.

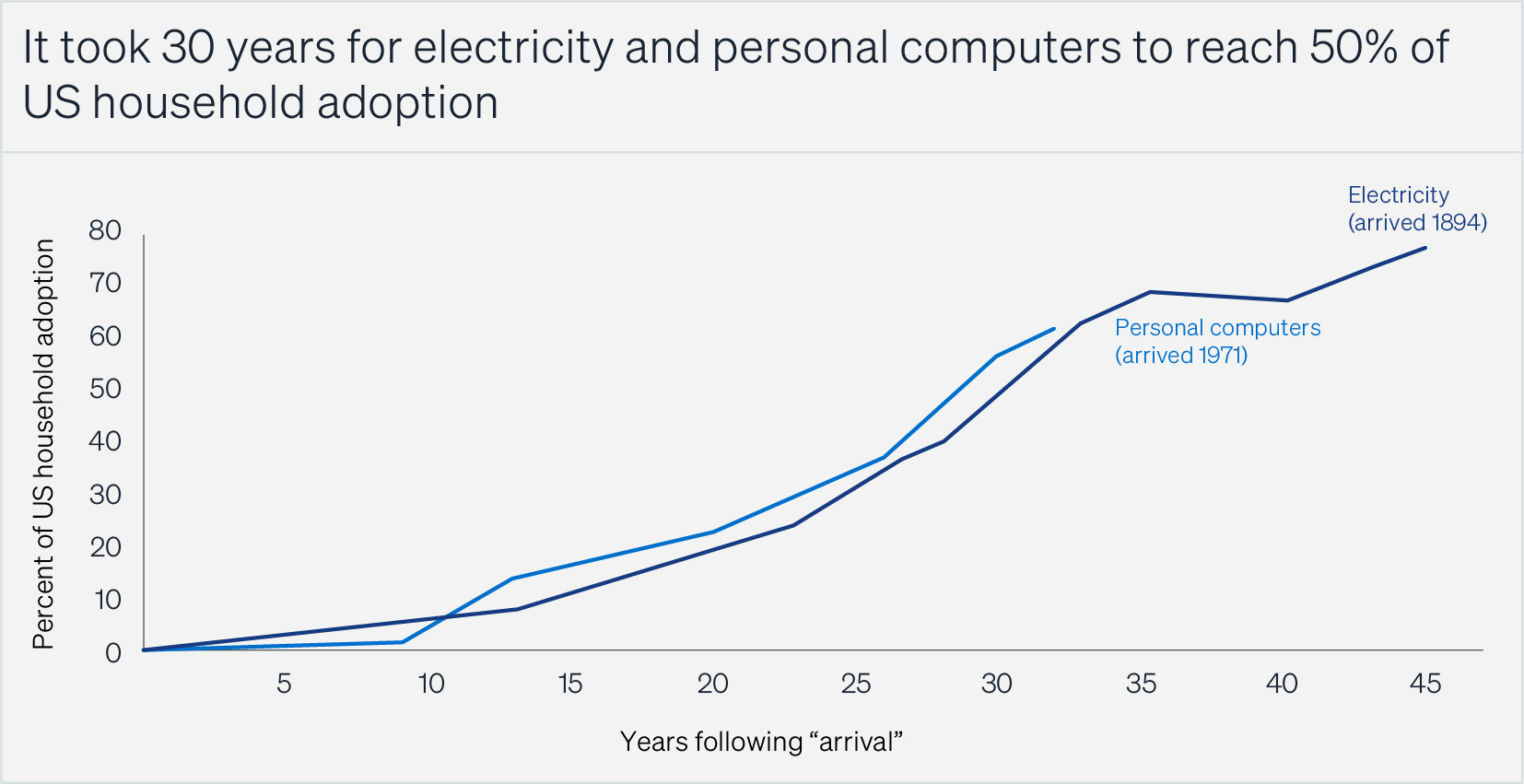

The market grew slowly at first, accelerating only as experiments led to practical applications like the spreadsheet, introduced in 1979. As use grew, observation of use caused a reduction in uncertainty, leading to more adoption in a self-reinforcing cycle. This kind of gathering momentum takes time in every technological wave: It took almost 30 years for electricity to reach half of American households, for example, and it took about the same amount of time for personal computers.[3] When a technological revolution changes everything, it takes a huge amount of innovation, investment, storytelling, time, and plain old work. It also sucks up all the money and talent available. Like Kuhn’s paradigms in science, any technology not part of the wave’s techno-economic paradigm will seem like a sideshow.[4]

The nascent growth of PCs attracted investors—venture capitalists—who started making risky bets on new companies. This development incentivized more inventors, entrepreneurs, and researchers, which in turn drew in more speculative capital.

Companies like IBM, the computing behemoth before the PC, saw poor relative performance. They didn’t believe the PC could survive long enough to become capable in their market and didn’t care about new, small markets that wanted a cheaper solution.

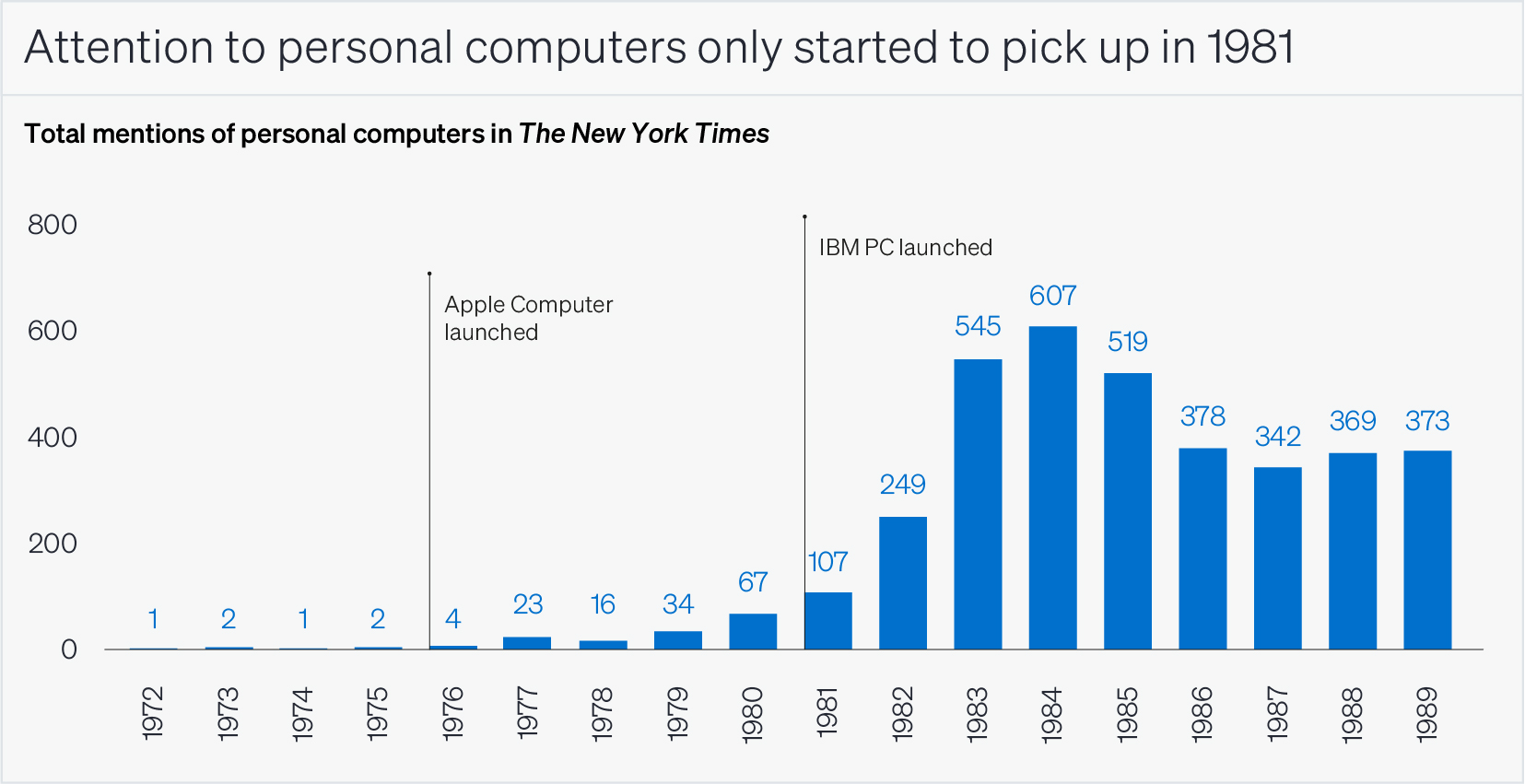

Retroactively, we give the PC pioneers the powers of prophets rather than visionaries. But at the time, nobody outside of a small group of early adopters paid any attention. Establishment media like The New York Times didn’t take the PC seriously until after IBM’s was introduced in August 1981. In the entire year of 1976, when Apple Computer was founded, the NYT mentioned PCs only four times.[5] Apparently, only the crazy ones, the misfits, the rebels, and the troublemakers were paying attention.

It’s the element of surprise that should strike us most forcefully when we compare the early days of the computer revolution to today. No one took note of personal computers in the 1970s. In 2025, AI is all we seem to talk about.

Big companies hate surprises. That’s why uncertainty makes a perfect moat for startups. Apple would never have survived IBM entering the market in 1979, and only lived to compete another day after raising $100 million in its 1980 IPO. It was the only remaining competitor after the IBM-induced winnowing.[6]

As the tech took hold and started to show promise, innovations in software, memory, and peripherals like floppy disk drives and modems joined it. They reinforced one another, with each advance putting pressure on the technologies adjacent to it. When any part of the system held back the other parts, investors rushed to fund that sector. As increases in PC memory allowed more complicated software, for example, a need arose for more external storage, which led VC Dave Marquardt to invest in disk drive manufacturer Seagate in 1980. Seagate gave Marquardt a 40x return when it went public in 1981. Other investors noticed, and some $270 million was plowed into the industry in the following three years.[7]

Money also poured into the underlying infrastructure (fiber optic networks, chip making, etc.) so that capacity was never a bottleneck. Companies that used the new technological system to outperform incumbents began to take market share, and even staid competitors realized they needed to adopt the new thing or die. The hype became a froth, which became an investment bubble: the dot-com frenzy of the late 1990s. The ICT wave was therefore similar to previous ones (like the investment mania of the 1830s and the Roaring ’20s, which followed the infrastructure buildout of canals and railways, respectively), in which the human response to each stage predictably generated the next.

When the dot-com bubble popped, society found it disapproved of the excesses in the sector and governments found they had the popular support to reassert authority over the tech companies and their investors. This put a brake on the madness. Instead of the reckless innovation of the bubble, companies started to expand into proven markets, and financiers moved from speculating to investing. Entrepreneurs began to focus on finding applications rather than on innovating the underlying technologies. Technological improvements continued, but change became more evolutionary than revolutionary.

As change slowed, companies gained the confidence to invest for the longer term. They began to combine various parts of the system in new ways to create value for a wider group of users. The massive overbuilding of fiber optic telecom networks and other infrastructure during the frenzy left plenty of cheap capacity, keeping the costs of expansion down. It was a great time to be a businessperson and investor.

In contrast, society did not need a bubble to pop to start excoriating AI. Given that the backlash to tech has been going on for a decade, this seems normal to us. But the AI backlash contrasts with the general high regard enjoyed earlier in the cycle by the likes of Bill Gates, Steve Jobs, Jeff Bezos, and others who built big tech businesses. The world hates change, and only gave tech a pass in the ’80s and ’90s because it all still seemed reversible: it could be made to go away if it turned out badly. This gave the early computer innovators some leeway to experiment. Now that everyone knows computers are here to stay, AI is not allowed the same wait-and-see attitude. It is seen as part of the ICT revolution.

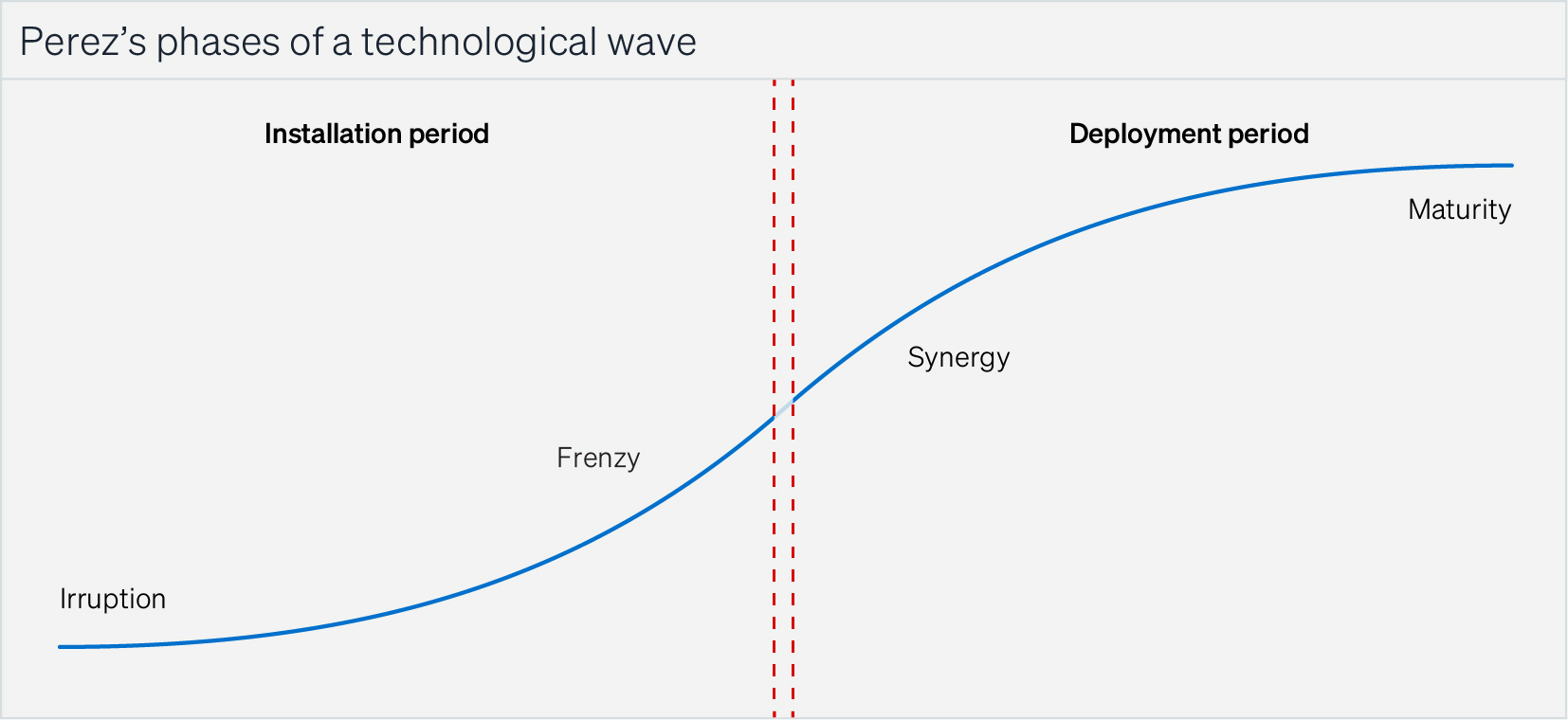

Perez, the economist, breaks each technological wave into four predictable phases: irruption, frenzy, synergy, and maturity. Each has a characteristic investment profile.

The middle two, frenzy and synergy, are the easy ones for investors. Frenzy is when everyone piles in and investors are rewarded for taking big risks on unproven ideas, culminating in the bubble, when paper profits disappear. When rationality returns, the synergy phase begins, as companies make their products usable and productive for a wide array of users. Synergy pays those who are patient, picky, and can bring more than just money to the table.

Irruption and maturity are more difficult to invest in.

Investing in the 1970s was harder than it might look in hindsight. To invest from 1971 through 1975, you had to be either a true believer or a conglomerator with a knuckle-headed diversification strategy. Intel was a great investment, though it looked at first like a previous-wave electronics company. MOS Technology was founded in 1969 to compete with Texas Instruments but sold a majority of itself to Allen-Bradley to stay afloat. Zilog was funded in 1975 by Exxon (Exxon!). Apple was a great investment, but it had none of the hallmarks of what VCs look for, as the PC was still a solution in search of a problem.

It was later irruption, in the early 1980s, when great opportunities proliferated: PC makers (Compaq, Dell), software and operating systems (Microsoft, Electronic Arts, Adobe), peripherals (Seagate), workstations (Sun), and computer stores (Businessland), among others. If you invested in the winners, you did well. But there was still more money than ideas, which meant that it was no golden age for investing. By 1983, there were more than 70 companies competing in the disk drive sector alone, and valuations collapsed. There were plenty of people whose fortunes were established in the 1970s and 1980s, and many VCs made their names in that era. But the biggest advantage to being an irruption-stage investor was building institutional knowledge to invest early and well in the frenzy and synergy phases.

Investing in the maturity phase is even more difficult. In irruption, it’s hard to see what will happen; in maturity, nothing much happens at all. The uncertainty about what will work and how customers and society will react is almost gone. Things are predictable, and everyone acts predictably.

The lack of dynamism allows the successful synergy companies to remain entrenched (see: the Nifty 50 and FAANG), but growth becomes harder. They start to enter each other’s markets, conglomerate, raise prices, and cut costs. The era of products priced to entice new customers ends, and quality suffers. The big companies continue to embrace the idea of revolutionary innovation, but feel the need to control how their advances are used. R&D spending is redirected from product and process innovation toward increasingly fruitless attempts to find ways to extend the current paradigm. Companies frame this as a drive to win, but it’s really a fear of losing.

Innovation can happen during maturity, sometimes spectacularly. But because these innovations only find support if they fit into the current wave’s paradigm, they are easily captured in the dominant companies’ gravity wells. This means making money as an entrepreneur or investor in them is almost impossible. Generative AI is clearly being captured by the dominant ICT companies, which raises the question of whether this time will be different for inventors and investors—a different question from whether AI itself is a revolutionary technology.

Shipping containerization was a late-wave innovation that changed the world, kicked off our modern era of globalization, resulted in profound changes to society and the economy, and contributed to rapid growth in well-being. But there were, perhaps, only one or two people who made real money investing in it.

The year 1956 was late in the previous wave. But that year, the company soon to be known as SeaLand revolutionized freight shipping with the launch of the first containership, the Ideal-X. SeaLand’s founder, Malcom McLean, had an epiphany that the job to be done by truckers, railroads, and shipping lines was to move goods from shipper to destination, not to drive trucks, fill boxcars, or lade boats. SeaLand allowed freight to transfer seamlessly from one mode to another, saving time, making shipping more predictable, and cutting costs—both the costs of loading, unloading, and reloading, and the cost of a ship sitting idly in port as it was loaded and unloaded.[8]

The benefits of containerization, if it could be made to happen, were obvious. Everybody could see the efficiencies, and customers don’t care how something gets to where they can buy it, as long as it does. But longshoremen would lose work, politicians would lose the votes of those who lost work, port authorities would lose the support of the politicians, federal regulators would be blamed for adverse consequences, railroads might lose freight to shipping lines, shipping lines might lose freight to new shipping lines, and it would all cost a mint. Most thought McLean would never be able to make it work.

McLean squeezed through the cracks of the opposition he faced. He bought and retrofitted war surplus ships, lowering costs. He went after the coastal shipping trade, a dying business in the age of the new interstates, to avoid competition. He set up shop in Newark, NJ, rather than the shipping hub of Hell’s Kitchen, to get buy-in from the port authority and avoid Manhattan congestion. And he made a deal with the New York longshoremen’s union, which was only possible because he was a small player who they figured was not a threat.

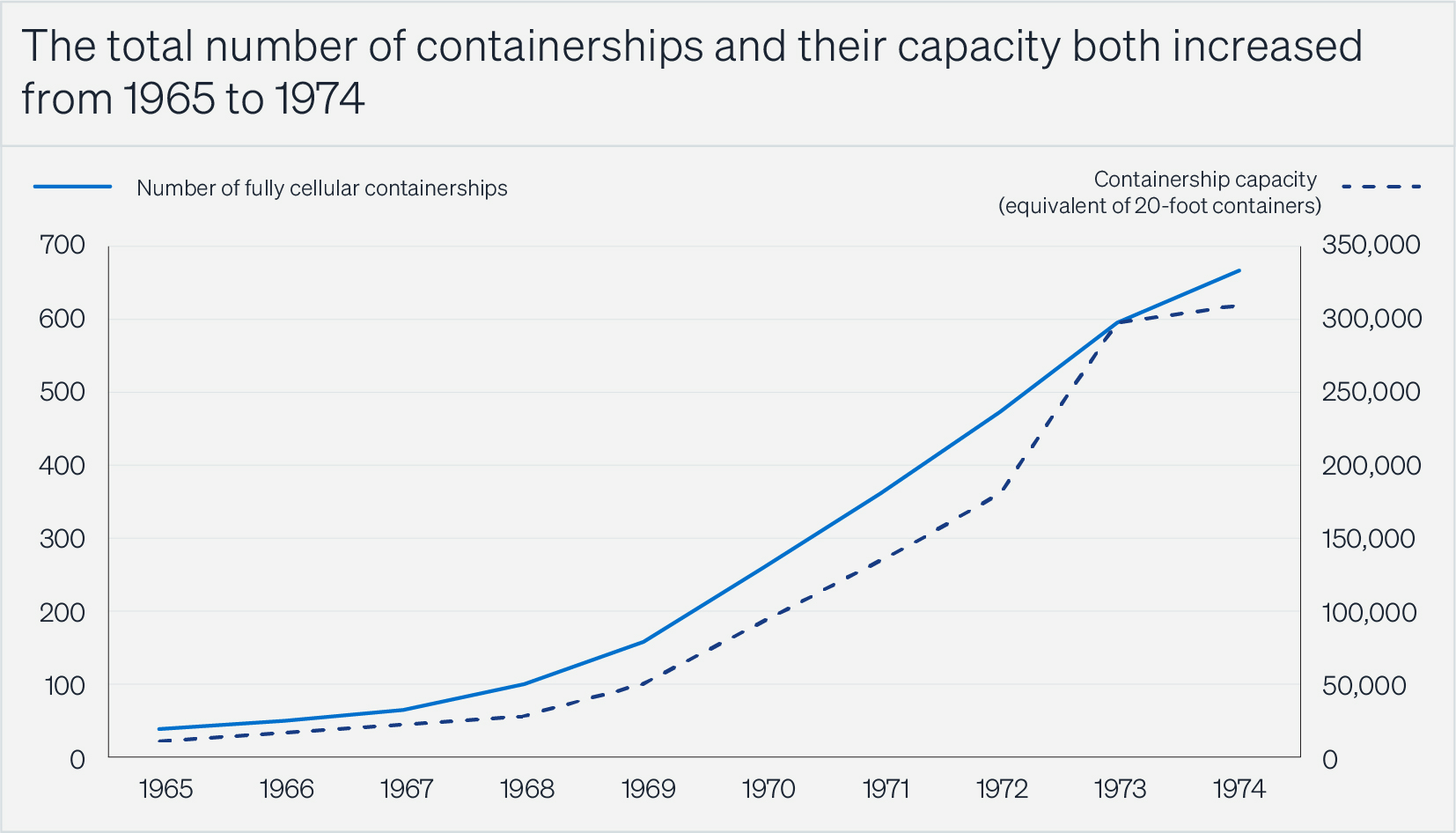

But competitors and regulators moved too quickly for McLean to seize the few barriers to entry that might have been available to him: domination of the ports, exclusive agreements with shippers or other forms of transportation, standardization on proprietary technology, etc.[9] When it started to look like it might work, around 1965, the obvious advantages of containerization meant that every large shipping line entered the business, and competition took off. Even though containerized freight was less than 1% of total trade by 1968, the number of containerships was already ramping fast.[10] Capacity outstripped demand for years.

The increase in competition led to a rate war, which led to squeezed profits, which in turn led to consolidation and cartels. Meanwhile, the cost of building ever-larger container ships and the port facilities to deal with them meant the business became hugely capital intensive. McLean saw the writing on the wall and sold SeaLand to R.J. Reynolds in January 1969. He was, perhaps, the only entrepreneur to get out unscathed.

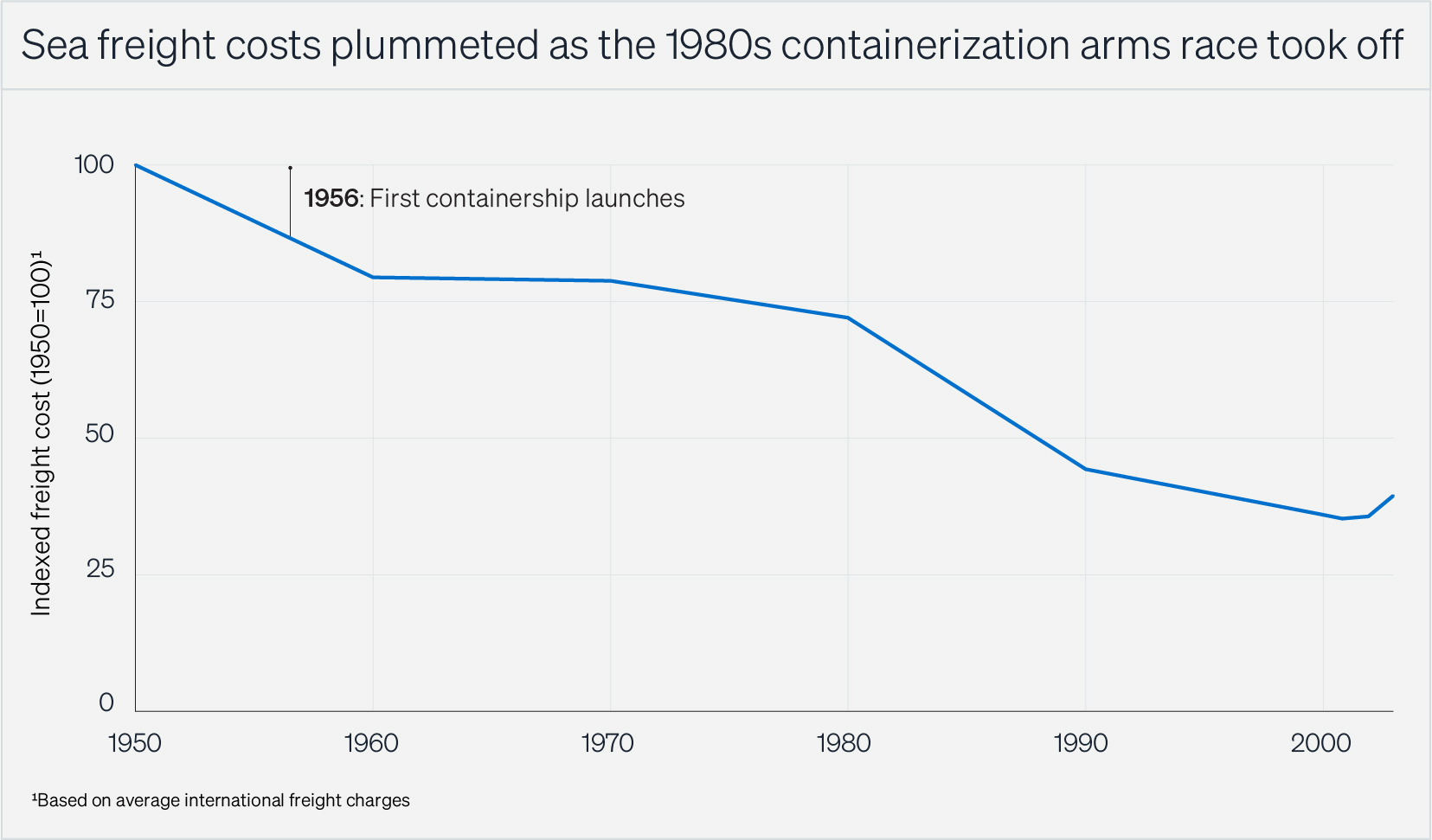

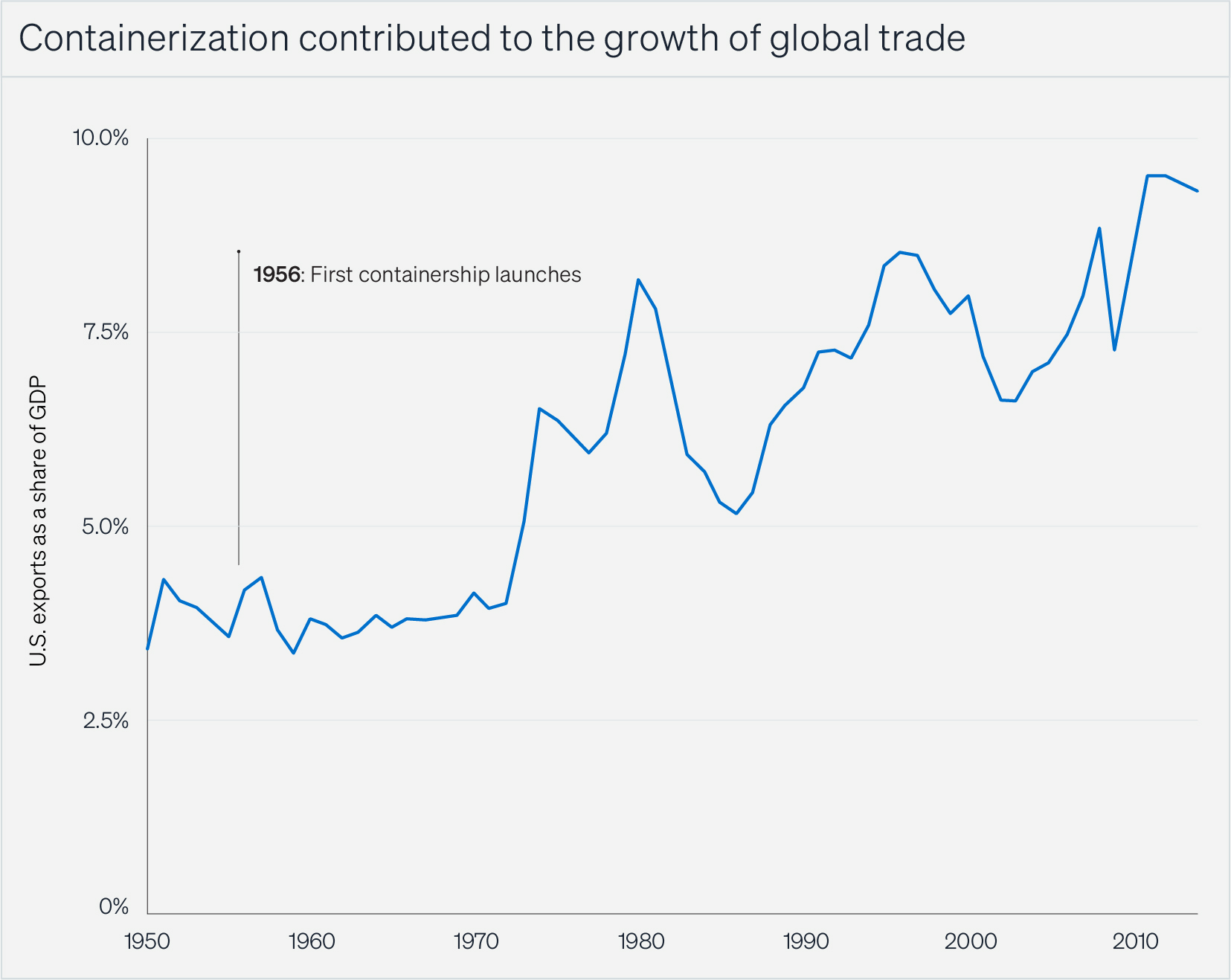

It took a long time for the end-to-end vision to be realized. But around 1980, a dramatic drop began in the cost of sea freight.[11] This contributed to a boom in international trade[12] and allowed manufacturers to move from higher-wage to lower-wage countries, making containerization irreversible.

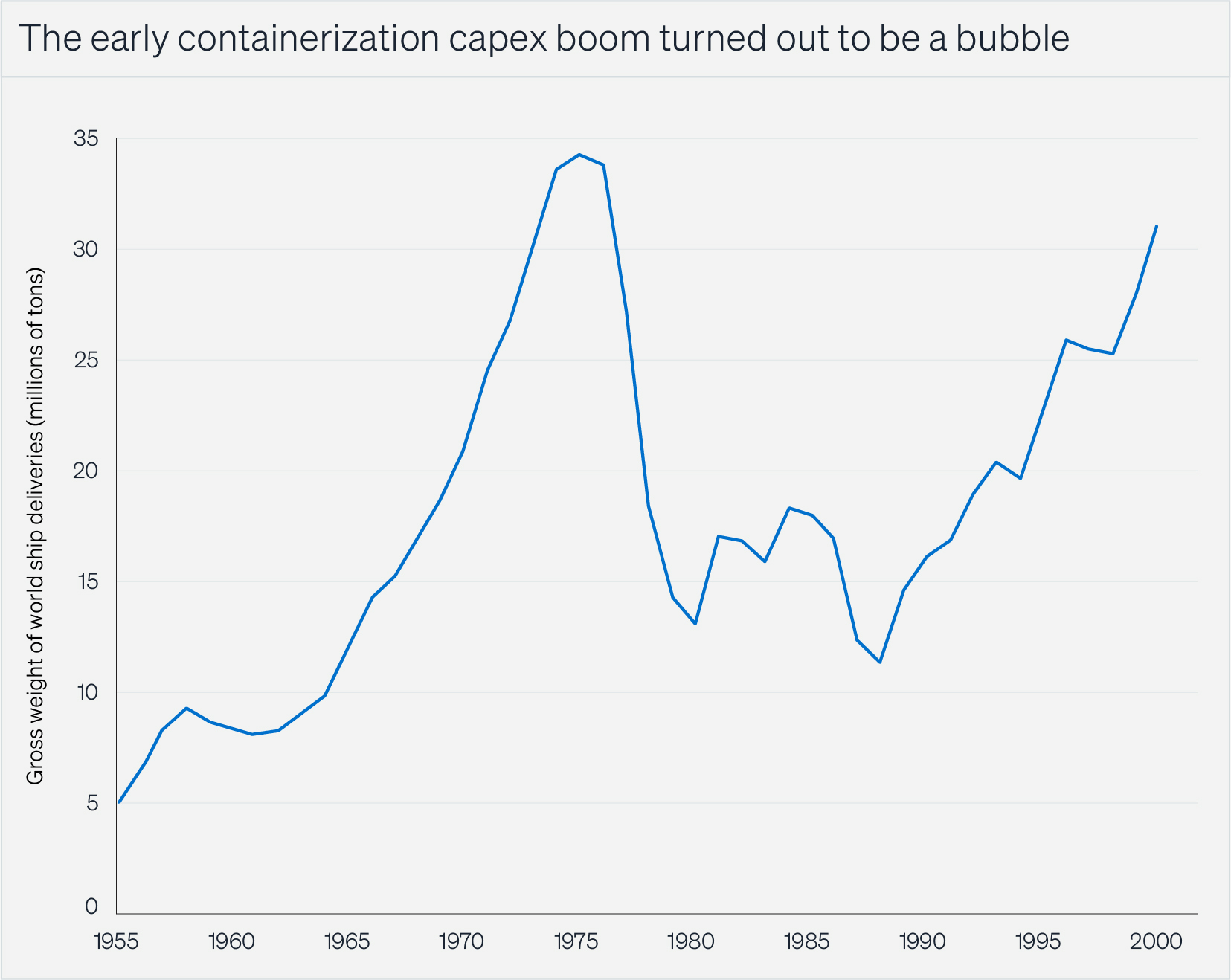

Some people did make money, of course; someone always does. McLean did, as did shipping magnate Daniel Ludwig, who had invested $8.5 million in SeaLand’s predecessor, McLean Industries, at $8.50 per share in 1965 and sold in 1969 for $50 per share.[13] Shipbuilders made money, too: between 1967 and 1972, some $10 billion ($80 billion in 2025 dollars) was spent building containerships. The contractors that built the new container ports also made money. And, later, shipping lines that consolidated and dominated the business, like Maersk and Evergreen, became very large. But, “for R.J. Reynolds, and for other companies that had chased fast growth by buying into container shipping in the late 1960s, their investments brought little but disappointment.”[14] Aside from McLean and Ludwig, it is hard to find anyone who became rich from containerization itself, because competition and capex costs made it hard to grow fast or achieve high margins.
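For a sense of scale, Ludwig’s gain can be worked out from the figures above. The sketch below is illustrative only: it assumes a holding period of exactly four years (1965 to 1969) and ignores dividends, taxes, and any other share transactions, none of which the source specifies. The function names are hypothetical.

```python
def return_multiple(buy_price: float, sell_price: float) -> float:
    """Price-only multiple on invested capital."""
    return sell_price / buy_price


def annualized_return(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by a multiple over a holding period."""
    return multiple ** (1 / years) - 1


invested = 8_500_000   # dollars, from the text
buy_price = 8.50       # dollars per share, from the text
sell_price = 50.00     # dollars per share, from the text
years_held = 4         # ASSUMPTION: exactly 1965 to 1969

shares = invested / buy_price
multiple = return_multiple(buy_price, sell_price)
proceeds = shares * sell_price
cagr = annualized_return(multiple, years_held)

print(f"shares: {shares:,.0f}")
print(f"multiple: {multiple:.1f}x")
print(f"proceeds: ${proceeds:,.0f}")
print(f"annualized: {cagr:.0%}")
```

Under those assumptions, the stake of one million shares works out to a multiple of roughly 5.9x, or about $50 million in proceeds on $8.5 million invested.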

The business ended up being dominated primarily by the previous incumbents, and the margins went to the companies shipping goods, not the ones they shipped through. Companies like IKEA benefited from cheap shipping, going from a provincial Scandinavian company in 1972 to the world’s largest furniture retailer by 2008; container shipping was a perfect fit for IKEA’s flat-pack furniture. Others, like Walmart, used the predictability enabled by containerization to lower inventory and its associated costs.

With hindsight, it’s easy to see how you could have invested in containerization: not in the container shipping industry itself, but in the industries that benefited from containerization. But even here, the success of companies like Walmart, Costco, and Target was coupled with the failure of others. The fallout from containerization set Sears and Woolworth on downward spirals, put the final nail in the coffin of Montgomery Ward and A&P, and drove Macy’s into bankruptcy before it was rescued and downsized by Federated. Meanwhile, in North Carolina, “the furniture capital of the world,” furniture makers tried to compete with IKEA by importing cheap pieces from China. They ended up being replaced by their suppliers.[15]

If there had been more time to build moats, there might have been a few dominant containerization companies, and the people behind them would be at the top of the Forbes 400, while their investors would be legendary. But moats take time to build and, unlike the personal computer, the adoption of containerization wasn’t a surprise—every business with interests at stake had a strategic plan immediately.

The economist Joseph Schumpeter said “perfect competition is and always has been temporarily suspended whenever anything new is being introduced.”[16] But containerization shows this isn’t true at the end of tech waves. And because there is no economic profit during perfect competition, there is no money to be made by innovators during maturity. Like containerization, the introduction of AI did not lead to a period of protected profits for its innovators. It led to an immediate competitive free-for-all.

Let’s grant that generative AI is revolutionary (but also that, as is becoming increasingly clear, this particular tech is now already in an evolutionary stage). It will create a lot of value for the economy, and investors hope to capture some of it. When, who, and how depend on whether AI is the end of the ICT wave, or the beginning of a new one.

If AI had started a new wave, there would have been an extended period of uncertainty and experimentation. There would have been a population of early adopters experimenting with their own models. When thousands or millions of tinkerers use the tech to solve problems in entirely new ways, its uses proliferate. But because today’s experimenters are using models owned by the big AI companies, their ability to fully experiment is limited to what’s allowed by the incumbents, who have no desire to permit an extended challenge to the status quo.

This doesn’t mean AI can’t start the next technological revolution. It might, if experimentation becomes cheap, distributed, and permissionless, as it was for Wozniak cobbling together computers in his garage, Ford building his first internal combustion engine in his kitchen, or Trevithick building his high-pressure steam engine as soon as James Watt’s patents expired. When any would-be innovator can build and train an LLM on their laptop and put it to use in any way their imagination dictates, it might be the seed of the next big set of changes: something revolutionary rather than evolutionary. But until and unless that happens, there can be no irruption.

AI is instead the epitome of the ICT wave. The computing visionaries of the 1960s set out to build a machine that could think, which their successors eventually did, by extending gains in algorithms, chips, data, and data center infrastructure. Like containerization, AI is an extension of something that came before, and therefore no one is surprised by what it can and will do. In the 1970s, it took time for people to wrap their heads around the desirability of powerful and ubiquitous computing. But in 2025, machines that think better than previous machines are easy for people to understand.

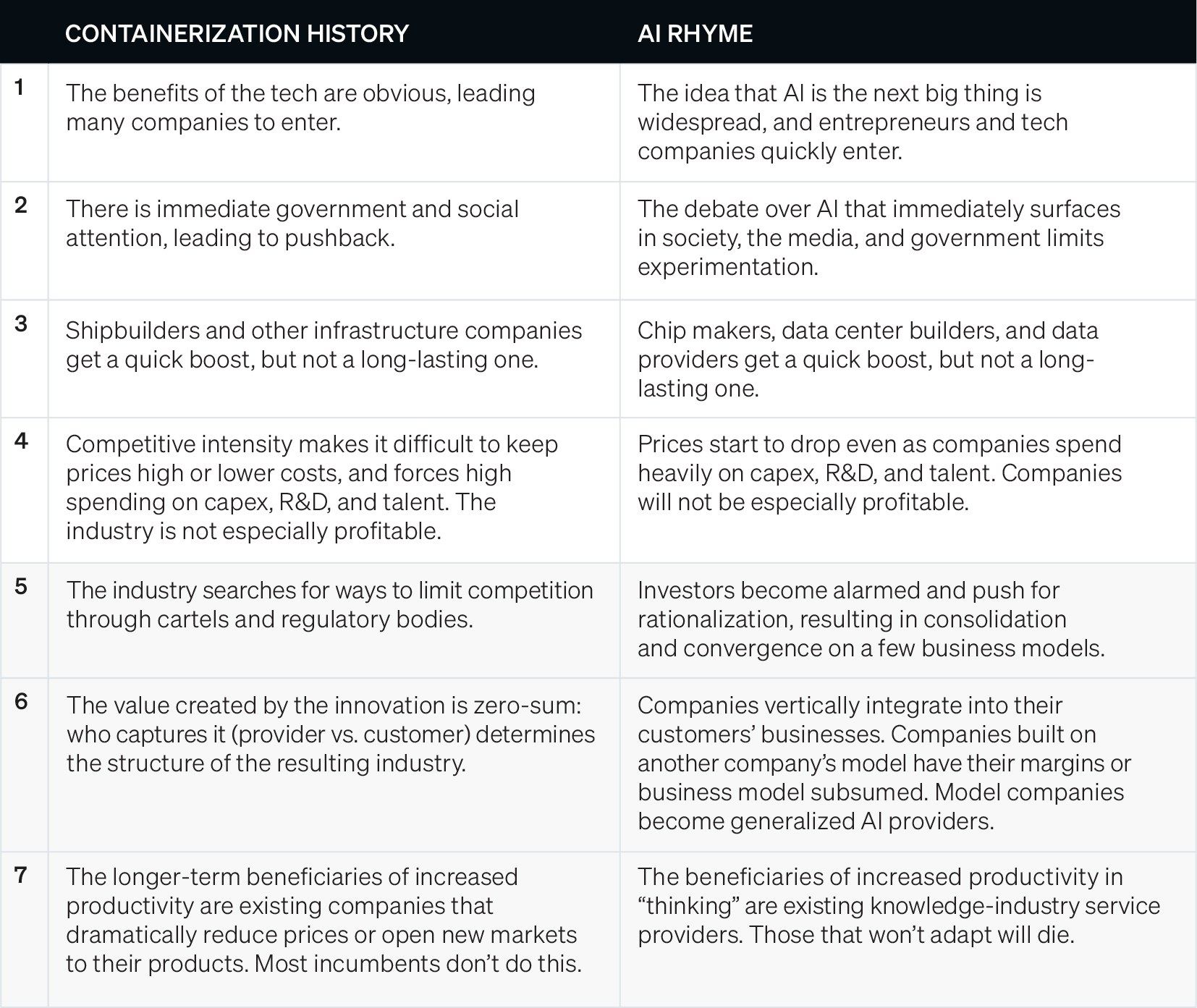

Consider the extent to which the progress of AI rhymes with the business evolution of containerization:

In the “AI rhymes” column, the first four items are already underway. How you should invest depends on whether you believe Nos. 5–7 are next.

Economists are predicting that AI will increase global GDP by somewhere between 1%[17] and more than 7%[18] over the next decade, which is $1–7 trillion of new value created. The big question is where that money will stick as it flows through the value chain.

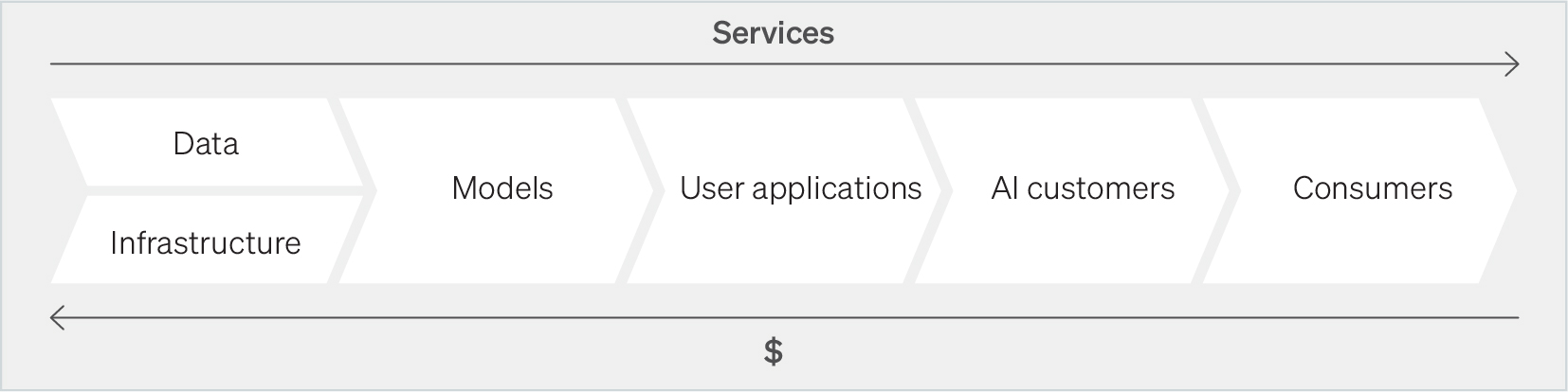

Most AI market overviews have a score or more categories, breaking each of them into customer and industry served. But these will change dramatically over the next few years. You could, instead, just follow the money to simplify the taxonomy of companies:

What the history of containerization suggests is that, if you aren’t already an investor in a model company, you shouldn’t bother. Sam Altman and a few other early movers may make a fortune, as McLean and Ludwig did. But the huge costs of building and running a model, coupled with intense competition, mean there will, in the end, be only a few companies, each funded and owned by the largest tech companies. If you’re already an investor, congratulations: There will be consolidation, so you might get an exit.

Domain-specific models—like Cursor or Harvey—will be part of the consolidation. These are probably the most valuable models. But fine-tuning is relatively cheap, and there are big economies of scope. On the other hand, just as Google had to buy Invite Media in 2010 to figure out how to sell to ad agencies, domain-specific model companies that have earned the trust of their customers will be prime acquisition targets. And although it seems possible that models which generate things other than language—like Midjourney or Runway—might use their somewhat different architecture to carve out a separate technological path, the LLM companies have easily entered this space as well. Whether this applies to companies like Osmo remains to be seen.

While it’s too late to invest in the model companies, the profusion of those using the models to solve specific problems is ongoing: Perplexity, InflectionAI, Writer, Abridge, and a hundred others. But if any of these become very valuable, the model companies will take their earnings, either through discriminatory pricing or vertical integration. Success, in other words, will mean defeat—always a bad thesis. At some point, model companies and app companies will converge: There will simply be AI companies, and only a few of them. There will be some winners, as always, but investments in the app layer as a whole will lose money.

The same caveat applies, however: If an app company can build a customer base or an amazing team, it might be acquired. But these companies aren’t really technology companies at all; they are building a market on spec and have to be priced as such. A further caveat is that there will be investors who make a killing arbitraging FOMO-panicked acquirors willing to massively overpay. But this is not really “investing.”

There might be an investment opportunity in companies that manage the interface between the AI giants and their customers, or protect company data from the model companies—like Hugging Face or Glean—because these businesses are by nature independent of the models. But no analogue in the post-containerization shipping market became very large. Even the successful intermediation companies in the AI space will likely end up mid-sized because the model companies will not allow them to gain strategic leverage—another consequence of the absence of surprise.

When an industry is going to be big but there is uncertainty about how it will play out, it often makes sense to swim upstream to the industry’s suppliers. In the case of AI, this means the chip providers, data companies, and cloud/data center companies: SambaNova, Scale AI, and Lambda, as well as those that have been around for a long time, like Nvidia and Bloomberg.

The case for data is mixed. General data (i.e., things most people know, including everything anyone knew more than, say, 10 years ago, and most of what was learned after that) is a commodity. There may be room for a few companies to do the grunt work of collating and tagging it, but since the collating and tagging might best be done by AI itself, there will not be a lot of pricing leverage. Domain-specific models will need specialist data, and other models will try to answer questions about the current moment. Specific, timely, and hard-to-reproduce data will be valuable. This is not a new market, of course; Bloomberg and others have done well by it. A more concentrated customer base will lower prices for this data, while wider use will raise revenues. On balance, this will probably be a plus for the industry, though not a huge one. There will be new companies built, but only a couple worth investing in.

The high capex of AI companies will primarily be spent with the infrastructure companies. These companies are already valued with this expectation, so there won’t be an upside surprise. But consider that shipbuilding benefited from containerization from 1965 until demand collapsed after about 1973.[19] If AI companies consolidate or otherwise act in concert, even a slight downturn that forces them to conserve cash could turn into a serious, sudden, and long-lasting decline in infrastructure spending. This would leave companies like Nvidia and its emerging competitors—who must all make long-term commitments to suppliers and for capacity expansion—unable to lower costs to match the new, smaller market size. Companies priced for an s-curve are overpriced if there’s a peak and decline.

All of which means that investors shouldn’t swim upstream, but fish downstream: companies whose products rely on achieving high-quality results from somewhat ambiguous information will see increased productivity and higher profits. These sectors include professional services, healthcare, education, financial services, and creative services, which together account for between a third and a half of global GDP and have not seen much increased productivity from automation. AI can help lower costs, but as with containerization, how individual businesses incorporate lower costs into their strategies—and what they decide to do with the savings—will determine success. To put it bluntly, using cost savings to increase profits rather than grow revenue is a loser’s game.

The companies that will benefit most rapidly are those whose strategies are already conditional on lowering costs. IKEA’s longtime strategy was to sell quality furniture for low prices and make it up on volume. After containerization made it possible for the company to go worldwide, IKEA became the world’s largest furniture retailer and Ingvar Kamprad (the IK of IKEA) became a billionaire. Similarly, Walmart, whose strategy was high volume and low prices in underserved markets, benefited from both cost savings and just-in-time supply chains, allowing increased product variety and lower inventory costs.

Today’s knowledge-work companies that already prioritize the same values are the least risky way to bet on AI, but new companies will form or re-form with a high-volume, low-cost strategy, just as Costco did in the early 1980s. New companies will compete with the incumbents, but with a clean slate and hindsight. Regardless, there are few barriers to entry, so each of these firms will face stiff competition and operate in fragmented markets. Experienced management and flawless execution will be key.

Being an entrepreneur will be a fabulous proposition in these sectors. Being an investor will be harder. Companies will not need much private capital, because implementing cost-saving technology is not capital intensive: IKEA never needed to raise risk capital, and Costco raised only one round in 1983 before going public in 1985. As with containerization, there will be a long lag between technology trigger and the best investments. The opportunities will come later.

Stock pickers will also make money, but they need to be choosy. At the high end of projections, an additional 7% in GDP growth over ten years, concentrated within one third of the economy, gives a tailwind of only about 2% per year to these companies, and even less if productivity growth from older ICT products abates. The primary shift in value will be from companies that are not embracing the strategic implications of AI to companies that are, the way Walmart gained at the expense of Sears, which took advantage of cheaper goods prices but did not reinvent itself.
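The 2% figure can be checked with a few lines of arithmetic. The sketch below is a rough illustration, not a forecast: it assumes the entire 7% gain in total GDP lands in the one third of the economy described above and compounds evenly over the decade. The function name is hypothetical.

```python
def annual_tailwind(total_gdp_gain: float, sector_share: float, years: int) -> float:
    """Annualized growth rate if the whole GDP gain accrues to one slice of the economy."""
    # ASSUMPTION: all of the gain is captured by the affected sectors.
    sector_gain = total_gdp_gain / sector_share   # 7% of the whole is 21% of one third
    # ASSUMPTION: the gain compounds evenly across the period.
    return (1 + sector_gain) ** (1 / years) - 1


rate = annual_tailwind(total_gdp_gain=0.07, sector_share=1 / 3, years=10)
print(f"annual tailwind: {rate:.1%}")
```

A 7% gain in the whole economy is a 21% gain for one third of it, and 21% spread over ten years compounds to a little under 2% per year.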

Consumers, however, will be the biggest beneficiaries. Previous waves of mechanization benefited labor productivity in manufacturing, driving prices down and saving consumers money. But increased labor productivity in manufacturing also led to higher manufacturing wages. Wages in services businesses had to rise to compete, even though these businesses did not benefit from productivity gains. This caused the price of services to rise.[20] The share of household spending on food and clothing went from 55% in 1918 to 16% in 2023,[21] but the costs of knowledge-intensive services like healthcare and education have grown well above inflation.

Something similar will happen with AI: Knowledge-intensive services will get cheaper, allowing consumers to buy more of them, while services that require person-to-person interaction will get more expensive, taking up a greater percentage of household spending. This points to obvious opportunities in both. But the big news is that most of the new value created by AI will be captured by consumers, who should see a wider variety of knowledge-intensive goods at reasonable prices, and wider and more affordable access to services like medical care, education, and advice.

There is nothing better than the beginning of a new wave, when the opportunities to envision, invent, and build world-changing companies lead to money, fame, and glory. But there is nothing more dangerous for investors and entrepreneurs than wishful thinking. The lessons learned from investing in tech over the last 50 years are not the right ones to apply now. The way to invest in AI is to think through the implications of knowledge workers becoming more efficient, to imagine what markets this efficiency unlocks, and to invest in those. For decades, the way to make money was to bet on what the new thing was. Now, you have to bet on the opportunities it opens up.

Jerry Neumann is a retired venture investor, writing and teaching about innovation.