What is OpenAI?

I realize you might say "a foundation model lab" or "the company that runs ChatGPT," but that doesn't really give the full picture of everything it’s promised, or claimed, or leaked that it was or would be.

No, really, if you believe its leaks to the press...

- OpenAI is a social media company, this week launching Sora 2, a social feed entirely made up of generative video.

- OpenAI is a workplace productivity company, allegedly working on its own productivity suite to compete with Microsoft.

- OpenAI is a jobs portal, announcing in September it was "developing an AI-powered hiring platform," which it will launch "by mid-2026."

- OpenAI is an ads company, and is apparently trying to hire an ads chief, with the (alleged) intent to start showing ads in ChatGPT "by 2026."

- OpenAI is a company that would sell AI compute like Microsoft Azure or Amazon Web Services, or at least is considering being one, with CFO Sarah Friar telling Bloomberg in August that it is not "actively looking" at such an effort today but will "think about it as a business down the line, for sure."

- OpenAI is a fabless semiconductor design company, launching its own AI chips in, again, 2026 with Broadcom, but only for internal use.

- OpenAI is a consumer hardware company, preparing to launch a device by the end of 2026 or early 2027 and hiring a bunch of Apple people to work on it, as well as considering — again, it’s just leaking random stuff at this point to pump up its value — a smart speaker, a voice recorder and AR glasses.

- OpenAI is also working on its own browser, I guess.

To be clear, many of these are ideas that OpenAI has leaked specifically so the media can continue to pump up its valuation and it can continue to raise the money it needs (at least $1 trillion over the next four or five years), and I don't believe the theoretical (or actual) costs of many of the things I've listed are included.

OpenAI wants you to believe it is everything, because in reality it’s a company bereft of strategy, focus or vision. The GPT-5 upgrade for ChatGPT was a dud, an industry-wide embarrassment for arguably the most-hyped product in AI history, one that (as I revealed a few months ago) costs more to operate than its predecessor, not because of any inherent capability upgrade, but because of how it actually processes the prompts its users provide. Now it's unclear what it is that this company does.

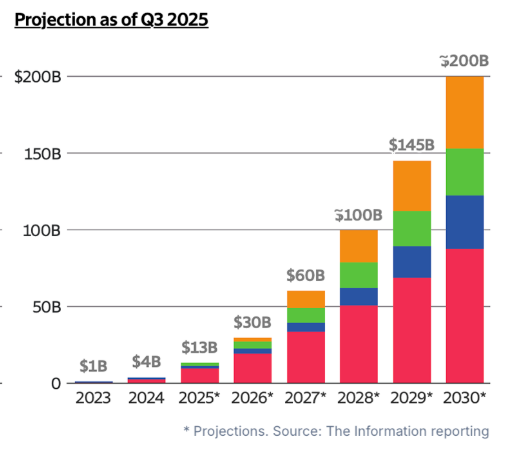

Does it make hardware? Software? Ads? Is it going to lease you GPUs to use for your own AI projects? Is it going to certify you as an AI expert? Notice how I've listed a whole bunch of stuff that isn't ChatGPT, which will, if you look at The Information's reporting of its projections, remain the vast majority of its revenue until 2027, at which point "agents" and "new products including free user monetization" will magically kick in.

OpenAI Is A Boring (and Bad) Business

In reality, OpenAI is an extremely boring (and bad!) software business. It makes the majority of its revenue selling subscriptions to ChatGPT, and apparently had 20 million paid subscribers (as of April) and 5 million business subscribers (as of August, though 500,000 of them are Cal State University seats paid at $2.50 a month).

It also loses incredibly large amounts of money.

OpenAI's Pathetic API Sales Have Effectively Turned It Into Any Other AI Startup

Yes, I realize that OpenAI also sells access to its API, but as you can see from the chart above, it is making a teeny tiny sliver of revenue from it in 2025, though I will also add that this chart has a little bit of green for "agent" revenue, which means it's very likely bullshit. Operator, OpenAI's so-called agent, is barely functional, and I have no idea how anyone would even begin to charge money for it outside of "please try my broken product."

In any case, API sales appear to be a very, very small part of OpenAI's revenue stream, and that heavily suggests a lack of interest in integrating its models at scale.

Worse still, this effectively turns OpenAI into an AI startup.

Think about it: if OpenAI can't make the majority of its money through "innovating" in the development of large language models (LLMs), then it’s just another company plugging LLMs into its software. While ChatGPT may be a very popular product, it is, by definition (and in its name!) a GPT wrapper, with the few differences being that OpenAI pays its own immediate costs, has the people necessary to continue improving its own models, and also continually makes promises to convince people it’s anything other than just another AI startup.

In fact, the only real difference is the amount of money backing it. Otherwise, OpenAI could be literally any foundation model company, and with a lack of real innovation within those models, it’s just another startup trying to find ways to monetize generative AI, an industry that only ever seems to lose money.

As a result, we should start evaluating OpenAI as just another AI startup, as its promises do not appear to mesh with any coherent strategy, other than "we need $1 trillion." There does not seem to be much of a plan on a day-to-day basis, nor does there seem to be one about what OpenAI should be, other than that OpenAI will be a consumer hardware company, a consumer software company, an enterprise SaaS vendor and a data center operator, as well as running a social network.

As I've discussed many times, LLMs are inherently flawed due to their probabilistic nature. "Hallucinations" (when a model authoritatively states something is true when it isn't, or takes an action that seems the most likely course of action even if it isn't the right one) are, according to OpenAI's own research, a "mathematically inevitable" feature of the technology, meaning that there is no fixing their most glaring, obvious problem, even with "perfect data."

I'd wager the reason OpenAI is so eager to build out so much capacity while leaking so many diverse business lines is an attempt to get away from a dark truth: that when you peel away the hype, ChatGPT is a wrapper, every product it makes is a wrapper, and OpenAI is pretty fucking terrible at making products.

Today I'm going to walk you through a fairly unique position: that OpenAI is just another boring AI startup lacking any meaningful product roadmap or strategy, using the press as a tool to pump its bags while very rarely delivering on what it’s promised. It is a company with massive amounts of cash, industrial backing, and brand recognition, and otherwise is, much like its customers, desperately trying to work out how to make money selling products built on top of Large Language Models.

OpenAI lives and dies on its mythology as the center of innovation in the world of AI, yet reality is so much more mediocre. Its revenue growth is slowing, its products are commoditized, its models are hardly state-of-the-art, the overall generative AI industry has lost its sheen, and its killer app is a mythology that has converted a handful of very rich people and very few others.

OpenAI spent, according to The Information, more than 150% ($6.7 billion in costs) of its H1 2025 revenue ($4.3 billion) on research and development, producing the deeply-underwhelming GPT-5 and Sora 2. I estimate that Sora 2 costs it upwards of $5 for each video generation, based on Azure's published rates for the first Sora model (rates that I believe are themselves unprofitable), all so that it can gain a few more users.

To be clear, R&D is good, and useful, and in my experience, the companies that spend deeply on this tend to be the ones that do well. The reason why Huawei has managed to outpace its American rivals in several key areas — like automotive technology and telecommunications — is because it spends around a quarter of its revenue on developing new technologies and entering new markets, rather than stock buybacks and dividends.

The difference is that said R&D spending is both sustainable and useful, and has led to Huawei becoming a much stronger business, even as it languishes on the Commerce Department's Entity List, which effectively cuts it off from US-made or US-origin parts or IP. Considering that OpenAI’s R&D spending was about 38.3% of its cash-on-hand by the end of the period (totalling $17.5bn, which we’ll get to later), and what we’ve seen as a result, it’s hard to describe it as either sustainable or useful.
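The two ratios quoted above can be re-derived from the figures in the text. What follows is only a minimal sketch: it assumes the reported numbers ($6.7 billion in R&D costs, $4.3 billion in revenue, $17.5 billion in cash on hand) are exact, and the variable and function names are illustrative rather than taken from any source.

```python
# Figures as quoted in the article, in USD billions.
# Assumed exact for illustration; the underlying reporting may be rounded.
RD_SPEND_H1_2025 = 6.7
REVENUE_H1_2025 = 4.3
CASH_ON_HAND = 17.5


def share(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return part / whole * 100


rd_vs_revenue = share(RD_SPEND_H1_2025, REVENUE_H1_2025)
rd_vs_cash = share(RD_SPEND_H1_2025, CASH_ON_HAND)

print(f"R&D as % of H1 revenue:   {rd_vs_revenue:.1f}%")
print(f"R&D as % of cash on hand: {rd_vs_cash:.1f}%")
```

On these inputs, R&D comes out at roughly 155.8% of revenue and roughly 38.3% of cash on hand, close to the figures cited in the text.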

OpenAI isn't innovative, it’s exploitative, a giant multi-billion dollar grift attempting to hide how deeply unexciting it is, and how nonsensical it is to continue backing it. Sam Altman is an excellent operator, capable of spreading his mediocre, half-baked mantras about how 2025 was the year AI got smarter than us, or how we'll be building 1GW data centers each week (something that, by my estimations, takes 2.5 years), taking advantage of how many people in the media, markets and global governments don't know a fucking thing about anything.

OpenAI is also getting desperate.

Beneath the surface of the media hype and trillion-dollar promises is a company struggling to maintain relevance, its entire existence built on top of hype and mythology.

And at this rate, I believe it’s going to miss its 2025 revenue projections, all while burning billions more than anyone has anticipated.

Comments

Fully disagree. OpenAI has 800 million active users and has effectively democratized cutting-edge AI for an amazing number of people everywhere. It took much longer for the Internet or Mobile Internet to have such an impact.

So "boring"? Definitely not.

And it's up to a $1bn+ monthly revenue run rate, with no ads turned on. It's the first major consumer tech brand to launch since Facebook. It's an incredible business.

> first major consumer tech brand to launch since Facebook

from my recollection, post-FB $75B+ market cap consumer tech companies (excluding financial ones like Robinhood and Coinbase) include:

Uber, Airbnb, Doordash, Spotify (all also have ~$1bn+ monthly revenue run rate)

Fair comment, I will not fight you on it.

I propose oAI is the first one likely to enter the ranks of Apple, Google, Facebook, though. But it's just a proposal. FWIW they are already 3x Uber's MAU.

By poopiokaka 2025-10-03 19:49 (1 reply): They aren’t making money so they haven’t entered any rank. They have users and revenue but cannot last at current setup. Ticking time bomb

Uber became profitable only very recently: https://www.theverge.com/2024/2/8/24065999/uber-earnings-pro...

By beAbU 2025-10-04 8:11: Yes and look at how much their service has degraded to get there.

By scarface_74 2025-10-03 17:59 (2 replies): Spotify goes back and forth from barely profitable to losing money every quarter. They have to give 70% of their revenue to the record labels and that doesn’t count operating expenses.

As Jobs said about Dropbox, music streaming is a feature not a product

By myroon5 2025-10-03 18:08: I listed multiple candidates so disputing one wouldn't dispute my main point ;)

Hyperbole to say no major consumer tech brands have launched for decades

> back and forth from barely profitable to losing money every quarter

I would be shocked if OpenAI was not in a similar (or worse) position.

By degamad 2025-10-06 4:02: I would be shocked if OpenAI was profitable in any quarter...

By ElijahLynn 2025-10-03 19:21 (1 reply): So so so happy about the "no ads" part and do really hope there is a paid option to keep no ads forever. And hopefully the paid subscriptions keep the ads off the free plans for those who aren't privileged enough to pay for it.

By somethoughts 2025-10-03 19:32 (1 reply): My hot take is that it will probably follow the Netflix model of pricing once the VC money wants to turn on the profit switch.

Originally Netflix was a single tier at $9.99 with no ads. As ZIRP ended and investors told Netflix its VC-like honeymoon period was over, ads were introduced at $6.99, the basic no-ad tier went to $15.99 and Premium went to $19.99.

Currently Netflix ad-supported is $7.99, ad-free is $17.99 and Premium is $24.99.

Mapping that on to OpenAI pricing - ChatGPT will be ~$17.99 for ad supported, ~$49.99 for ad free and ~$599 for Pro.

By bryanlarsen 2025-10-03 20:08 (1 reply): Netflix has lots of submarine (product placement) ads that you get even on ad-free plans. I expect OpenAI to follow that model too, except it'll be much worse.

By warkdarrior 2025-10-03 20:26: Likely ChatGPT's answers will be slightly modified to mention (in the right context) references to their advertisers. It will be subtle, non-obvious.

By throw219080123 2025-10-03 17:48 (4 replies): Zero moat.

I don't completely agree. Brand value is huge. Product culture matters.

But say you're correct, and follow the reasoning from there: posit "All frontier model companies are in a red queen's race."

If it's a true red queen's race, then some firms (those with the worst capital structure / costs) will drop out. The remaining firms will trend toward 10%-ish net income - just over cost of capital, basically.

Do you think inference demand and spend will stay stable, or grow? Raw profits could increase from here: if inference demand grows 8x, then oAI, even as margins go down from 80% to 10%, would keep making $10bn or so a year in FCF at current spend; they'd decide if they wanted that to go into R&D or just enjoy it, or acquire smaller competitors.

Things you'd have to believe for it to be a true red queen's race:

* There is no liftoff - AGI and ASI will not happen; instead we'll just incrementally get logarithmically better.

* There is no efficiency edge possible for R&D teams to create/discover that would make for a training / inference breakaway in terms of economics

* All product delivery will become truly commoditized, and customers will not care what brand AI they are delivered

* The world's inference demand will not be a case of the Jevons paradox as competition and innovation drive inference costs down, and therefore we are close to peak inference demand.

Anyway, based on my answers to the above questions, oAI seems like a nice bet, and I'd make it if I could. Even the most "inference doomerish" scenario (capital markets dry up, inference demand stabilizes, R&D progress stops) still leaves oAI in a very, very good position in the US, in my opinion.
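The margin arithmetic in the comment above (demand growing 8x while margins fall from 80% to 10%) can be checked with a short sketch. It rests on assumptions that do not come from OpenAI's financials: a hypothetical revenue base of $12.5 billion a year, chosen only so that an 80% margin yields the "$10bn or so" the commenter mentions; revenue scaling one-for-one with demand; and margin times revenue as a crude stand-in for free cash flow.

```python
def profit(revenue_bn: float, margin: float) -> float:
    """Profit in USD billions at a given margin (a crude stand-in for FCF)."""
    return revenue_bn * margin


# Hypothetical baseline, picked so that an 80% margin gives $10bn a year.
BASE_REVENUE_BN = 12.5
DEMAND_MULTIPLE = 8  # assumption: revenue scales one-for-one with demand

before = profit(BASE_REVENUE_BN, 0.80)
# Demand grows 8x while margins compress from 80% to 10%.
after = profit(BASE_REVENUE_BN * DEMAND_MULTIPLE, 0.10)

print(f"before: ${before:.1f}bn a year, after: ${after:.1f}bn a year")
```

Under those assumptions the two figures match, because an eightfold increase in volume exactly offsets an eightfold drop in margin. If prices fall faster than demand grows, revenue would not scale this way and the result would not hold.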

By dabockster 2025-10-03 18:18 (1 reply): The moat, imo, is mostly the tooling on top of the model. ChatGPT's thinking and deep research modes are still superior to the competition. But as the models themselves get more and more efficient to run, you won't necessarily need to rent them or rent a data center to run them. Alibaba's Qwen mixture of experts models are living proof that you can have GPT levels of raw inference on a gaming computer right now. How are these AI firms going to adapt once someone is able to run about 90% of raw OpenAI capability on a quad core laptop at 250-300 watts max power consumption?

By vessenes 2025-10-03 19:17: I think one answer is that they'll have moved farther up the chain; agent training is this year, agent-managing-agents training is next year. The bottom of the chain inference could be Qwen or whatever for certain tasks, but you're going to have a hard and delayed time getting the open models to manage this stuff.

Futures like that are why Anthropic and oAI put out stats like how long the agents can code unattended. The dream is "infinite time".

Huge brand moat. Consumers around the world equate AI with ChatGPT. That kind of recognition is an extremely difficult thing to pull off, and also hard to unseat as long as they play their cards right.

"Brand moat" is not an actual economic concept. Moats indicate how easy/hard it is to switch to a competitor. If OpenAI does something user-adversarial, it takes two seconds to switch to Anthropic/Gemini (the exception being Enterprise contracts/lock-in, which is exactly why AI companies prioritize that). The entire reason that there are race-to-the-bottom price wars among LLM companies is that it's trivial for most people to switch to whatever's cheapest.

Brand loyalty and users not having sufficient incentive by default to switch to a competitor is something else. OpenAI has lost a lot of money to ensure no such incentive forms.

By corentin88 2025-10-03 18:57 (3 replies): McDonald’s has brand moat. So does Coca-Cola. And many more products. The switching cost is null, but the brand does it all.

Again, that's brand loyalty, not a brand moat.

Moats, as noted in Google's "We Have no Moat, and Neither Does OpenAI" memo that made the discussion of moats relevant in AI circles, have a specific economic definition.

By Sammi 2025-10-03 21:12: The Seven Powers is considered an authoritative source on business moats.

https://www.goodreads.com/book/show/32816087-7-powers

It has branding as one of the seven and uses Coca-Cola as an example.

By qcnguy 2025-10-04 11:09: Switching costs only make sense to talk about for fully online businesses. The "switching cost" for McDonalds depends heavily on whether there's a Burger King nearby. If there isn't then your "switching cost" might now be a 30 minute drive, which is very much a moat.

By Incipient 2025-10-04 13:19: That's not entirely true. They have an 'infinite' product moat: no one can reproduce a Big Mac. Essentially every AI model is now 'the same' (cue debate on this). The only way they can build a moat is by adding features beyond the model that lock people in.

The concept of ‘moat’ comes out of marketing - it was a concept in marketing for decades before Warren Buffett coined the term economic moat. Brand moat had been part of marketing for years and is a fully recognized and researched concept. It’s even been researched with fMRIs.

You may not see it, but OpenAI’s brand has value. To a large portion of the less technical world, ChatGPT is AI.

By poopiokaka 2025-10-03 19:52 (1 reply): Still not a moat tho

A moat can be being the largest in a field, as is often the case with Buffett investments, e.g. Coca-Cola, Apple, Geico.

By smcin 2025-10-04 19:51: Nokia's global market share was ~50% in smartphones back in 2007. Remember that?

Comparing "brand moat" in real-world restaurant vs online services where there's no actual barrier to changing service is silly. Doubly silly when they're free users, so they're not customers. (And then there are also end-users when OpenAI is bundled or embedded, e.g. dating/chatbot services).

McDonald's has lock-in and inertia through its franchisees occupying key real-estate locations, media and film tie-ins, promotions etc. Those are physical moats, way beyond a conceptual "brand moat" (without being able to see how Hamilton Wright Helmer's book characterizes those).

By trcf22 2025-10-03 18:27: I wouldn’t necessarily say so. I guess that’s why they are trying to « pulse » people and « learn » from you instead of just providing decent unbiased answers.

In Europe, most companies and governments are pushing for either Mistral or open-source models.

Most devs, who, if I understand it correctly, are pretty much the only customers willing to pay $100+ a month, will change in a matter of minutes if a better model kicks in.

And they lose money on pretty much all usage.

To me a company like Anthropic, which mostly focuses on a target audience and does research on bias, equity and such (very leading research but still), has a much better moat.

By nurettin 2025-10-05 13:19: Please stop giving them ideas.

OpenAI does not have 800m users.

It has 20m paid users and ~ 780m free users. The free users are not at all sticky and can and will bounce to a competitor. (What % of free users converted to paid in 2025? vs bounced?) That is not a moat. The 20m paid users in 2025 is up from 15.5m in 2024.

Forget about the free tier users, they'll disappear. All this jousting about numbers on the free tier sounds reminiscent of Sun Microsystems chirpily quoting "millions and billions of installed base" back in the Java wars, and even including embedded 8-bit controllers.

For people saying OpenAI could get to $100bn revenue, that would need 20m paid users x $5000/yr (about double the $2,400/yr that the current Pro $200/mth tier costs), but it looks like they must be discounting it currently. And that was before Anthropic undercut them on price. Or other competitors.
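The per-user arithmetic behind this comment can be written out as a quick check. This is only a sketch using the figures quoted in the thread (20 million paid users, a $100bn revenue target, a $200 a month Pro tier); the variable names are illustrative, and it assumes every paid user pays the same annual amount.

```python
# Figures quoted in the comment thread; assumed for illustration only.
PAID_USERS = 20_000_000    # paid subscribers
TARGET_REVENUE = 100e9     # hoped-for annual revenue, USD
PRO_MONTHLY_PRICE = 200    # Pro tier list price, USD per month

# Revenue each paid user would need to generate to hit the target.
required_per_user = TARGET_REVENUE / PAID_USERS
# What a Pro subscriber pays per year at list price.
pro_annual = PRO_MONTHLY_PRICE * 12
# How many times the Pro list price each user would need to pay.
multiple_of_pro = required_per_user / pro_annual

print(f"required per paid user: ${required_per_user:,.0f}/yr")
print(f"Pro tier at list price: ${pro_annual:,}/yr")
print(f"multiple of Pro price:  {multiple_of_pro:.2f}x")
```

On these inputs each paid user would need to bring in $5,000 a year, roughly twice the $2,400 a Pro subscriber pays at list price, which makes the gap larger than the comment's rough comparison suggests.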

By famouswaffles 2025-10-06 21:15 (1 reply): Free users are users. Google search is free, though ad monetized. There's nothing stopping OpenAI from monetizing free users with ads, and in fact it plans to.

>The free users are not at all sticky and can and will bounce to a competitor.

If you really believe this, that just shows how poor your understanding of the consumer LLM space is.

As it is, ChatGPT (the app) spends most of its compute on non-work messages (approx 1.9B per day vs 716M for work)[0]. Between ongoing conversations that users return to and the surfacing of specific memories from past chats, these conversations have become increasingly personalized. Suddenly, there is a lot of personal data that you rely on it having, which makes the product better. You cannot just plop over to Gemini and replicate this.

[0] https://www.nber.org/system/files/working_papers/w34255/w342...

- for code-generation, OpenAI was overtaken by Anthropic

- your comment about lock-in for existing users only applies historically to existing users.

- Sora 2 is a major pivot that signals what segment OpenAI is/isn't targeting next: Scott Galloway was saying today it's not intended to be used by the 99% of casual users, who are content consumers, but only by content creators and studios.

By famouswaffles 2025-10-07 12:44 (1 reply): > for code-generation, OpenAI was overtaken by Anthropic

And that's nice for them.

> your comment about lock-in for existing users only applies historically to existing users.

ChatGPT is the brand name for consumer LLM apps. They are getting the majority of new subscribers as well. Their competitors - Claude, Gemini are nowhere near. chatgpt.com is the 5th most visited site on the planet.

Perhaps for as long as the base tier remains free and ad-free while burning $8+ billion/year, and for as long as they can continue funding that with circular trades with public stocks such as this week's Nvidia and AMD deals.

You're aware they already announced they'll add ads in 2026.

And the circular trades are already rattling public markets.

How do they monetize users on the base tier, to any extent? By adding e-commerce? And once they add ads how do they avoid that compromising the integrity of the product?

By famouswaffles 2025-10-09 4:46: > How do they monetize users on the base tier, to any extent? By adding e-commerce? And once they add ads how do they avoid that compromising the integrity of the product?

Netflix introduced ads and it quickly became their most popular tier. The vast majority of people don't care about ads unless it's really obnoxious.

By didip 2025-10-03 18:21: I am with you, and they still have so many dials to tweak. Ads are one of the big dials.

Training costs can be brought down. New algorithms can still be invented. So much headroom.

And this is not just for OpenAI. I think Anthropic and Gemini also have similar room to grow.

By ActionHank 2025-10-03 17:50: Using inventions from other people or those who have now left the company.

They have no moat, their competitors are building equivalent or better products.

The point of the article is that they are a bad business because it doesn't pan out long term if they follow the same path.

By zapataband2 2025-10-03 18:01 (1 reply): You mean it's thanks to the incredible invention known as the Internet that they were able to "democratize cutting-edge AI to an amazing number of people"

OpenAI didn't build the delivery system; they built a chat app.

By fragmede 2025-10-04 15:15: AOL had a chat app too. There's something special about OpenAI's chat app though. I can't quite put my finger on it though.

By godelski 2025-10-03 20:07: That's a very different definition of boring than I'd use. Something can be both popular and boring.

I also wouldn't say "democratized", more like popularized or made accessible. Though I'm more nitpicking here.

By notarobot123 2025-10-03 19:33: productized != democratized

By justapassenger 2025-10-03 18:25 (3 replies): They moved the industry, that's for sure.

But at this point - there's nothing really THAT special about them compared to their competition.

By rco8786 2025-10-03 18:56: You can say the same thing about any number of hugely popular and profitable companies in the world.

By ThatMedicIsASpy 2025-10-03 19:58: They changed the video game Dota 2 permanently. Their bots could not control a shared unit (courier) among themselves, so bot matches against their AI had special rules like everyone having their own. Not long after, the game was changed forever.

As a player for over 20 years this will be a core memory of OpenAI. Along with not living up to the name.

By IgorPartola 2025-10-03 18:48 (3 replies): Apple has physical stores that will provide you timely top notch customer service. While not perfect, their mobile App Store is the best available in terms of curation and quality. Their hardware is not so diverse, so it is stable for long term use. And they have the mindshare in a way that is hard to move off of.

Let’s say Google or Anthropic release a new model that is significantly cheaper and/or smarter than an OpenAI one, nobody would stick to OpenAI. There is nearly zero cost to switching and it is a commodity product.

By t0mas88 2025-10-03 20:32: Their API product is easy to switch away from but their consumer product (which is by far the biggest part of their revenue) has much better market share and brand recognition than others. I've never heard anyone outside of tech use Gemini or Copilot or X AI outside of work while they all know ChatGPT.

Anecdata but even in work environments I hear mostly complaints about having to use Copilot due to policy and preferring ChatGPT. Which still means Copilot is in a better place than Gemini, because as far as I can tell absolutely nobody even talks about that or uses it.

By hluska 2025-10-03 19:36: There is only a zero cost to switching if a company is so perfectly run that everyone involved comes to the same conclusion at the same time, there are no meetings and no egos.

The human side is impossible to cost ahead of time because it’s unpredictable and when it goes bad, it goes very bad. It’s kind of like pork - you’ll likely be okay but if you’re not, you’re going to have a shitty time.

Let's say Google release a new phone that is significantly cheaper and/or smarter than an Apple one. nobody would stick to apple. There is nearly zero cost to switching and it is a commodity product.

The AI market, much like the phone market, is not a winner take all. There's plenty of room for multiple $100B/$T companies to "win" together.

> Let's say Google release a new phone that is significantly cheaper and/or smarter than an Apple one. nobody would stick to apple.

This is not at all how the consumer phone market works. Price and “smarts” are not the only factors that go into phone decisions. There are ecosystem factors & messaging networks that add significant friction to switching. The deeper you are into one system the harder it is to switch.

By trenchpilgrim 2025-10-03 19:24: e.g. I am on iPhone and the rest of my family is on Android. The group chat experience is significantly degraded, my videos look like 2003 flip phone videos. With my iPhone-using friends, everything is high resolution.

By rco8786 2025-10-03 22:27: Cool now apply that same logic to AI

By trenchpilgrim 2025-10-03 19:02: > Let's say Google release a new phone that is significantly cheaper and/or smarter than an Apple one. nobody would stick to apple.

I don't think this is true over the short to mid term. Apple is a status symbol to the point that Android users are bullied over it in schools and dating apps. It would take years to reverse the perception.

There's a huge "cost" in switching when you are tied to one ecosystem (iOS vs. Android). How will you transfer all your data?

By rco8786 2025-10-03 22:36: You’re aware that LLMs all have persistent memory now and personalize themselves to you over time right? You can’t transfer that from OAI to Anthropic.

By ink_13 2025-10-03 19:12: Pixel phones exist (and have for some time!) yet people still buy iPhones

It's going to be one of the most consequential companies in human history

By lowsong 2025-10-03 19:36: I fully agree. People are going to be pointing to OpenAI as a warning of the dangers of the tech hype cycle for decades after its collapse.

By poopiokaka 2025-10-03 19:53: Yeah bc of the global warming trend it started and its epic collapse

Idk, it's a company with 4.5B in revenues in H1 2025.

It's not insane numbers but it's not bad either. YouTube had those revenues in...2018. 12 years after launching.

There's definitely a huge upside potential in OpenAI. Of course they are burning money at crazy rates, but it's not hard to see why investors are pouring money into it.

By mossTechnician 2025-10-03 17:33 (1 reply): The insane numbers are the ones you find when you look at their promises, like reaching $125 billion in revenue by 2029 (which they predict will be the first year they are profitable) https://www.reuters.com/technology/artificial-intelligence/o...

By senordevnyc 2025-10-03 19:15: How credible would you have found their claims in 2021 that by 2025 they’d be doing north of ten billion in revenue?

> Idk, it's a company with 4.5B in revenues in H1 2025.

giving away dollar bills for a nickel each is not particularly impressive

I would be pretty impressed by anyone who managed to do that nominally. Moving a dozen billion dollars alone seems not trivial to do.

By dreamcompiler 2025-10-03 18:00: Blowing a giant hole in Hoover Dam while somebody pees in Lake Mead would also be impressive. It just won't stay impressive for very long.

Even if the guy peeing is a world champion urinator named Sam.

By blibble 2025-10-03 20:30: turns out losing money scales well

you can even pay people to help you out, and that helps even more!

By fragmede 2025-10-04 4:12: It is when no one knows they're dollar bills. Obviously, I take those dollar bills to the bank and make $0.95. Easy money. But how about when it's not a dollar bill, but a conversation with a robot? And that robot lies and kinda sucks? Why would anyone even pay nickels to see that show? Haven't they heard there's free porn on the Internet they can watch? Free! If people are getting $20 or even $200 a month's worth of something out of the robot that's kinda dumb and lies, to the tune of $4.5B in 6 months, which works out to be $750 million/month, it seems that, contrary to our priors that this robot is dumb and kinda sucks, it maybe isn't quite that dumb, even if it does lie occasionally?

By epolanski 2025-10-03 22:07: Okay, but is it so hard to consider the view of those who think that they can 5/10/20x their current revenues without seeing similar ballooning in costs long term?

It's an insane number considering how little they monetize it. Free users are not even seeing ads rn and they already have 4.5B revenue. I think 100B by 2029 is a very conservative number.

Sure but they're not selling ads because the lack of ads is the unique selling point of the consumer product. It's a loss leader to build the brand for the b2b / gov stuff.

If they junk up the consumer experience too much, users can just switch to Google, who obviously is the behemoth in the ad space.

Obviously there's money to be made there but they have no moat - I feel like despite the first mover advantage their position is tenuous and ads risk disrupting that edge with consumers.

But their edge with consumers is huge. Just like "to Google" is a verb for web search for many consumers, ChatGPT is AI to them.

By degamad 2025-10-064:10 Some of us remember when Google was just an upstart and anyone who wanted to do anything serious used Altavista or MetaCrawler. Even the behemoths can get taken out.

I'm in awe they are still allowing free users at all. And I'm one of them. The free tier is enough for me to use it as a helper at work, and I'd probably pay for it tomorrow if they cut off the free tier.

By sdesol 2025-10-0318:14 > I'm in awe they are still allowing free users at all.

I am not.

> The free tier is enough for me to use it as a helper at work, and I'd probably pay for it tomorrow if they cut off the free tier.

You are sort of proving the point that this isn't crazy. They want to be the dealer of choice and they can afford to give you the hit now for free.

By wodenokoto 2025-10-03 20:40 It’s not that they are “allowing free users at all”; they are expanding their free offerings. Last year I paid $20/month for ChatGPT. This year I haven’t paid anything though my usage has only increased.

> I'd probably pay for it tomorrow if they cut off the free tier.

Why would you pay if you can use a competitor for free?

By silisili 2025-10-045:58 I've used them all, and they all have their place I guess.

ChatGPT is far and away my favorite for quick questions you'd ask the genius coworker next to you. For me, nothing else even comes close wrt speed and accuracy. So for that, I'd gladly pay.

Don't get me wrong, Claude is a marvel and Deepseek punches above its weight, but neither compares on stuff like 'write me a sql query that does these 30 things as efficiently as possible.'. ChatGPT will output an answer with explanations for each line by the time Claude inevitably times out...again.

By dfsegoat 2025-10-0318:15 ...not monetized yet: Can't find the post, but a prev. HN post had a link to an article showing that OpenAI had hired someone from Meta's ad service leadership - so I took that to mean it's a matter of time.

edit: believe it was Fidji Simo et al.

https://www.pymnts.com/artificial-intelligence-2/2025/openai...

By thinkingtoilet 2025-10-03 17:42 It's not hard to make 4.5B when you lose 13.5B. If you give me 18B, I would bet I could lose 13.5B no problem.
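
The relationship between the three figures in the comment above can be sketched as follows. The 4.5 and 13.5 values are the ones quoted in the thread. Treating the loss as simply spend minus revenue (no other income or one-off items) is a simplifying assumption, and `implied_spend` is a hypothetical helper name.

```python
REVENUE_B = 4.5   # $B revenue, H1 2025, as quoted in the thread
LOSS_B = 13.5     # $B loss, as quoted in the thread


def implied_spend(revenue: float, loss: float) -> float:
    """Total spend consistent with a given revenue and net loss.

    Simplifying assumption: loss = spend - revenue, nothing else.
    """
    return revenue + loss


spend = implied_spend(REVENUE_B, LOSS_B)
print(f"implied spend: ${spend}B")
print(f"spend per dollar of revenue: {spend / REVENUE_B:.1f}")
print(f"loss per dollar of revenue: {LOSS_B / REVENUE_B:.1f}")
```

On these numbers the implied spend is the 18B the comment mentions: four dollars out for every dollar in, or a loss of three dollars per dollar of revenue.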

It is hard though. Getting people to hand $4.5B to a company is difficult no matter how much money you are losing in the process.

I mean sure, you can get there instantly if you say "click here to buy $100 for $50", but that's not what's happening here - at least not that blatantly.

By hiq 2025-10-0323:10 I get what you're saying, and it's especially interesting if revenue grows faster than costs, but for private entities it's harder to tell what the actual dynamics are. We don't really have the breakdown of the revenues, do we?

By pizlonator 2025-10-03 17:23 > it's a company with 4.5B in revenues in H1 2025

That's a lot of money to be getting from a subscription business and no ads for the free tier

Not hard to see upside here

By scarface_74 2025-10-03 17:58 It’s when they are losing four times as much. Are their marginal costs per subscriber even positive?

By dabockster 2025-10-0318:14 Yeah, how much profit will they make if they're able to go for-profit? Revenue doesn't tell me anything.

Didn't they post a loss of $5 billion last year, and aren't they on track for a loss of $8-9 billion this year?

By saltyoldman 2025-10-03 18:02 Even if that's the case, they have eaten multiple times that amount of other companies' lunch. Companies that currently use ads, whereas ChatGPT does not (but will).

Have they?

GOOG is at record highs, FB is at record highs, MSFT is at record highs

As a reminder, even Apple didn't hit 1T market cap until late 2018. We didn't get a second in the 4 comma club until mid 2019 with MSFT. Google and Facebook in 2021.

And now we have 4 companies above 3T and 11 in the 4 comma club. Back when the iPhone was released oil companies were at the top and they were barely hitting 500B.

So yeah, I don't think anyone has really been displaced. Nvidia at up, Broadcom at 7, and TSMC at 9 indicate that displacement might occur, but that's also not the displacement people are talking about.

By rockercoaster 2025-10-03 20:57 I don't entirely know what to make of a very small number of companies' valuations going sky-high that fast (and a few completely without any apparent connection to the fundamentals or even the best-plausible-case mid-term future of those fundamentals, like Tesla) but I can't help but think it means something is extremely broken in the economy, and it's not going to end well.

Maybe we all should have been a little more proactively freaked out when dividends went from standard to all but extinct, and nobody in the investor class seemed to mind... like, it seems that the balance between "owning things that directly make money through productive activity" and "owning things that I expect to go up in value" has gotten completely out of whack in favor of the latter.

By godelski 2025-10-041:47 My guess? Hype. All the companies at the top have a lot of hype. I don't think that explains everything, but I believe it is an important factor. I also think with tech we've really entered a Lemon Market. It is very difficult to tell the quality of products prior to purchase. This is even more true with the loss of physical retail. I actually really miss stores like Sharper Image. Not because I want to buy the over priced stuff, but because you would go into those stores and try things.

I definitely think the economy has shifted and we can see it in our sector. The old deal used to be that we could make good products and good profits. But now "the customer" is not the person that buys the product, it is the shareholder. Shareholder profits should reflect the market value of the product being sold to customers, but they don't have to. So if we're just trying to maximize profits then I think there is no surprise when these things start to diverge.

Ed... I wrote him a long note about how wrong his analysis on oAI was earlier this year. He wrote back and said "LOL, too long." I was like "Sir, have you read your posts? Here's a short version of why you're wrong." (In brief, if you depreciate models over even say 12 months they are already profitable. Given they still offer 3.5, three years is probably a more fair depreciation schedule. On those terms, they're super profitable)

No answer.

By mrandish 2025-10-0318:25 > I wrote him a long note about how wrong his analysis on oAI was earlier this year.

Why don't you consider posting it on HN, either as a response in this thread or as its own post? There's clearly interest in teasing out how much of OAI's unprecedented valuation is hype and/or justified.

The depreciation schedule doesn't affect long term profitability. It just shifts the profits/loss in time. It's a tool to make it appear like you paid for something while it's generating revenue. Any company would look really profitable for a while if it chose long enough depreciation schedules (e.g. 1000 years), but that's just deferring losses until later.
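
To make the timing point concrete, here is a minimal sketch of straight-line depreciation for a single model. Every number is hypothetical (a training cost of 300, a gross margin of 150 per year, three years of revenue, in arbitrary units); none of it is taken from OpenAI's accounts, and `yearly_profit` is an illustrative helper rather than any standard accounting API.

```python
def yearly_profit(training_cost, yearly_margin, revenue_years, depreciation_years):
    """Accrual profit per year for one model under straight-line depreciation.

    The training cost is spread evenly over `depreciation_years`; the model
    earns `yearly_margin` for `revenue_years` and nothing afterwards.
    """
    horizon = max(revenue_years, depreciation_years)
    charge = training_cost / depreciation_years
    profits = []
    for year in range(horizon):
        margin = yearly_margin if year < revenue_years else 0.0
        expense = charge if year < depreciation_years else 0.0
        profits.append(margin - expense)
    return profits


# Hypothetical model: costs 300 to train, earns 150/year for 3 years.
for schedule in (1, 3, 6):
    profits = yearly_profit(300.0, 150.0, revenue_years=3, depreciation_years=schedule)
    print(schedule, profits, "lifetime total:", sum(profits))
```

With these inputs the one-year schedule shows a loss up front, the three-year schedule shows steady profit, and the six-year schedule looks best early and pushes losses into years with no revenue. The lifetime total is 150 in all three cases, so the schedule changes when profit appears, not how much there is.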

No it would in fact be appropriate to match the costs of the model training (incurred over a few months) with the lifetime of its revenue. That’s not some weird shifting - it helps you understand where the business is at. In this case on a per model basis, very profitable.

You're right. But this also doesn't mean singron is wrong.

> it would in fact be appropriate to match the costs of the model training with the lifetime of its revenue

Think about their example. If the depreciation is long lived then you are still paying those costs. You can't just ignore them.

The problem with your original comment is that it is too simple of a model. You also read singron's comment as a competing model instead of "your model needs to account for X".

You're right that it provides clues that the business might be more profitable in the future than current naïve analysis would suggest but you also need to be careful in how you generalize your additional information

By vessenes 2025-10-0415:37 I think we are, like you suggest, just talking past each other. Depreciation is supposed to conceptually be tied to the useful life of an asset; a 1000 year depreciation schedule might be reasonable for say a Roman bridge.

When we talk accrual basis profits we are trying as best we can to match the revenues and expenses even if they occur at different points in the useful life of the asset.

Almost zero kibitzers or journalists take this accrual mindset into account when they use the word profit - but that’s what profit is, excess revenue applied against a certain period’s fairly allocated expense.

What they generally mean is cashflow; oAI has negative cashflow and is likely to for quite a while. No argument there. I think it’s worth disambiguating these for people though because career and investment decisions in our industry depend on understanding the business mechanics as well as the financial ones. Right now financially simplistic hot takes seem to get a lot of upvotes. I worry this is harming younger engineers and founders.

By surgical_fire 2025-10-0319:00 The problem of this "depreciation" rationale is that it presumes that all the cost is in training, ignoring that actually serving the models is also very expensive. I certainly don't believe they would be profitable, and vague gestures at some hypothetical depreciation sounds like accounting shenanigans.

Also, the whole LLM industry is mostly trying to generate hype, at a possible future where it is vastly more capable than it currently is. It's unclear if they would still be generating as much revenue without this promise.

By BoorishBears 2025-10-03 18:55 Your brief doesn't make sense, maybe you need to expand?

They're only offering 3.5 for legacy reasons: pre-Deepseek, 3.5 did legitimately have some things that open source hadn't caught up on (like world knowledge, even as an old model), but that's done.

Now the wins come from relatively cheap post-training, and a random Chinese food delivery company can spit out a 500B parameter LLM that beats what OpenAI released a year ago, for free, with an MIT license.

Also as you release models you're enabling both distillation of your own models, and more efficient creation of new models (as the capabilities of the LLMs themselves are increasingly useful for building, data labeling, etc.)

I think the title is inflammatory, but the reality is if AGI is really around the corner, none of OpenAI's actions are consistent with that.

Utilizing compute that should be catapulting you towards the imminent AGI to run AI TikTok and extract $20 from people doesn't add up.

They're on a treadmill with more competent competitors than anyone probably expected grabbing at their ankles, and I don't think any model that relies on them pausing to cash in on their progress actually works out.

> expand brief please

OK!

Longcat-flash-thinking is not super popular right now; it doesn't appear on the top 20 at open router. I haven't used it, but the market seems to like it a lot less than grok, anthropic or even oAI's open model, oss-20b. Like I said I haven't tried it.

And to your point, once models are released open, they will be used in DPO post-training / fine-tuning scenarios, guaranteed, so it's hard to tell who's ahead by looking at an older open model vs a newer one.

Where are the wins coming from? It seems to me like there's a race to get efficient good-enough stuff in traditional form factors out the door; emphasis on efficiency. For the big companies it's likely maxing inference margins and speeding up response. For last year's Chinese companies it was dealing with being compute poor - similar drivers though. If you look at DeepSeek's released stuff, there were some architectural innovations, thinking mode, and a lottt of engineering improvements, all of which moved the needle.

On treadmills: I posit the oAI team is one of the top 4 AI teams in the world, and it has the best fundraiser and lowest cost of capital. My oAI bull story is this: if capital dries up, it will dry up everywhere, or at the least it will dry up last for a great fundraiser. In that world, pausing might make sense, and if so, they will be able to increase their cash from operations faster than any other company. While a productive research race is on, I agree they shouldn't pause. So far they haven't had to make any truly hard decisions though -- each successive model has been profitable and Sam has been successful scaling up their training budget geometrically -- at some point the questions about operating cashflow being deployed back to R&D and at what pace are going to be challenging. But that day is not right now.

By BoorishBears 2025-10-03 20:16 You're arguing a different point than the article, and in some ways even agreeing with it.

The article is not saying OpenAI must fail: it's saying OpenAI is not "The AGI Company of San Francisco". They're in the same bare knuckle brawl as other AI startups, and your bull case is essentially agreeing but saying they'll do well in the fight.

> In fact, the only real difference is the amount of money backing it.

> Otherwise, OpenAI could be literally any foundation model company, [...] we should start evaluating OpenAI as just another AI startup

Any startup would be able to raise with their numbers... they just can't ask for trillions to build god-in-a-box.

It's going to be a slog because we've seen that there are companies that don't even have to put 1/10th their resources into LLMs to compete robustly with their offerings.

OpenRouter doesn't capture 1/100th of open weight usage, but more importantly the fact that Longcat is legitimately robustly competitive with SOTA models from a year ago is the actual signal. It's a sneak peek of what happens if the AGI case doesn't pan out and OpenAI tries to get off the treadmill: within a year a lot of companies catch up.

By vessenes 2025-10-0416:54 Love this. Let’s start a podcast

I agree with you. Even Anthropic's CEO said EXACTLY this. He said, if you actually look at the lifecycle of each model as its own business, then they are all very profitable. It's just that while we're making money from Model A, we've started spending 10x on Model B.
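
The "each model is its own profitable business" framing can be sketched with a toy cash-flow model. The 10x cost growth per generation is the figure from the comment above; everything else is a hypothetical assumption made for illustration: each model is trained in one year, earns back twice its training cost the following year, and then retires. The function names are illustrative.

```python
def model_profit(cost, payback_multiple):
    """Lifetime profit of a single model: what it earns minus what it cost."""
    return cost * payback_multiple - cost


def company_cashflow(first_cost, growth, payback_multiple, generations):
    """Company cash flow per year when model g is trained in year g
    and earns its entire margin in year g + 1 (hypothetical timing)."""
    costs = [first_cost * growth**g for g in range(generations)]
    flows = []
    for year in range(generations + 1):
        spend = costs[year] if year < generations else 0.0
        income = costs[year - 1] * payback_multiple if year >= 1 else 0.0
        flows.append(income - spend)
    return flows


flows = company_cashflow(first_cost=1.0, growth=10, payback_multiple=2.0, generations=3)
print("per-model profits:", [model_profit(1.0 * 10**g, 2.0) for g in range(3)])
print("company cash flow by year:", flows)
```

In this toy setup every model is profitable on its own, yet the company's cash flow is negative and worsening in every year it keeps training a bigger successor. It only turns positive in the year training stops, which is the scenario the replies go on to argue about.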

Exponential cost growth with, at best, linear improvement is not a promising business projection.

Perhaps at some point we'll say "this model is profitable and we're just gonna stick with that".

> Perhaps at some point we'll say "this model is profitable and we're just gonna stick with that".

I don't follow it that closely but my perception is that's already happened. Various flavors of GPT 4 are still current products, just at lower prices.

By majewsky 2025-10-0323:10 That is not what GP was saying. They were saying that a foundation model company would say "$CURRENT_MODEL is so good that it is no use training $NEXT_MODEL right now", which I don't think is the current stance of any of those companies.

Given that they are all constantly spending money on R&D for the next model, it does not really matter how long they get to offer some of the older models. The massive R&D spend is still incurred all the time.

Yes and it’s furnished to some of the world’s best investors who are happy to pay valuations between 150 and 500b to access it

By surgical_fire 2025-10-0319:07 Among those are the guys that gave money to WeWork.

Make of that what you will.

I am not really betting on it.

By jrflowers 2025-10-0321:59 I don’t quite understand your wording here. Do we have to “pay valuations between 150 and 500b” to access the data that supports the justification of the valuation or can you just link to it?

By louiereederson 2025-10-0319:17 the logic of a happy WeWork investor

By pmdr 2025-10-0320:51 Yeah, no investor has ever been conned before /s

I do hope Ed's wrong, I actually stand to financially benefit from it.

> Given they still offer 3.5, three years is probably a more fair depreciation schedule.

But the usage should drop considerably as soon as next model is released. Many startups down the line are existing in hope of better model. Many others can switch to a better/cheaper model quite easily. I'd be very surprised if the usage of 3.5 is anywhere near what it was before release of the next generation, even given all the growth. New users just use the new models

Probably true! If revs shift to a new cheaper model that’s not bad though.

By AbstractH24 2025-10-0414:51 I probably agree!

By treadump 2025-10-0318:48 OpenAI expects multi-year losses before turning consistently profitable, so saying they are already profitable based solely on an aggressive depreciation assumption overstates the case

Why 3.5 or 3 years for depreciations? Models have been retrained much faster than that. I would guess more in the 3 months range.

By t-writescode 2025-10-0318:39 Because businesses and people rely on consistentish responses, which you can get on models you’ve already validated your prompts on.

Dropping old models means breaking paying customers, which is bad for business.

By nzeid 2025-10-0319:07 This perspective ignores headwinds from copyright infringement lawsuits and increasingly popular LLM scraping protections.

By dom96 2025-10-0319:04 Can you share your note to him and where he responded that way? Is it public?

That's disappointing to hear. I've generally liked Ed's writing but all his posts on AI / OAI specifically feel like they come from a place of seething animosity more than an interest in being critical or objective. At least one of his posts repeated the claim that essentially all AI breakthroughs in the last few years are completely useless, which is just trainwreck hyperbole no matter where you lie on the spectrum as far as its utility or potential. I regularly use it for things now that feel like genuine magic, in wonder to the point of annoying my non-technical spouse, for whom it's just all stuff computers can do. I don't know if OpenAI is going to be a gazillion dollar business in ten years but they've certainly locked in enough customers - who are getting value out of it - to sustain for a while.

By fragmede 2025-10-04 15:16 That's not remotely surprising though if you've known anyone like how Ed presents himself through his writing and videos. I don't know him personally, so I can't speak to what he's actually like, but there's something about his writing style and how he comes off in his videos: a conviction that he's right and he's got the secret truth and why won't everybody believe him. Cassandra's curse, perhaps, but naming it doesn't convince me.

By triceratops 2025-10-03 17:51 > He wrote back and said "LOL, too long."

Some nerve

By gorjusborg 2025-10-03 18:04 Seriously. It took more time to respond with disrespect than to just ignore it.

By triceratops 2025-10-03 18:10 I meant more the nerve to say it was "too long". Ed Zitron may be right but he's got no right to accuse anyone else of writing too many words.

By sharkjacobs 2025-10-03 18:16 It's a rude response from someone whose public persona is famously rude and abrasive. It's also worth considering the difference between publishing 10000 words to an audience of subscribers, and sending 10000 words unsolicited to a stranger.

By azemetre 2025-10-0323:38 There’s no proof that this exchange ever happened. Happy to eat crow if proven wrong. I’ve disagreed and argued with Ed on his subreddit, he never banned me or acted hostile.

Especially rude given, if he was feeling it was too long, he could've had an AI summarize it.

But this shows a certain intellectual laziness/dishonesty and immaturity in the response.

Someone's taken the time to write a response to your article, you can choose to learn from it (assuming it's not an angry rant), or you could just ignore it.

In fact, that completely dismisses this stupid article for me.

I mean, using AI to summarise someone's arguments and then using it in any way would be considerably more dishonest, lazy and rude.

Like, expectation that he will treat an unsolicited email with all seriousness is absurd in the first place, but ai summarize it would be wtf.

By atonse 2025-10-0319:45 Not responding was a perfectly good neutral action.

Instead they chose to respond with a "LOL" and saying it was too long, like they're a pretty unintellectual person.

Let's agree to disagree.

By bayarearefugee 2025-10-03 18:23 If you spend more money training the model and offering it as a service (with all the costs that that entails) than you earn back directly from that model's usage, it can only be profitable if you use voodoo economics to fudge it.

Luckily we live in a time period where voodoo economics is the norm, though eventually it will all come crashing down.

You’re right, but that’s not what’s happening. Every major model trained at Anthropic and oAI has been profitable. Inference margins are on the order of 80%.

By oblio 2025-10-0319:18 > Inference margins are on the order of 80%.

Source, please?

By true_religion 2025-10-03 18:49 That’s true, but OpenAI and its proponents say each model is individually profitable so if R&D ever stops then the company as a whole will be profitable.

By nickff 2025-10-0319:02 The problem with this argument is that if R&D ever stops, OpenAI will not be differentiated (because everyone else will be able to catch up), so their pricing power will disappear, and they won't be able to charge much more than the inference costs.

By CuriouslyC 2025-10-03 19:19 You're missing that they're pricing the value of models progressing them towards AGI, and their own use of that model for research and development. You can argue the first one, and the second is probably not fully spun up yet (though you can see it's building steam fast), but it's not total fantasy economics, it's just highly optimistic because investors aren't going to buy the story from people who're hedging.

By bayarearefugee 2025-10-03 19:54 > progressing them towards AGI

I don't see any reason to believe that LLMs, as useful as they can be, ever lead to AGI.

Believing in this as an eventuality is frankly a religious belief.

By CuriouslyC 2025-10-03 20:11 LLMs by themselves are not going to lead to AGI, agreed. However, there are solid reasons to believe that LLMs as orchestrators of other models could get us there. LLMs have the potential to be very good at turning natural language problems into "games" that a model like MuZero can learn to solve in a superhuman way.

what exactly does “come crashing down” mean? a service with 700 million users will cease to exist? close shop, oooops our bad?

same “come crashing down” arguments permeated HN on Uber and Meta monetizing mobile and …

nothing is crashing down at this type of “volume”/user base…

If you've been around, imgur, basically. All the image hosting solutions before it (imageshack) and all the file hosting solutions before them. Yahoo.

"Crashing" in this context doesn't mean something goes completely away, just that its userbase dwindles to 1-5-10% of what it once was and it's no longer part of the zeitgeist (again, Yahoo).

By bdangubic 2025-10-0320:37 the only way I can see that happening here (userbase dwindles to 1-5-10% of what it once was) is if another better service came about but I don't think that is what @bayarearefugee is talking about when s/he said 'come crashing down' ?

I mean they are clearly trying to get some attention here. I wouldn't bite.

OpenAI is many things but I don't think I would call it boring or desperate. The title seems more desperate to me.